Originally from KDnuggets https://ift.tt/2Qq55WF

How Rasa NLU is moving past Intents

Isn’t it about time we get past intents?

If you have ever developed a Conversational AI agent using NLU, you know how often users don’t follow the happy path.

They may say or type responses that make perfect sense, yet still fall outside of any intent.

For example, what happens if a user asks about a refund by typing just their order number?

What is the intent of that message?

The order number is obviously an entity, but since it doesn’t map to a clear intent, it will trigger a retrieval action that combines all of your intents into a single FAQ. In this way, we have already moved past intents and right into context!

Rasa is taking this insight to the next level: on May 25th, Alan Nichol, the co-founder and CTO of Rasa, will share how Rasa is moving beyond intents and using context!

Featured Speaker

Alan Nichol, Co-founder & CTO @ Rasa

NLU: Going Beyond Intents & Entities

In this talk, Alan will share how Rasa is going beyond intents and entities.

This promises to be a very exciting talk, as NLP/NLU is going through a major revolution.

Develop your own AI Agent in our Certified NLU Workshop

Join our full-day NLU Workshop on May 27th to create your own Conversational Agent and get certified in Conversational AI Development.

How Rasa NLU is moving past Intents was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Via https://becominghuman.ai/how-rasa-nlu-is-moving-past-intents-1d1c6383eac3?source=rss—-5e5bef33608a—4

Data Science Books You Should Start Reading in 2021

Originally from KDnuggets https://ift.tt/3v5O8j0

The Three Edge Case Culprits: Bias Variance and Unpredictability

Originally from KDnuggets https://ift.tt/3ncJixN

What is Adversarial Neural Cryptography?

Originally from KDnuggets https://ift.tt/3sCTGjb

58 Resources To Help Get Started With Deep Learning ( In TF )

Fifty-eight blogs/videos to enter Deep Learning with TensorFlow ( along with 8 memes to keep you on track )

Deep Learning can be fascinating, especially if you’re already in love with other ML algorithms. DL is all about huge neural networks with numerous parameters and different layers, each performing a specific task.

This checklist will give you a smooth (and safer) start with Deep Learning in TensorFlow.

Warning: This story uses some dreaded terminology. Readers who have jumped directly into DL, skipping basic ML algorithms, are advised to proceed with caution.

Without wasting time, let’s get started.

The 30,000 feet overview (Contents)

The Basics

Mathematics of backpropagation (bonus, refresher),

- Derivatives (Khan Academy)

- Partial Derivatives (Khan Academy)

- Chain Rule (Khan Academy)

- Derivatives of Activation functions

TensorFlow Essentials

Entering the DL World

- Activation Functions

- Dense Layers

- Batch Normalization

- Dropout Layers

- Weight Initialization Techniques

⚔️ Training the Model

- Optimizers (Variants of Gradient Descent)

- Loss Functions

- Learning Rate Schedule

- Early Stopping

- Batch Size (and Epochs vs. Iterations vs. Batch Size)

- Metrics

- Regularization

More from the Author

Contribute to this story

The Basics

Understanding Neural Networks

Artificial Neural Networks are systems inspired by biological neurons. They are used to solve complex problems because they can approximate the functions that map inputs to their labels.

By Tony Yiu,

And 3Blue1Brown is a must,

☠️ Your attention here, please. The following topic, which DL folks call “backpropagation”, is a serial killer for many ML beginners. It roams around with creepy weapons like partial derivatives and the chain rule, so beginners with weak mathematics are advised to proceed with caution.

Backpropagation

Backpropagation computes the gradients of the cost function with respect to the parameters of an ML model. An optimizer such as gradient descent then uses those gradients to move the parameters toward a minimum of the cost function. Having a strong understanding of how backpropagation works under the hood is essential in deep learning.
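To see the chain rule at work, here is a minimal, framework-free sketch in Python. It assumes a single neuron with a sigmoid activation and a squared-error loss. Both are our own illustrative choices, not taken from the linked articles, and forward, backward and numerical_grad are hypothetical helper names. The backward pass multiplies the local derivatives from the loss back to w and b. A finite-difference estimate is used only to check the result.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(w: float, b: float, x: float, y: float):
    """Forward pass for one neuron: z = w*x + b, a = sigmoid(z), loss = (a - y)^2."""
    z = w * x + b
    a = sigmoid(z)
    loss = (a - y) ** 2
    return z, a, loss


def backward(w: float, b: float, x: float, y: float):
    """Backpropagation: chain the local derivatives from the loss back to w and b."""
    _, a, _ = forward(w, b, x, y)
    dloss_da = 2.0 * (a - y)      # d(loss)/da
    da_dz = a * (1.0 - a)         # sigmoid'(z), written in terms of a
    dloss_dz = dloss_da * da_dz   # chain rule
    # z = w*x + b, so dz/dw = x and dz/db = 1
    return dloss_dz * x, dloss_dz * 1.0


def numerical_grad(w: float, b: float, x: float, y: float, eps: float = 1e-6):
    """Central finite differences, used only to check the analytic gradients."""
    gw = (forward(w + eps, b, x, y)[2] - forward(w - eps, b, x, y)[2]) / (2 * eps)
    gb = (forward(w, b + eps, x, y)[2] - forward(w, b - eps, x, y)[2]) / (2 * eps)
    return gw, gb


# One gradient-descent step that uses the backpropagated gradients
w, b, x, y, lr = 0.5, -0.2, 1.5, 1.0, 0.1
grad_w, grad_b = backward(w, b, x, y)
print("backprop:", grad_w, grad_b)
print("numerical:", numerical_grad(w, b, x, y))
w, b = w - lr * grad_w, b - lr * grad_b
```

In a real network, TensorFlow computes these gradients automatically (for example with tf.GradientTape). The bookkeeping is the same chain rule, applied layer by layer.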

- Back Propagation Neural Network: Explained With Simple Example

- A Step by Step Backpropagation Example

- Back-Propagation is very simple. Who made it Complicated ?

This 3Blue1Brown playlist might be helpful,

TensorFlow Essentials

Although the TensorFlow website is enough for a beginner to get started, I would like to focus on some important topics.

Dataset

tf.data.Dataset is an important class that helps us load huge datasets easily and feed them to our NNs. It has a bunch of useful methods. For example, using the dataset.map() method along with the functions in the tf.image module, we can perform a number of operations on the images in our data.
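The tf.data API chains lazy transformations such as dataset.map() and dataset.batch(). The sketch below imitates that idea with plain Python generators, so it runs without TensorFlow. It illustrates a map-then-batch pipeline and is not the tf.data implementation; map_samples, batch_samples and normalize are hypothetical names.

```python
from typing import Callable, Iterable, Iterator, List, TypeVar

T = TypeVar("T")
U = TypeVar("U")


def map_samples(samples: Iterable[T], fn: Callable[[T], U]) -> Iterator[U]:
    """Lazily apply a preprocessing function to each sample (the idea behind dataset.map)."""
    for sample in samples:
        yield fn(sample)


def batch_samples(samples: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Group samples into lists of batch_size; the last batch may be smaller."""
    batch: List[T] = []
    for sample in samples:
        batch.append(sample)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch


def normalize(pixels: List[int]) -> List[float]:
    """Hypothetical preprocessing step: scale 8-bit pixel values to [0, 1]."""
    return [p / 255.0 for p in pixels]


images = [[0, 255], [51, 102], [255, 255], [0, 0], [102, 204]]  # toy "images"
pipeline = batch_samples(map_samples(images, normalize), batch_size=2)
batches = list(pipeline)  # nothing is computed until we iterate
```

With TensorFlow installed, the rough equivalent would start from tf.data.Dataset.from_tensor_slices(...) and chain .map(...) and .batch(...). That route also lets TensorFlow parallelize and prefetch the work.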

TensorBoard

TensorBoard helps you visualize the values of various metrics during the training of your model. Examples are its accuracy, or the MAE (Mean Absolute Error) in the case of a regression problem.

The official docs,

TensorBoard.dev can help you share the results of your experiments easily,

TensorBoard.dev – Upload and Share ML Experiments for Free

Some other helpful resources,

- Using TensorBoard in Notebooks | TensorFlow

- Deep Dive into TensorBoard: Tutorial With Examples – neptune.ai

Entering the DL World

Activation Functions

Activation functions introduce non-linearity into our NN, so that it can approximate the function that maps our input data to the labels. Every activation function has its own pros and cons, so the choice will always depend on your use case.

- Activation Functions : Sigmoid, ReLU, Leaky ReLU and Softmax basics for Neural Networks and Deep…

- How to Choose an Activation Function for Deep Learning – Machine Learning Mastery

- Deep Learning: Which Loss and Activation Functions should I use?

Also, have a look at the tf.keras.activations module to see the activation functions available in TensorFlow,

Module: tf.keras.activations | TensorFlow Core v2.4.1
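For reference, the most common activation functions from the articles above are short enough to write out directly. This is a plain-Python sketch that works on scalars, plus a list of logits for softmax. The leaky ReLU slope alpha=0.01 is an arbitrary choice here, and TensorFlow's own defaults may differ.

```python
import math
from typing import List


def sigmoid(x: float) -> float:
    """Squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def relu(x: float) -> float:
    """Passes positive values through and zeroes out negatives."""
    return max(0.0, x)


def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """Like ReLU, but keeps a small slope alpha for negative inputs."""
    return x if x > 0 else alpha * x


def softmax(logits: List[float]) -> List[float]:
    """Turns a vector of scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]
```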


Dense Layers

Dense layers are important in almost every NN. You’ll find them in architectures for text classification, image classification and GANs alike. Here’s a good read by Hunter Heidenreich about tf.keras.layers.Dense, to help you understand it better,

Understanding Keras — Dense Layers
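A dense layer computes activation(dot(input, kernel) + bias). The following plain-Python sketch spells that out for a single sample. It assumes a kernel laid out as (number of inputs, number of units), which is how Keras stores it. The names dense and relu are illustrative, not TensorFlow functions.

```python
from typing import Callable, List, Sequence


def relu(v: float) -> float:
    return max(0.0, v)


def dense(inputs: Sequence[float],
          kernel: Sequence[Sequence[float]],
          bias: Sequence[float],
          activation: Callable[[float], float] = lambda v: v) -> List[float]:
    """Compute activation(inputs @ kernel + bias) for one sample.

    kernel[i][j] is the weight from input i to unit j, so kernel has
    shape (n_inputs, n_units); the default activation is the identity.
    """
    outputs = []
    for j in range(len(bias)):
        z = sum(inputs[i] * kernel[i][j] for i in range(len(inputs))) + bias[j]
        outputs.append(activation(z))
    return outputs


# 2 inputs -> 3 units with ReLU
out = dense([1.0, 2.0],
            [[1.0, 0.0, -1.0],
             [0.0, 1.0, -1.0]],
            [0.5, 0.0, 0.0],
            activation=relu)
```

Every unit sees every input, which is why the layer is called "dense" (fully connected).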

Batch Normalization

Batch normalization is one of the most effective techniques for accelerating the training of NNs. It normalizes a layer’s inputs over each mini-batch, an idea originally motivated by tackling internal covariate shift. Some good blogs you may read,

- A Gentle Introduction to Batch Normalization for Deep Neural Networks – Machine Learning Mastery

- How to use Batch Normalization with Keras? – MachineCurve

From Richmond Alake,

Batch Normalization In Neural Networks Explained (Algorithm Breakdown)

Andrew Ng’s explanation on Batch Normalization,

And lastly, make sure you explore the tf.keras.layers.BatchNormalization class,

tf.keras.layers.BatchNormalization | TensorFlow Core v2.4.1
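In training mode, batch normalization standardizes each feature using the mean and variance of the current mini-batch. It then applies a learned scale (gamma) and shift (beta). The sketch below handles one feature in plain Python, and eps is a small constant for numerical stability. At inference time, Keras uses moving averages of the mean and variance instead of batch statistics; this sketch omits that part.

```python
import math
from typing import List


def batch_norm(batch: List[float], gamma: float = 1.0, beta: float = 0.0,
               eps: float = 1e-3) -> List[float]:
    """Training-mode batch normalization of a single feature across a mini-batch."""
    n = len(batch)
    mean = sum(batch) / n
    var = sum((x - mean) ** 2 for x in batch) / n  # batch (biased) variance
    # Standardize to zero mean / unit variance, then scale and shift.
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]
```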

Dropout

Dropout is widely used in NNs, as it is an efficient way to fight overfitting. During training, it randomly sets activations to 0 with a given dropout rate: the probability of an activation being set to 0 (the rate argument of tf.keras.layers.Dropout).

It’s always better to start with a question in mind,

- Why does adding a dropout layer improve deep/machine learning performance, given that dropout suppresses some neurons from the model?

- A Gentle Introduction to Dropout for Regularizing Deep Neural Networks – Machine Learning Mastery

By Amar Budhiraja,

Learning Less to Learn Better — Dropout in (Deep) Machine learning

Understanding the math behind Dropout, in a simple and lucid manner,

Simplified Math behind Dropout in Deep Learning

Make sure you explore the official TF docs for tf.keras.layers.Dropout ,

tf.keras.layers.Dropout | TensorFlow Core v2.4.1
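The sketch below shows "inverted" dropout, which is what tf.keras.layers.Dropout does during training. Each activation is zeroed with probability rate, and the surviving ones are scaled by 1 / (1 - rate) so that their expected value is unchanged. It is plain Python with a hypothetical dropout helper. At inference time, the layer passes its inputs through unchanged.

```python
import random
from typing import List, Optional


def dropout(activations: List[float], rate: float, training: bool = True,
            rng: Optional[random.Random] = None) -> List[float]:
    """Inverted dropout: zero each activation with probability `rate` during
    training and scale the survivors by 1 / (1 - rate)."""
    if not training or rate == 0.0:
        return list(activations)  # no-op at inference time
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    rng = rng or random.Random()
    scale = 1.0 / (1.0 - rate)
    return [0.0 if rng.random() < rate else a * scale for a in activations]
```

Scaling at training time, rather than at inference time, means the trained network can be used as-is for predictions.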

Weight Initialization Techniques

In Dense layers, the parameters W and b, i.e. the weights and the biases, are initialized before training. In Keras, by default, the weights are initialized randomly and the biases to zeros. They are then optimized by gradient descent, using gradients computed by backpropagation. But there are some smart ways to initialize these parameters so that the loss function can quickly reach a minimum. Some good reads are,

By Saurabh Yadav,

- Weight Initialization Techniques in Neural Networks

- Weight Initialization for Deep Learning Neural Networks – Machine Learning Mastery

The story below, by James Dellinger, gives a glimpse of how and where we should use different weight initialization techniques,

Weight Initialization in Neural Networks: A Journey From the Basics to Kaiming

Lastly, explore the tf.keras.initializers module,

Keras documentation: Layer weight initializers
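Two popular schemes from the reads above are sketched here in plain Python:

- Glorot (Xavier) uniform, the default kernel initializer of Keras Dense layers.
- He (Kaiming) uniform, commonly paired with ReLU.

Both sample from U(-limit, limit); only the limit differs. The helper names and the (fan_in, fan_out) list-of-lists layout are our own assumptions.

```python
import math
import random
from typing import List


def glorot_uniform(fan_in: int, fan_out: int, rng: random.Random) -> List[List[float]]:
    """Glorot/Xavier uniform: U(-limit, limit) with limit = sqrt(6 / (fan_in + fan_out))."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_out)] for _ in range(fan_in)]


def he_uniform(fan_in: int, fan_out: int, rng: random.Random) -> List[List[float]]:
    """He/Kaiming uniform: U(-limit, limit) with limit = sqrt(6 / fan_in)."""
    limit = math.sqrt(6.0 / fan_in)
    return [[rng.uniform(-limit, limit) for _ in range(fan_out)] for _ in range(fan_in)]
```

For Glorot uniform, the weight variance works out to limit^2 / 3 = 2 / (fan_in + fan_out). This keeps the scale of activations and gradients roughly stable from layer to layer.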

Training the Model

Optimizers (Variants of Gradient Descent)

A number of different optimizers are available in the tf.keras.optimizers module. Each optimizer is a variant of, or an improvement on, the gradient descent algorithm.

By Imad Dabbura,

By Raimi Karim,

10 Gradient Descent Optimisation Algorithms
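To compare plain gradient descent with one of its variants, the sketch below minimizes the toy function f(x) = (x - 3)^2 with and without momentum. The update rule follows the form documented for the SGD optimizer in Keras: velocity = momentum * velocity - learning_rate * g, then w = w + velocity. The function, step count and learning rate are illustrative assumptions.

```python
from typing import Callable


def sgd(grad: Callable[[float], float], x0: float, lr: float, steps: int,
        momentum: float = 0.0) -> float:
    """Gradient descent on a 1-D function; momentum=0.0 gives plain gradient descent."""
    x, velocity = x0, 0.0
    for _ in range(steps):
        velocity = momentum * velocity - lr * grad(x)
        x = x + velocity
    return x


def grad_f(x: float) -> float:
    """Gradient of f(x) = (x - 3)^2, whose minimum is at x = 3."""
    return 2.0 * (x - 3.0)


plain = sgd(grad_f, x0=0.0, lr=0.01, steps=100)
with_momentum = sgd(grad_f, x0=0.0, lr=0.01, steps=100, momentum=0.9)
```

With the same learning rate and number of steps, the momentum run ends much closer to the minimum. Accumulated past gradients keep pushing it in a consistent direction.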

Loss Functions

Cost functions (also called loss functions) penalize the model for incorrect predictions. A loss function measures how far the model’s predictions are from the targets, and thus how much improvement is needed. Different loss functions suit different use cases, which are detailed thoroughly in the stories below,

- Loss and Loss Functions for Training Deep Learning Neural Networks – Machine Learning Mastery

- How to Choose Loss Functions When Training Deep Learning Neural Networks – Machine Learning Mastery

- Introduction to Loss Functions
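Three common losses are written out below in plain Python:

- Mean squared error, for regression.
- Binary cross-entropy, for binary classification.
- Categorical cross-entropy, for multi-class classification.

The eps clipping avoids log(0). The helper names echo the Keras losses with the same purpose, but this is our own sketch, not the Keras implementation.

```python
import math
from typing import List


def mean_squared_error(y_true: List[float], y_pred: List[float]) -> float:
    """Regression: average of squared differences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_crossentropy(y_true: List[float], y_pred: List[float], eps: float = 1e-7) -> float:
    """Binary classification: y_pred holds predicted probabilities of the positive class."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))
    return total / len(y_true)


def categorical_crossentropy(y_true: List[float], y_pred: List[float], eps: float = 1e-7) -> float:
    """Multi-class, single sample: y_true is one-hot, y_pred is a probability vector."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))
```

Cross-entropy grows sharply when the model is confidently wrong. That is exactly the kind of prediction we want to penalize most.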

A great video from Stanford,

Learning Rate Schedule

In practice, the learning rate of an NN model is often decreased over time. As the value of the loss function decreases and gets close to a minimum, we take smaller steps. Note that the learning rate decides the step size of gradient descent.

- Using Learning Rate Schedules for Deep Learning Models in Python with Keras – Machine Learning Mastery

- Learning Rate Schedule in Practice: an example with Keras and TensorFlow 2.0

Make sure you explore the Keras docs,

Keras documentation: LearningRateScheduler
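tf.keras.callbacks.LearningRateScheduler takes a function of the epoch index and the current learning rate that returns the new learning rate. The step-decay schedule below is written to that signature. The initial rate, drop factor and interval are assumed values for illustration, and an exponential variant is included for comparison.

```python
import math

INITIAL_LR = 0.1   # assumed starting learning rate
DROP = 0.5         # multiply the learning rate by this factor...
EPOCHS_DROP = 10   # ...every EPOCHS_DROP epochs


def step_decay(epoch: int, lr: float) -> float:
    """Step decay with the (epoch, lr) signature; the current lr is ignored
    because the new rate is computed from the epoch index alone."""
    return INITIAL_LR * DROP ** math.floor(epoch / EPOCHS_DROP)


def exponential_decay(epoch: int, lr: float, k: float = 0.1) -> float:
    """Smooth alternative: INITIAL_LR * exp(-k * epoch)."""
    return INITIAL_LR * math.exp(-k * epoch)
```

Assuming TensorFlow is installed, the schedule could be passed as tf.keras.callbacks.LearningRateScheduler(step_decay) in the callbacks list of model.fit.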

Early Stopping

Early stopping is a technique wherein we stop training our model when a given metric, for example the validation loss, stops improving. This halts training before the model overfits, avoiding excessive training that can worsen the results.

- A Gentle Introduction to Early Stopping to Avoid Overtraining Neural Networks – Machine Learning Mastery

- Introduction to Early Stopping: an effective tool to regularize neural nets

By Upendra Vijay,

Early Stopping to avoid overfitting in neural network- Keras
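Patience-based early stopping is the idea behind tf.keras.callbacks.EarlyStopping and its patience and min_delta arguments. It can be sketched as a small loop over the validation losses recorded at each epoch. The helper below is hypothetical and only reports the epoch at which training would stop. The real callback also stops training and can optionally restore the best weights.

```python
from typing import List, Optional


def early_stopping_epoch(val_losses: List[float], patience: int = 3,
                         min_delta: float = 0.0) -> Optional[int]:
    """Return the 0-based epoch at which training would stop, or None.

    Training stops once the monitored loss has failed to improve by more
    than min_delta for `patience` consecutive epochs.
    """
    best = float("inf")
    wait = 0  # epochs since the last improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None


losses = [1.0, 0.8, 0.7, 0.72, 0.71, 0.75, 0.6]
stop_at = early_stopping_epoch(losses, patience=3)
```

With patience=3, the three non-improving epochs after the best loss of 0.7 end training before the later improvement at 0.6 is ever seen. Choosing patience is therefore a trade-off between saving time and giving the model a chance to recover.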

Batch Size (and Epochs vs. Iterations vs. Batch Size)

Batch size is the number of samples in a mini-batch. Each batch is passed through the NN and the errors are averaged across its samples. The parameters of the NN are then updated once per batch. Also, see Mini-Batch Gradient Descent.

By Kevin Shen,

Effect of batch size on training dynamics

This should clear up a common confusion among beginners,

By SAGAR SHARMA,

Epoch vs Batch Size vs Iterations
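The relationship between samples, batch size, iterations and epochs is simple arithmetic. One iteration processes one batch, and one epoch is enough iterations to see every sample once. The helpers below are hypothetical and assume the last, smaller batch is kept rather than dropped.

```python
import math


def iterations_per_epoch(num_samples: int, batch_size: int) -> int:
    """Number of mini-batches (parameter updates) needed to see every sample once."""
    return math.ceil(num_samples / batch_size)


def total_iterations(num_samples: int, batch_size: int, epochs: int) -> int:
    """Total parameter updates over the whole training run."""
    return iterations_per_epoch(num_samples, batch_size) * epochs
```

For example, 2,000 samples with a batch size of 32 give 63 iterations per epoch (62 full batches plus one batch of 16). Ten epochs therefore mean 630 parameter updates.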

Metrics

Metrics are functions evaluated to see how good a model is. The primary difference between a metric and a cost function is that the cost function is used to optimize the parameters of the model in order to reach a minimum. A metric is only monitored: it can be calculated at each epoch or step to keep track of the model’s performance.

By Shervin Minaee,

20 Popular Machine Learning Metrics. Part 1: Classification & Regression Evaluation Metrics

By Aditya Mishra,

- Metrics to Evaluate your Machine Learning Algorithm

- How to Use Metrics for Deep Learning with Keras in Python – Machine Learning Mastery

Sometimes, we may require metrics that aren’t available in the tf.keras.metrics module. In this case, we can implement a custom metric, as described in this blog,

Custom Loss and Custom Metrics Using Keras Sequential Model API
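Here are a few metrics from the reads above, sketched in plain Python. Accuracy and precision are not differentiable, which is one reason they are monitored as metrics rather than used as losses. A custom Keras metric would compute something similar on tensors. The function names are our own.

```python
from typing import List


def accuracy(y_true: List[int], y_pred: List[int]) -> float:
    """Classification: fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_absolute_error(y_true: List[float], y_pred: List[float]) -> float:
    """Regression: average absolute difference (MAE)."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def precision(y_true: List[int], y_pred: List[int]) -> float:
    """Binary classification: of the samples predicted positive (1), the fraction truly positive."""
    true_pos = sum(1 for t, p in zip(y_true, y_pred) if p == 1 and t == 1)
    predicted_pos = sum(1 for p in y_pred if p == 1)
    return true_pos / predicted_pos if predicted_pos else 0.0
```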

Regularization

Regularization comprises the techniques that help prevent our model from overfitting, so that it generalizes better.

By Richmond Alake,

- Regularization Techniques And Their Implementation In TensorFlow(Keras)

- An Overview of Regularization Techniques in Deep Learning (with Python code)
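L2 regularization adds a penalty proportional to the sum of squared weights to the loss, which pulls the weights toward zero during training. The sketch below mirrors the form used by tf.keras.regularizers.L2, namely l2 * sum(w^2). The 0.01 factor and the helper names are illustrative assumptions.

```python
from typing import List


def l2_penalty(weights: List[float], l2: float = 0.01) -> float:
    """L2 penalty: l2 * sum(w^2)."""
    return l2 * sum(w * w for w in weights)


def regularized_loss(data_loss: float, weights: List[float], l2: float = 0.01) -> float:
    """Total loss = data loss + L2 penalty on the weights."""
    return data_loss + l2_penalty(weights, l2)


def l2_gradient_step(weights: List[float], grads: List[float], lr: float, l2: float) -> List[float]:
    """One gradient-descent step on data_loss + l2 * sum(w^2).

    The penalty contributes 2 * l2 * w to each gradient, shrinking the
    weights toward zero (hence the name "weight decay").
    """
    return [w - lr * (g + 2.0 * l2 * w) for w, g in zip(weights, grads)]
```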

Excellent videos from Andrew Ng,

✌️ More from the Author

✌️ Thanks

A super big read, right? Did you find some other blog/video/book useful that could be super cool for others as well? Well, you’re in the right place! Send an email to equipintelligence@gmail.com to showcase your resource in this story (the credit will, of course, go to you!).

Goodbye and a happy Deep Learning journey!


58 Resources To Help Get Started With Deep Learning ( In TF ) was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Startup Incubations & Accelerators For AI Startups

access to mentorship, funding, and experts

Continue reading on Becoming Human: Artificial Intelligence Magazine »

Who Are The Top Intelligent Document Processing (IDP) Vendors?

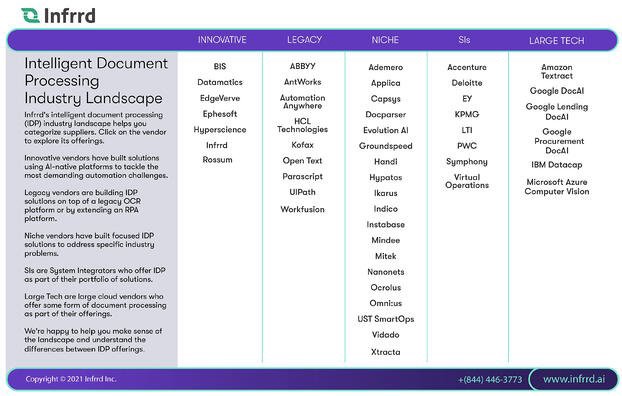

IDP Vendor Landscape Update December 2020: Holy smokes, we’ve reached 46 IDP solutions on the landscape. In this update, we’re adding 13 more IDP vendors/solutions to the list. These are IDP vendors that we get questions about as enterprises and partners try to figure out what’s what and who’s who.

Remember, if you need help navigating this landscape, we’re happy to be your guide.

We welcome the newest IDP vendors to the landscape:

The Intelligent Document Processing Landscape

Intelligent Document Processing (IDP) is rapidly emerging as a category of solutions that help you extract data from the documents that drive your business, like insurance claims, mortgage applications, contracts, purchase orders, invoices, engineering drawings, and so on.

Many vendors offer IDP products, but they’re all quite different, so the landscape isn’t easy to navigate. The category itself can go by different names. Some call it Cognitive Document Processing. Others call it Intelligent Document Capture (IDC). Some even refer to IDP solutions as OCR or Machine Learning OCR … which is very misleading. To keep it simple, we’ll stick with IDP in this post so we can help you make sense of it all.

Making Sense of the IDP Vendor Landscape

IDP solutions automatically extract data from unstructured, complex documents, then feed that data into business systems so a whole process can be automated. If you don’t have IDP, you’re extracting this data manually because it’s too difficult for older technologies like OCR to handle it accurately.

True IDP is very difficult, and few vendors actually do it well. Many vendors claim to have IDP solutions, but the scope of what their IDP can do varies A LOT. Choosing the right vendors for your shortlist depends on how the problem you need to solve aligns with the scope of each vendor’s IDP capability.

Five Categories of IDP Vendors

To help you navigate the landscape, we group the vendors into five categories:

- Innovative IDP vendors

- Legacy IDP vendors

- Niche IDP vendors

- Systems Integrators with IDP offerings

- Technology providers with IDP components

Vendors in each category tend to view the IDP challenge through a different lens and have therefore developed solutions that are different from one another.


The Top IDP Vendors

Listed in alphabetical order by category.

This list is based on vendors we often compete against, vendors we see in the market, and vendors covered by analysts. Our list includes only vendors that support enterprise-grade requirements and operations. If you want a solution for a small office or a single desktop, this list isn’t for you.

Innovative IDP Vendors

This is a younger group of up-and-coming vendors who have built solutions using AI-native platforms to tackle the most demanding automation challenges. Generally, Innovative vendors can handle documents that are more complex or have greater variation. As a result, they can often deliver a greater business impact than older technologies. Since these vendors are free from legacy technical debt, it’s easier for them to build next-gen, future-oriented solutions. Some vendors in this group have a narrower focus than others, so make sure their expertise aligns with your use cases.

Tip: If your use case involves complex, unstructured, messy documents, then take a good look at these vendors. We at Infrrd believe we’re the leader among these innovators, but don’t take our word for it — check out our IDP platform and decide for yourself.

Legacy (OCR or RPA) vendors

Legacy vendors are typically building IDP solutions on top of a legacy platform. To make this work, a vendor may bolt some AI or Machine Learning onto its legacy OCR or RPA products. Typically, these vendors have a broader portfolio of automation offerings and are not laser-focused on the IDP challenge. These IDP solutions may not be as agnostic to a multi-vendor environment as other segments, though many have established partnerships with vendors who offer complementary capabilities. Also, OCR-centric solutions tend to be good when you have large volumes of relatively simple documents that don’t have a lot of variation.

Tip: If you are using one of these vendors’ products already, it makes sense to put their IDP offerings on your shortlist. But the real challenge is to get a sense of whether your problem requires a better OCR solution or a true AI-native IDP solution.

Niche IDP vendors

This group includes vendors that are just getting started or those with a particular focus or disruptive solutions to narrow problems. As these vendors may be smaller, pay particular attention to their ability to deliver enterprise-grade performance that meets your requirements.

Tip: Consider these vendors if you have a niche use case that is a good application fit.

IDP System Integrators

System Integrators may offer IDP as part of their portfolio of solutions. Their IDP offering may be a solution from another IDP vendor or developed in-house. Some of these vendors use Infrrd’s IDP platform, for example.

Tip: SIs can help you develop and deploy business and system-level strategies that leverage the transformative power of IDP.

IDP Technology Suppliers

The major technology platforms offer general-purpose technology components such as OCR and computer vision. These components are not full IDP solutions; we mention them in this post only because some enterprises prefer to develop capabilities internally based on available technology components.

Tip: You will need a strong IT group with a data science team to build out all the functionality needed for a production solution based on these components.

Sources: The IDP vendor list was developed with data from The Everest Group, NelsonHall, and Infrrd’s research. At the time of this post, neither Gartner nor Forrester had published research covering the IDP market landscape.

How should you navigate the IDP landscape?

Our experience is that most enterprises run this gauntlet more than once. You will likely define and redefine requirements as you better understand vendor capabilities and the scope of your own business process challenges.

One thing we have learned from conversations and deployments with many enterprises is that IDP is clearly a disruptive innovation. Many of our enterprise customers had expectations anchored by years of experience with OCR. They were surprised to learn that a true IDP solution can deliver automation they once thought was impossible. So if you haven’t explored this exciting new vendor landscape, you might be surprised too.

Tip: Define your requirements using your most complex use case. If IDP can solve the most complex challenge, then the rest of your use cases are easy peasy lemon squeezy. You end up with one platform that does it all instead of multiple OCR or specialized solutions that become a challenge to maintain and optimize.


Who Are The Top Intelligent Document Processing (IDP) Vendors? was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

How to ace A/B Testing Data Science Interviews

Originally from KDnuggets https://ift.tt/3sCsRvv

Top 10 Must-Know Machine Learning Algorithms for Data Scientists Part 1

Originally from KDnuggets https://ift.tt/3elDP3u