Backpropagation is used to update the weights of a neural network.

Who Invented Backpropagation?

Paul John Werbos is an American social scientist and machine learning pioneer. He is best known for his 1974 dissertation, which first described the process of training artificial neural networks through backpropagation of errors. He was also a pioneer of recurrent neural networks. (Source: Wikipedia)

Let us consider a simple example with inputs x1 = 2 and x2 = 3 and a target y = 1, and work through backpropagation for it from scratch.

Here, we can see that forward propagation has been carried out and has given us an error of 0.327.
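To make the forward pass concrete, here is a minimal sketch in Python. Only x1, x2, y and w6 = 0.15 come from the text. The values of w1 to w5, the wiring (h1 from w1 and w2, h2 from w3 and w4), and the halved squared error are assumptions, chosen because they reproduce the stated error of 0.327; the network in the figures may differ.

```python
# Inputs and target from the text
x1, x2, y = 2.0, 3.0, 1.0

# w6 = 0.15 is from the text. All other weights are ASSUMED values,
# picked so that the error comes out at the stated 0.327.
w1, w2, w3, w4 = 0.11, 0.21, 0.12, 0.08  # input -> hidden (assumed)
w5, w6 = 0.14, 0.15                      # hidden -> output (w5 assumed)

# Forward pass: no bias and no activation function
h1 = w1 * x1 + w2 * x2
h2 = w3 * x1 + w4 * x2
pred = w5 * h1 + w6 * h2

# Halved squared error (assumed form); its derivative is (pred - y)
error = 0.5 * (pred - y) ** 2

print(f"h1={h1:.2f} h2={h2:.2f} pred={pred:.3f} error={error:.3f}")
# prints: h1=0.85 h2=0.48 pred=0.191 error=0.327
```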

We have to reduce that error, so we use the backpropagation formula.

We are going to update the weight w6, which connects h2 to the output node.

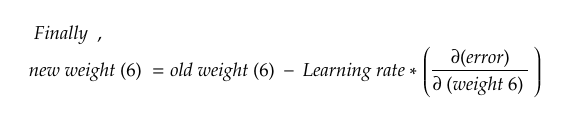

For the backpropagation formula we set the learning rate to 0.05, and the old value of w6 is 0.15.

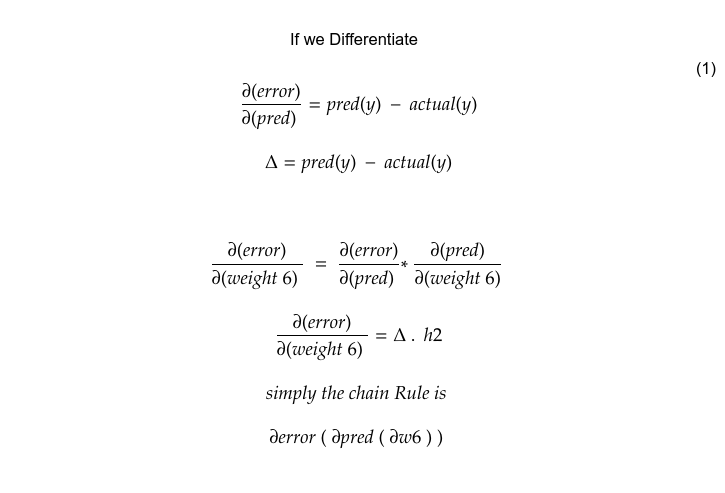

But first we have to find the derivative of the error with respect to the weight.

The error formula does not contain the weight directly, but the prediction equation does.

This is where the chain rule comes into play: we differentiate the error with respect to the prediction, and the prediction with respect to w6, then multiply the two. When differentiating the prediction with respect to w6, the power of w6 is 1, so it becomes w6^(1-1) = 1, the terms that do not contain w6 become zero, and we are left with h2.

So for d(pred)/d(w6) we get h2.
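Written out, the chain rule step looks roughly like this. The symbol E for the error and the form of the prediction equation (a weighted sum of h1 and h2 with weights w5 and w6) are assumptions based on the description above:

$$
\frac{\partial E}{\partial w_6}
= \frac{\partial E}{\partial \text{pred}} \cdot \frac{\partial \text{pred}}{\partial w_6},
\qquad
\text{pred} = w_5 h_1 + w_6 h_2
\;\Rightarrow\;
\frac{\partial \text{pred}}{\partial w_6} = 0 + 1 \cdot w_6^{\,1-1} h_2 = h_2
$$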

Solving the other factor in the same way, we get

d(error)/d(pred) = pred(y) - actual(y)
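This result holds if the error is the halved squared error, 0.5 * (pred - actual)^2, which is an assumption here since the error formula itself is only shown in the figure. A quick way to convince yourself is to compare the formula with a numerical derivative. The value pred = 0.191 is illustrative:

```python
def error(pred, actual):
    """Halved squared error (assumed form of the error function)."""
    return 0.5 * (pred - actual) ** 2


def d_error_d_pred(pred, actual):
    """Analytic derivative of the error with respect to the prediction."""
    return pred - actual


pred, actual = 0.191, 1.0  # pred is an illustrative value
eps = 1e-6

# Central finite difference: (E(p + eps) - E(p - eps)) / (2 * eps)
numeric = (error(pred + eps, actual) - error(pred - eps, actual)) / (2 * eps)
analytic = d_error_d_pred(pred, actual)

print(f"analytic={analytic:.6f} numeric={numeric:.6f}")
```

Both numbers should agree to several decimal places, and both are negative because the prediction is below the target.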

The more equations that sit between the error and a weight, the longer the chain of derivatives we have to solve.

We now have all the values we need to put into the backpropagation formula.

After the update, w6 becomes about 0.17. We can find the new w5 in the same way.
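The update itself can be sketched in a few lines. The learning rate, the target and w6 come from the text; w5, h1 and h2 are assumed values that are consistent with the stated error of 0.327 and may not match the figure exactly:

```python
learning_rate = 0.05
y = 1.0

w5, w6 = 0.14, 0.15  # w6 from the text, w5 assumed
h1, h2 = 0.85, 0.48  # assumed hidden node values

pred = w5 * h1 + w6 * h2

# Chain rule: d(error)/d(w) = d(error)/d(pred) * d(pred)/d(w)
d_error_d_pred = pred - y
grad_w6 = d_error_d_pred * h2  # d(pred)/d(w6) = h2
grad_w5 = d_error_d_pred * h1  # d(pred)/d(w5) = h1

# Gradient descent update: new = old - learning_rate * gradient
new_w6 = w6 - learning_rate * grad_w6
new_w5 = w5 - learning_rate * grad_w5

print(f"new w6 = {new_w6:.2f}, new w5 = {new_w5:.2f}")
# prints: new w6 = 0.17, new w5 = 0.17
```

The gradients are negative because the prediction is too low, so subtracting them makes both weights larger.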

But for w1 and the remaining weights we need more derivatives, because we have to go one layer deeper to reach the equation that contains the weight.
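As a rough sketch of that longer chain for w1: the error depends on pred, pred depends on h1, and h1 depends on w1, so three factors are multiplied. The wiring (w1 connects x1 to h1, w5 connects h1 to the output) and all weights except w6 are assumptions:

```python
x1, x2, y = 2.0, 3.0, 1.0
learning_rate = 0.05

# Only w6 comes from the text; the other weights are assumed values.
w1, w2, w3, w4, w5, w6 = 0.11, 0.21, 0.12, 0.08, 0.14, 0.15


def error_for(w1_value):
    """Forward pass with a given w1, returning the halved squared error."""
    h1 = w1_value * x1 + w2 * x2
    h2 = w3 * x1 + w4 * x2
    pred = w5 * h1 + w6 * h2
    return 0.5 * (pred - y) ** 2


h1 = w1 * x1 + w2 * x2
h2 = w3 * x1 + w4 * x2
pred = w5 * h1 + w6 * h2

# d(error)/d(w1) = d(error)/d(pred) * d(pred)/d(h1) * d(h1)/d(w1)
grad_w1 = (pred - y) * w5 * x1

# Numerical check with a central finite difference
eps = 1e-6
numeric = (error_for(w1 + eps) - error_for(w1 - eps)) / (2 * eps)

new_w1 = w1 - learning_rate * grad_w1
print(f"grad_w1={grad_w1:.4f} numeric={numeric:.4f} new_w1={new_w1:.4f}")
```

Note that the gradient uses the old value of w5, not the updated one: all gradients in one backward pass are computed from the weights used in the forward pass.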


For simplicity I have not used bias values or an activation function. If an activation function is added, we have to differentiate that too, and the chain grows by one more factor, like this:

d(error)/d(w) = d(error)/d(pred) × d(pred)/d(h) × d(h)/d(z) × d(z)/d(w), where h = sigmoid(z)
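Here is a small sketch of what that extra factor looks like with a sigmoid. It assumes the sigmoid is applied to the hidden nodes only and the output stays linear, and it reuses assumed weight values (only w6 is from the text). The derivative of sigmoid is h * (1 - h), which becomes the additional link in the chain:

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


x1, x2, y = 2.0, 3.0, 1.0
# Only w6 comes from the text; the other weights are assumed values.
w1, w2, w3, w4, w5, w6 = 0.11, 0.21, 0.12, 0.08, 0.14, 0.15


def error_for(w1_value):
    """Forward pass with sigmoid hidden nodes, returning the error."""
    h1 = sigmoid(w1_value * x1 + w2 * x2)
    h2 = sigmoid(w3 * x1 + w4 * x2)
    pred = w5 * h1 + w6 * h2
    return 0.5 * (pred - y) ** 2


h1 = sigmoid(w1 * x1 + w2 * x2)
h2 = sigmoid(w3 * x1 + w4 * x2)
pred = w5 * h1 + w6 * h2

# error -> pred -> h1 -> z1 -> w1, one factor per link
grad_w1 = (pred - y) * w5 * (h1 * (1 - h1)) * x1

# Numerical check with a central finite difference
eps = 1e-6
numeric = (error_for(w1 + eps) - error_for(w1 - eps)) / (2 * eps)
print(f"analytic={grad_w1:.6f} numeric={numeric:.6f}")
```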

This is how a single backpropagation step goes. After it, the network runs forward again, calculates the error, and updates the weights once more. Simple.
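The repeated forward, error, update cycle can be sketched as a short loop. This is a minimal illustration under the same assumptions as before (no bias, no activation, halved squared error, and assumed starting values for every weight except w6), not a general training routine:

```python
x1, x2, y = 2.0, 3.0, 1.0
learning_rate = 0.05

# Only w6 = 0.15 comes from the text; the rest are assumed values.
w = {"w1": 0.11, "w2": 0.21, "w3": 0.12, "w4": 0.08, "w5": 0.14, "w6": 0.15}


def forward(w):
    h1 = w["w1"] * x1 + w["w2"] * x2
    h2 = w["w3"] * x1 + w["w4"] * x2
    pred = w["w5"] * h1 + w["w6"] * h2
    return h1, h2, pred


errors = []
for step in range(20):
    # Forward pass and error
    h1, h2, pred = forward(w)
    errors.append(0.5 * (pred - y) ** 2)

    # Backward pass: every gradient uses the weights from this forward pass
    delta = pred - y  # d(error)/d(pred)
    grads = {
        "w5": delta * h1,
        "w6": delta * h2,
        "w1": delta * w["w5"] * x1,
        "w2": delta * w["w5"] * x2,
        "w3": delta * w["w6"] * x1,
        "w4": delta * w["w6"] * x2,
    }

    # Update all weights together
    for name in w:
        w[name] -= learning_rate * grads[name]

print(f"first error={errors[0]:.3f}, last error={errors[-1]:.6f}")
```

The first error matches the 0.327 from the forward pass, and it should shrink with each round as the prediction moves toward the target.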


Chain Rule for Backpropagation was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Via https://becominghuman.ai/chain-rule-for-backpropagation-55c334a743a2?source=rss—-5e5bef33608a—4

source https://365datascience.weebly.com/the-best-data-science-blog-2020/chain-rule-for-backpropagation