Optimization lies at the heart of machine learning.

Gradient descent is one of the most popular algorithms to perform optimization and by far the most common way to optimize neural networks. At the same time, every state-of-the-art Deep Learning library contains implementations of various algorithms to optimize gradient descent. These algorithms, however, are often used as black-box optimizers.

By the end of this blog post, you’ll have a comprehensive understanding of how gradient descent works at its core. We will build intuition by using gradient descent to solve a simple task: balancing a rod on a finger. I know this might sound too obvious and simple, but trust me, implementing gradient descent becomes a piece of cake once you understand the underlying concept.

Before that, let’s get familiar with what we mean by optimization. Optimization is the act of making the best or most effective use of a situation or resource. In the real world, whether we are running a business or solving a particular problem, we are limited by the resources available to us, and that is where optimization comes in. Everyone wants to solve a problem in such a way that the outcome is the best of all possible solutions. Every optimization problem has a goal defined beforehand: either to maximize (increase) or to minimize (decrease) something. For example: choosing the location of a warehouse to minimize shipment time, designing a bridge that can carry the maximum possible load for a given cost, designing an airplane wing to minimize weight while maintaining strength, or designing a load balancer to minimize server load during heavy traffic.

Every concept comes with its underlying components, and optimizers are no exception. Here are the main ones.

Components of an Optimizer:

- Objective function: The value you are trying to optimize.

- Decision Variables: The values the optimizer is allowed to tune.

- Constraints: The boundaries the optimizer cannot cross, or the conditions any solution must satisfy.

- Gradients: The slope of the Objective function with respect to the Decision variables (we’ll discuss this in detail later).

In the examples above, the shipment time, the load the bridge can carry, the weight of the airplane wing, and the load on the servers are the Objective functions, whereas the location of the warehouse, the structure of the bridge, the shape of the wing, and the number of threads allotted to a server are the Decision variables. The budget for building the bridge, the strength the airplane wing must maintain, etc. are the Constraints.
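The four components can be made concrete with a small sketch. The Python below is only an illustration built on the warehouse example, and everything specific in it is a hypothetical assumption: the customer positions in `CUSTOMERS`, the road bounds `ROAD_START`/`ROAD_END`, and the use of total squared distance as a stand-in for shipment time. The gradient is estimated numerically with a central finite difference rather than derived by hand.

```python
# Hypothetical warehouse example: customers sit at these positions (km) along a straight road.
CUSTOMERS = [1.0, 4.0, 7.0]
ROAD_START, ROAD_END = 0.0, 10.0  # constraint: the warehouse must be on this stretch of road


def objective(x: float) -> float:
    """Objective function: total squared distance to customers (a stand-in for shipment time)."""
    return sum((x - c) ** 2 for c in CUSTOMERS)


def is_feasible(x: float) -> bool:
    """Constraint: the decision variable must stay within the road segment."""
    return ROAD_START <= x <= ROAD_END


def gradient(x: float, h: float = 1e-6) -> float:
    """Gradient: slope of the objective at x, estimated with a central finite difference."""
    return (objective(x + h) - objective(x - h)) / (2 * h)


x = 2.0  # decision variable: one candidate warehouse location
print(is_feasible(x), objective(x), round(gradient(x), 3))  # True 30.0 -12.0
```

A negative gradient at x = 2.0 says the objective falls as x moves to the right; for this toy objective the minimum sits at the mean customer position, x = 4.0, where the estimated gradient is approximately zero.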

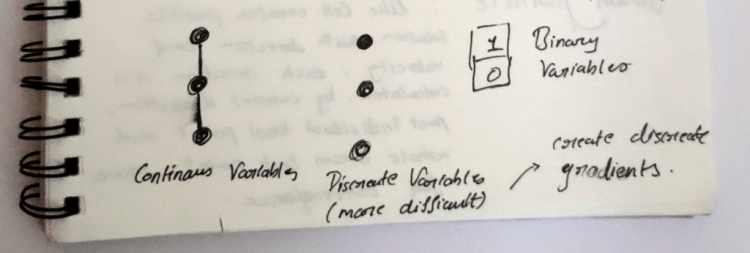

Note: the decision variables used in optimization can be continuous, discrete, or binary. We tend to avoid discrete variables because the objective then changes in jumps rather than smoothly, which leaves no useful gradient to follow and makes the problem more difficult to optimize.
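To see why discrete variables are awkward, here is a hypothetical sketch in which the decision variable is a number of server threads. The traffic figure (100 units) and the helper names `load_per_thread` and `finite_diff` are made up for illustration. Because only whole threads exist, the objective is a step function: a small nudge to the input usually changes nothing, and near a step boundary it changes abruptly.

```python
import math


def load_per_thread(threads: float) -> float:
    """Hypothetical objective: 100 units of traffic split evenly across whole threads."""
    n = math.floor(threads)  # only whole threads exist, so the input is effectively discrete
    return 100.0 / max(n, 1)


def finite_diff(f, x: float, h: float = 1e-4) -> float:
    """Central finite-difference estimate of the slope of f at x."""
    return (f(x + h) - f(x - h)) / (2 * h)


print(finite_diff(load_per_thread, 3.5))  # 0.0: on a flat step there is no slope to follow
print(finite_diff(load_per_thread, 4.0))  # a very large negative number: h straddles a jump
```

Neither number tells a gradient-based optimizer anything useful about which direction improves the objective, which is the difficulty the note describes.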

Back to our rod-balancing problem: if we break it down into components, we get:

Objective function: maximize the time the rod stays on the finger.

Decision Variable: the point at which we try to balance the rod. We’ll denote it as ‘x’.

Now we can state this problem as

$$\max_{x}\; f(x)$$

where

f(x): balance the rod at point x and return the time (in seconds) it stays on the finger.
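Since we can’t actually balance a rod in code, here is a hypothetical stand-in for f(x) to experiment with. It assumes a uniform rod 1 m long, so the best balancing point is its midpoint, and a concave parabola peaking at an invented 10 seconds. `ROD_LENGTH`, `PEAK_TIME`, and `balance_time` are illustrative names, and the numbers are not measurements.

```python
ROD_LENGTH = 1.0  # hypothetical rod length in metres; x ranges over [0, ROD_LENGTH]
PEAK_TIME = 10.0  # hypothetical best-case balance time in seconds


def balance_time(x: float) -> float:
    """Toy stand-in for f(x): seconds the rod stays up when balanced at point x."""
    if not 0.0 <= x <= ROD_LENGTH:
        raise ValueError("x must lie on the rod")
    centre = ROD_LENGTH / 2  # assume a uniform rod, so its centre of mass is the midpoint
    # Concave parabola: PEAK_TIME at the centre, falling to 0 at either end of the rod.
    return PEAK_TIME * (1 - ((x - centre) / centre) ** 2)


for x in (0.0, 0.25, 0.5):
    print(x, balance_time(x))  # 0.0 0.0, then 0.25 7.5, then 0.5 10.0
```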

An Objective function can always be pictured as some kind of curve (or, with several decision variables, a surface) whose slope varies with the value(s) of the Decision variable(s). The goal of the optimizer is to descend to the bottom of the curve for a minimization problem, or to climb to the top of the curve for a maximization problem. When the optimizer reaches its goal, the value(s) of the decision variable(s) at that point are taken as the optimal value(s); in machine learning, these are the values the model then uses for further predictions.
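Minimization and maximization are two sides of the same coin: flipping the sign of the objective turns one into the other, so an optimizer written to descend a curve can also be used to climb one. For the rod problem, where f(x) is the balance time, this standard identity reads:

$$\arg\max_{x} f(x) = \arg\min_{x} \bigl(-f(x)\bigr)$$

Many optimization libraries are written as minimizers, which is why maximization problems are usually handed to them as the minimization of -f(x).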

In reality, we don’t know what the curve looks like. For our balancing problem, we as humans can use our intuition to sketch the curve (concave in our case) and select the optimal value, but as the number of decision variables increases, the curve becomes more complex and humans can no longer derive the solution by inspection. For a machine, the curve simply doesn’t exist until it starts exploring.

Types of Optimizers:

- Gradient Free Algorithms.

- Gradient Based Algorithms.

Gradient-Free Algorithms don’t require computing gradients. Instead, they rely on randomness, mutation, and fitness or scoring functions, which generally makes them slower than gradient-based algorithms. The Decision Variables in these algorithms can be discrete, and the objective can be discontinuous or noisy. Here is a list of gradient-free algorithms:

- Exhaustive Search

- Genetic Algorithm

- Particle Swarm

- Simulated Annealing

(We won’t discuss gradient-free algorithms here, as they are somewhat out of scope for this blog post, but I’d recommend exploring them on your own: they’re quite interesting, and several reinforcement learning approaches draw on them.)

Let’s try to solve our rod-balancing problem using Exhaustive Search (or exhaustive exploration), which simply means trying all possible solutions and picking the best one at the end of the experiment.

Solving Rod Balancing problem using Exhaustive Search

In the video above, we try almost every possible point (x) on the rod and plot the balance time on the y-axis for each point tested. Eventually, we select the point on the rod that gives the maximum balance time.

In the image above, Fig. (A) shows the initial state, when we aren’t yet aware of the curve. Fig. (B) shows some progress, and we start to see what the curve looks like. After enough testing, we get the complete curve shown in Fig. (C); at this point we select the point on the x-axis where the curve peaks, i.e., the point at which we should balance the rod for maximum success.
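The exhaustive search described above can be sketched in a few lines of Python. This is a minimal illustration, not the procedure used in the video: it assumes a hypothetical toy objective (a uniform 1 m rod with an invented 10-second peak at its midpoint) and evaluates it at evenly spaced points. The `history` list plays the role of the plot in Fig. (A) to (C): the more points we record, the more of the curve we see.

```python
def balance_time(x: float) -> float:
    """Hypothetical toy objective: seconds a uniform 1 m rod stays up when held at x."""
    return 10.0 * (1 - ((x - 0.5) / 0.5) ** 2)


def exhaustive_search(f, lo: float, hi: float, num_points: int):
    """Try f at num_points evenly spaced values in [lo, hi] (num_points >= 2) and keep the best."""
    step = (hi - lo) / (num_points - 1)
    best_x, best_val = None, float("-inf")
    history = []  # every (x, f(x)) tried; plotting it traces out the curve
    for i in range(num_points):
        x = lo + i * step
        val = f(x)
        history.append((x, val))
        if val > best_val:  # maximization: keep the longest balance time seen so far
            best_x, best_val = x, val
    return best_x, best_val, history


best_x, best_val, history = exhaustive_search(balance_time, 0.0, 1.0, num_points=101)
print(f"best x = {best_x:.2f}, time = {best_val:.2f} s after {len(history)} trials")
# best x = 0.50, time = 10.00 s after 101 trials
```

Note that every one of the 101 trials is spent whether or not it lands anywhere near the peak; that is exactly the cost the next paragraph points out.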

Exhaustive Search does provide a solution, but it takes a long time to get there. In Machine Learning, the time required to train a model is a critical factor: a poorly designed model may take months to converge, whereas the right choice of algorithm results in much faster training.
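To put a rough number on “a long time”, suppose (an assumption for illustration) that each decision variable is sampled at 100 evenly spaced values. A grid search must then try every combination, so the number of evaluations grows exponentially with the number of decision variables. The helper name `grid_evaluations` is hypothetical.

```python
def grid_evaluations(points_per_variable: int, num_variables: int) -> int:
    """Number of objective evaluations an exhaustive grid search needs."""
    return points_per_variable ** num_variables


for d in (1, 2, 5, 10):
    print(d, grid_evaluations(100, d))
# 1 100
# 2 10000
# 5 10000000000
# 10 100000000000000000000
```

With the rod, every evaluation is a real balancing attempt lasting seconds, so even two decision variables at this resolution would mean 10,000 trials.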

Now that we know why we shouldn’t always go for this brute-force approach, we could try other gradient-free methods on our problem, but that would be overkill. In part 2 of this series, we’ll talk about gradient-based algorithms and solve the rod-balancing problem using gradient descent. Here is the link to the second part:

Introduction to Optimization and Gradient Descent Algorithm [Part-2].

Do leave a clap if you liked this post. See you in part 2. Cheers!!

Introduction to Optimization and Gradient Descent Algorithm [Part-1]. was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.