ResNet : Convolution Neural Network

ResNet, short for residual neural network, adds residual learning to the traditional convolutional neural network. This addresses the vanishing-gradient problem and the accuracy degradation (measured on the training set) that appear in very deep networks, so networks can be made deeper and deeper while keeping both accuracy and speed under control.

Problems caused by increasing depth :

- The first problem brought by increasing depth is exploding or vanishing gradients. As the number of layers grows, the gradient propagated backward through the network is the product of many per-layer factors, so it becomes unstable and grows either very large or very small. Of the two, vanishing gradients are the more common problem: the gradient reaching the early layers becomes so small that their weights barely change during training.

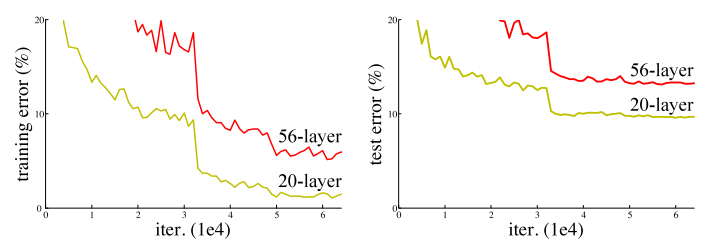

- Another problem with increasing depth is network degradation: as the depth increases, the network performs worse and worse, which shows up directly as a drop in accuracy on the training set. Because the training accuracy itself falls, this is not overfitting. The residual network paper solves this problem, and once it was solved, the depth of trainable networks grew from a few dozen layers to more than a hundred (the paper also trains a 1202-layer network on CIFAR-10).
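To see why the first problem gets worse with depth, here is a toy scalar model (an assumption for illustration, not how a real network computes its gradients): by the chain rule, the gradient that reaches the first layer is the product of every layer's local derivative. The helper name `chained_gradient` is hypothetical. If every factor is below 1 the product collapses toward zero, and if every factor is above 1 it blows up.

```python
import math


def chained_gradient(local_factors):
    """Toy chain rule through a plain stack of layers: the gradient reaching
    the first layer is the product of every layer's local derivative."""
    return math.prod(local_factors)


for depth in (10, 20, 50, 100):
    shrinking = chained_gradient([0.5] * depth)  # each layer halves the gradient
    growing = chained_gradient([1.5] * depth)    # each layer scales it by 1.5
    print(f"{depth:>3} layers: x0.5 -> {shrinking:.3e}, x1.5 -> {growing:.3e}")
```

At 100 layers the first product is below 1e-30 and the second is above 1e17, even though each individual factor is harmless on its own.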


From the figure above we can conclude that a network of about 20 layers trains fine. But if we keep adding layers, accuracy starts to decrease instead of increasing. ResNet was introduced to solve exactly this problem.

ResNet :

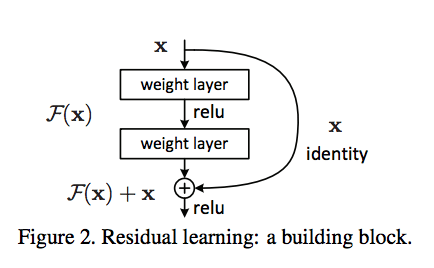

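The block contains two branches: (i) the identity branch, which is the input x itself, carried forward unchanged by a shortcut connection; and (ii) F(x), the stacked weight layers that learn what is called the residual mapping. The two branches are added element-wise, so the block outputs F(x) + x, followed by a ReLU.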
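In the notation of the original ResNet paper (biases omitted), a block with two weight layers can be written as below, where σ is ReLU and H(x) is the mapping, before the final ReLU, that plain stacked layers would otherwise have to learn directly:

```latex
\mathcal{F}(\mathbf{x}) = W_2\,\sigma(W_1\mathbf{x}), \qquad
\mathbf{y} = \sigma\big(\mathcal{F}(\mathbf{x}) + \mathbf{x}\big)

\mathcal{H}(\mathbf{x}) = \mathcal{F}(\mathbf{x}) + \mathbf{x}
\quad\Longrightarrow\quad
\mathcal{F}(\mathbf{x}) = \mathcal{H}(\mathbf{x}) - \mathbf{x}
```

So the weight layers only have to learn the difference between the desired mapping and the input. If the best mapping is close to the identity, they just need to push F(x) toward zero.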

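Take x as the input. If the layers in the residual branch have nothing useful to add, their output can be driven toward zero, and the ReLU activations inside the branch already stop negative values from propagating further. In that case the block effectively skips F(x) and passes the input x forward through the identity branch.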
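The "skip" behaviour can be sketched in a few lines of plain Python. This is a minimal sketch under stated assumptions: the dense weight layers `w1` and `w2` stand in for the 3×3 convolutions of a real ResNet, batch normalization and biases are left out, and all helper names are hypothetical. With every residual weight at zero, F(x) is zero and the block returns its input unchanged.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def relu(v: Vector) -> Vector:
    """Element-wise ReLU: negative values become 0."""
    return [max(0.0, a) for a in v]


def matvec(w: Matrix, v: Vector) -> Vector:
    """Dense weight layer without bias (stand-in for a convolution)."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def residual_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """y = ReLU(F(x) + x), with residual branch F(x) = W2 * ReLU(W1 * x)."""
    f = matvec(w2, relu(matvec(w1, x)))               # residual branch F(x)
    return relu([fi + xi for fi, xi in zip(f, x)])    # add identity branch, then ReLU


def zeros(n: int) -> Matrix:
    """n x n matrix of zero weights."""
    return [[0.0] * n for _ in range(n)]


# Block inputs in a ResNet are usually outputs of an earlier ReLU, so non-negative.
x = [0.5, 2.0, 1.5]
print(residual_block(x, zeros(3), zeros(3)))  # prints [0.5, 2.0, 1.5]
```

Because the input here is non-negative, the final ReLU leaves it untouched, and the whole block behaves like an identity mapping without any layer having to learn one.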

Why do we need the identity block if ReLU already chops off all negative values?

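A plain network applies image → convolution → ReLU at every layer, so every input has to go through the convolution first, and ReLU only discards negative values after that computation has already been done. ReLU can zero out a value, but it cannot pass the input through unchanged. The identity shortcut can: when a layer has nothing useful to add, its residual branch only needs to output roughly zero and x still reaches the next block intact. The shortcut itself is just an element-wise addition, so it adds no parameters and almost no computation.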
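Another way to see why the shortcut matters even though ReLU already discards negative values is to look at gradients. In the simplified scalar model below (assumptions: one number per layer, the ReLU after each addition is ignored, and the helper names are hypothetical), a plain stack multiplies the local derivatives of its layers, while a residual stack x_{l+1} = x_l + F_l(x_l) multiplies factors of 1 + F_l'. When a branch is nearly silent, or switched off entirely because ReLU outputs zero, the plain product vanishes but the residual product stays close to 1.

```python
import math


def plain_gradient(local_grads):
    # Plain stack x_{l+1} = F_l(x_l): dx_L/dx_0 is the product of the F_l'.
    return math.prod(local_grads)


def residual_gradient(local_grads):
    # Residual stack x_{l+1} = x_l + F_l(x_l): each layer contributes (1 + F_l').
    return math.prod(1.0 + g for g in local_grads)


# 30 layers whose branches are nearly silent (small local derivatives).
small = [0.01] * 30
print(plain_gradient(small), residual_gradient(small))

# Branches switched off entirely (ReLU outputs 0, so the local derivative is 0).
dead = [0.0] * 30
print(plain_gradient(dead), residual_gradient(dead))
```

The identity term contributes the constant 1 in each factor, which is why a gradient can always flow back through the shortcut.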

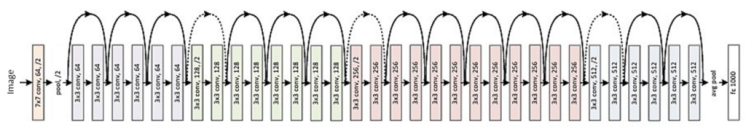

This amounts to fewer parameters for the same number of layers, so the design can be extended to deeper models. The authors therefore proposed ResNets with 50, 101 and 152 layers. These deeper models showed no degradation problem, their error rates dropped substantially, and their computational cost stayed comparatively low: even the 152-layer ResNet needs fewer FLOPs than VGG-16 or VGG-19.
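The ResNet paper builds its 50-, 101- and 152-layer models from "bottleneck" blocks: a 1×1 convolution that reduces 256 channels to 64, a 3×3 convolution at 64 channels, and a 1×1 convolution that restores 256 channels. The arithmetic below is a sketch that counts only convolution weights (biases and batch normalization ignored) and compares against a hypothetical block of two 3×3 convolutions kept at 256 channels; `conv_weights` is an illustrative helper, not a library function.

```python
def conv_weights(kernel: int, c_in: int, c_out: int) -> int:
    """Number of weights in a kernel x kernel convolution (biases ignored)."""
    return kernel * kernel * c_in * c_out


# Bottleneck block on a 256-channel input: 1x1 reduce -> 3x3 -> 1x1 restore.
bottleneck = (
    conv_weights(1, 256, 64)
    + conv_weights(3, 64, 64)
    + conv_weights(1, 64, 256)
)

# Hypothetical comparison: two 3x3 convolutions that stay at 256 channels.
two_3x3_at_256 = 2 * conv_weights(3, 256, 256)

# The identity shortcut around either block has no weights at all.
identity_shortcut = 0

print(bottleneck, two_3x3_at_256, round(two_3x3_at_256 / bottleneck, 1))  # prints 69632 1179648 16.9
```

The bottleneck has three layers instead of two yet roughly 17 times fewer weights than the wide alternative, which is what makes stacking many more of them affordable.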


ResNet : Convolution Neural Network was originally published in Becoming Human: Artificial Intelligence Magazine on Medium.

Via https://becominghuman.ai/resnet-convolution-neural-network-e10921245d3d?source=rss—-5e5bef33608a—4
