Basic Example of Neural Style Transfer

This post is a practical example of Neural Style Transfer, the technique introduced in the paper A Neural Algorithm of Artistic Style (Gatys et al.). For this example, we will use the pretrained Arbitrary Image Stylization module, which is available on TensorFlow Hub. We will work with Python and TensorFlow 2.x.

Neural Style Transfer

Neural style transfer is an optimization technique that takes two images, a content image and a style reference image (such as an artwork by a famous painter), and blends them together so that the output image looks like the content image, but “painted” in the style of the style reference image.

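This is implemented by optimizing the output image to match the content statistics of the content image and the style statistics of the style reference image. These statistics are extracted from the images using a convolutional network. In the original paper this is a pretrained VGG-19: content is represented by the feature maps of a deeper layer, and style by the correlations (Gram matrices) between feature maps at several layers.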
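To make “content statistics” and “style statistics” more concrete, here is a minimal NumPy sketch of the kind of losses used by Gatys et al. It assumes the feature maps have already been extracted by a convolutional network; the random arrays below are stand-ins for real activations, and the function names, the normalization constant and the weights `alpha`/`beta` are illustrative choices, not any library’s API. Content is compared directly on the feature maps, while style is compared through Gram matrices, which record how strongly pairs of channels fire together regardless of where in the image that happens.

```python
import numpy as np


def gram_matrix(features):
    """Channel-by-channel correlations of a feature map with shape (H, W, C)."""
    h, w, c = features.shape
    flat = features.reshape(h * w, c)   # one row per spatial position
    # (C, C) matrix; dividing by the number of positions is one common
    # normalization choice (the paper uses a different constant).
    return flat.T @ flat / (h * w)


def content_loss(gen_features, content_features):
    # Mean squared difference between the raw feature maps.
    return np.mean((gen_features - content_features) ** 2)


def style_loss(gen_features, style_features):
    # Mean squared difference between Gram matrices; spatial layout is ignored,
    # so the two feature maps may even have different heights and widths.
    return np.mean((gram_matrix(gen_features) - gram_matrix(style_features)) ** 2)


def total_loss(gen, content, style, alpha=1.0, beta=1e-2):
    # Hypothetical weights: in practice alpha/beta are tuned per image pair.
    return alpha * content_loss(gen, content) + beta * style_loss(gen, style)


rng = np.random.default_rng(0)
content_feats = rng.random((8, 8, 4))    # stand-in for conv activations of the content image
style_feats = rng.random((16, 16, 4))    # stand-in for conv activations of the style image
generated = content_feats.copy()         # start the "output image" from the content

print(gram_matrix(style_feats).shape)            # (4, 4)
print(content_loss(generated, content_feats))    # 0.0
print(total_loss(generated, content_feats, style_feats))
```

In the real method these losses are computed on several layers of the network and minimized with respect to the pixels of the output image by gradient descent.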

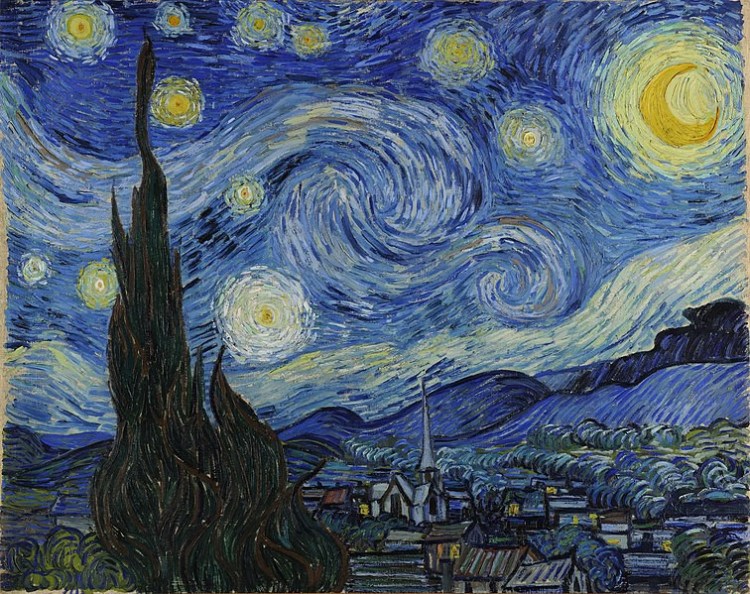

For example, let’s take an image of the Golden Gate Bridge and The Starry Night by Van Gogh.

What would it look like if Van Gogh had painted the Golden Gate Bridge in this style?


Example of Neural Style Transfer Using TensorFlow

Let’s start coding: we import the libraries, define a few helper functions, and download the content and style images.

```python
# Colab-only magic that older Colab runtimes used to select TensorFlow 2.x.
# Outside Colab, simply install TensorFlow 2.x and leave this line commented out.
# %tensorflow_version 2.x

import tensorflow as tf

print(tf.__version__)

import IPython.display as display

import matplotlib.pyplot as plt
import matplotlib as mpl

# Large figures without grid lines, which suits image display.
mpl.rcParams['figure.figsize'] = (20, 20)
mpl.rcParams['axes.grid'] = False

import numpy as np
import PIL.Image
import time
import functools
```

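```python
def tensor_to_image(tensor):
    # Convert a float tensor with values in [0, 1] into an 8-bit PIL image.
    tensor = tensor * 255
    tensor = np.array(tensor, dtype=np.uint8)
    if np.ndim(tensor) > 3:
        # Drop the batch dimension; only a batch of one image is supported.
        assert tensor.shape[0] == 1
        tensor = tensor[0]
    return PIL.Image.fromarray(tensor)
```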
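`tensor_to_image` assumes that every pixel value already lies in [0, 1]. NumPy does not guarantee that out-of-range floats saturate at 0 and 255 when cast to `np.uint8`, so a slightly more defensive variant clips first. This is a minimal sketch; `to_uint8` is a hypothetical helper name, not part of the original code, and its result can be passed to `PIL.Image.fromarray` exactly as in `tensor_to_image`.

```python
import numpy as np


def to_uint8(image):
    """Convert a float array in [0, 1] (optionally with a batch axis of size 1) to uint8."""
    arr = np.asarray(image, dtype=np.float32)
    if arr.ndim > 3:
        # Same batch handling as tensor_to_image, but with an explicit error.
        if arr.shape[0] != 1:
            raise ValueError("expected a batch of exactly one image")
        arr = arr[0]
    # Clip before casting so out-of-range values saturate at 0 and 255.
    # astype truncates toward zero, e.g. 127.5 becomes 127.
    return np.clip(arr * 255.0, 0, 255).astype(np.uint8)


# A 1x2 RGB "image" with a batch axis, including two out-of-range values.
batch = np.array([[[[0.0, 0.5, 1.0], [1.2, -0.1, 0.25]]]], dtype=np.float32)
pixels = to_uint8(batch)
print(pixels.shape)     # (1, 2, 3)
print(pixels.tolist())  # [[[0, 127, 255], [255, 0, 63]]]
```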

```python
# Visualize the input.
# Define a function to load an image and limit its maximum dimension to 512 pixels.
def load_img(path_to_img):
    max_dim = 512
    img = tf.io.read_file(path_to_img)
    img = tf.image.decode_image(img, channels=3)
    # Convert to float32 with values in [0, 1].
    img = tf.image.convert_image_dtype(img, tf.float32)

    # Scale both sides so that the longer one becomes max_dim (aspect ratio is kept).
    shape = tf.cast(tf.shape(img)[:-1], tf.float32)
    long_dim = max(shape)
    scale = max_dim / long_dim
    new_shape = tf.cast(shape * scale, tf.int32)

    img = tf.image.resize(img, new_shape)
    # Add a batch dimension: [1, height, width, 3].
    img = img[tf.newaxis, :]
    return img
```
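The resizing step in `load_img` preserves the aspect ratio: both sides are multiplied by `max_dim / long_dim`, and `tf.cast(..., tf.int32)` then truncates toward zero. Note that images smaller than 512 pixels are enlarged, not only shrunk. The same arithmetic in plain Python, as a sketch using a hypothetical helper `scaled_shape` and made-up image sizes:

```python
def scaled_shape(height, width, max_dim=512):
    """Mirror load_img's arithmetic: scale so the longer side equals max_dim."""
    long_dim = max(height, width)
    scale = max_dim / long_dim
    # int() truncates toward zero, like tf.cast(..., tf.int32) on positive floats.
    return int(height * scale), int(width * scale)


print(scaled_shape(1200, 1600))  # (384, 512): landscape image, width becomes 512
print(scaled_shape(1000, 757))   # (512, 387): portrait image, height becomes 512
print(scaled_shape(200, 300))    # (341, 512): a small image is enlarged
```

TensorFlow performs the multiplication in float32, so for some sizes its result may differ from this double-precision sketch by one pixel.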

```python
# Create a simple function to display an image.
def imshow(image, title=None):
    # Remove the batch dimension if present.
    if len(image.shape) > 3:
        image = tf.squeeze(image, axis=0)
    plt.imshow(image)
    if title:
        plt.title(title)
```

```python
# Download the images and choose a content image and a style image.
content_path = tf.keras.utils.get_file('Golden_Gate.jpg', 'https://upload.wikimedia.org/wikipedia/commons/0/0c/GoldenGateBridge-001.jpg')
style_path = tf.keras.utils.get_file('Starry_Night.jpg', 'https://upload.wikimedia.org/wikipedia/commons/thumb/e/ea/Van_Gogh_-_Starry_Night_-_Google_Art_Project.jpg/757px-Van_Gogh_-_Starry_Night_-_Google_Art_Project.jpg')

content_image = load_img(content_path)
style_image = load_img(style_path)

# Show the two inputs side by side.
plt.subplot(1, 2, 1)
imshow(content_image, 'Content Image')

plt.subplot(1, 2, 2)
imshow(style_image, 'Style Image')
```

This lets us confirm that the images were downloaded and loaded correctly.

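```python
# Use the pretrained module from TensorFlow Hub.
import tensorflow_hub as hub

hub_module = hub.load('https://tfhub.dev/google/magenta/arbitrary-image-stylization-v1-256/1')

# Inputs: content and style images as float32 tensors in [0, 1] with shape [1, H, W, 3].
# The module returns a list whose first element is the stylized image.
stylized_image = hub_module(tf.constant(content_image), tf.constant(style_image))[0]
tensor_to_image(stylized_image)
```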

Discussion

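That was a practical example of how easily you can apply Neural Style Transfer and become a “digital artist” of sorts :-). Note that the pretrained module used here does not run the iterative optimization described above: like other modern approaches, it uses a network trained to generate the stylized image directly in a single forward pass (similar in spirit to CycleGAN).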

In this tutorial, we assumed that the reader is familiar with working with images in Python. In the previous post, we explained how to extract text from images, iterate over pixels, find the most dominant color of an image, and blend images.


Basic Example of Neural Style Transfer — Predictive Hacks was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.