If you’re reading this you’ve landed straight in our blog post series about machine learning projects. We know that the implementation of these projects is still a big mystery for many of our customers. Therefore, we explain the phases of AI projects in a series of articles.

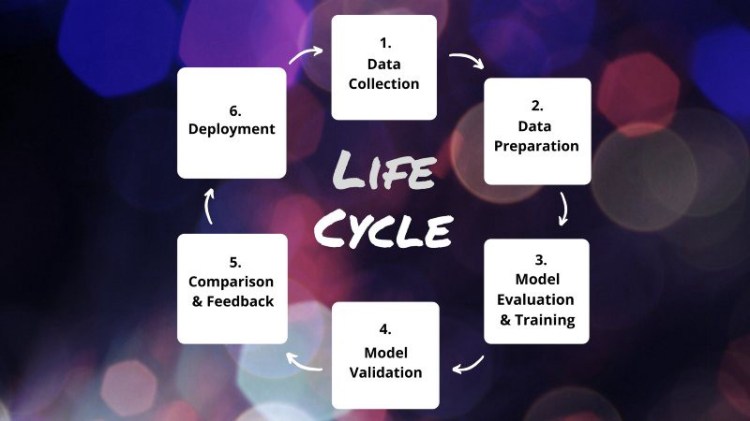

AI projects are usually carried out in a cyclic process. Our previous article dealt with the first two important phases of the cycle, data collection and data preparation. Today, we’re going to dive into the topic of model evaluation, which is a crucial part of phase 3 in our life cycle. Since this is a particularly complex topic, we will dedicate an entire article to it.

Let’s take a look at our example task again, so that we can figure out the process of selecting and evaluating the appropriate model. Our example project is about an umbrella federation for dance using a Digital Asset Management (DAM) system as a central hub for images. The DAM constantly receives images from all federation members. The dance federation uses these images as marketing collateral, but they do not want to use any of them; they only want to use the ones presenting the work of their members in a visually appealing way. The goal for the dance federation is to find aesthetic images. Consequently, our task here is to classify the images as aesthetic or unaesthetic.

Determine Classification Type

What many of our customers do not know: There are various types of classification tasks — from simple object classification to localization and complex classification on pixel level. The individual use case determines which classification type is right for a task:

- Classification of the whole image: Classify the image into one or multiple label classes. The labels may describe objects or other aspects like aesthetic, color mood or technical quality.

- Classification with localization: Classify the image into one or multiple label classes and give rough positions for each class. This is for example useful for placing shop links on product images.

- Object detection: Classification and localization of one or more objects in

an image. The objects and its exact positions as bounding boxes are predicted. - Semantic segmentation: Each pixel of an image is labeled to a corresponding class, for example a medical image where each pixel is labeled to either healthy or diseased tissue.

- Instance segmentation: Classification on pixel level that recognizes

each instance of a class. That means if we have an image with several shoes, each shoe is identified as a separate instance of the class “shoe”.

Trending AI Articles:

Choose the Right Model Complexity

In our example task we simply want to classify an entire image as aesthetic or unaesthetic. Convolutional Neural Networks (CNN) are great for image classification tasks and we can choose from different models like InceptionNet, VGG or Resnet. We found Resnet to be a good base architecture since it can easily be extended to suit different levels of task complexities.

The challenge is to find out which complexity is right for a given task and training data. A model that is too shallow will underfit the problem and is simply not complex enough to solve the task. A model that is too deep needs a lot of hardware resources, time for training and tends to overfit. An overfitting model memorizes the training data instead of generalizing the underlying concepts which results in poor classifications for unseen images.

In practice, we start with a simple model and then gradually increase its complexity until the model starts to show signs of overfitting. We then look at the results (loss and accuracy of training and validation data during the training) and choose the level of complexity that slightly overfits the training data. We stick to this model and take care of the overfitting in the training stage by applying regularization techniques. The more experience you have the faster you will be able to find the right model complexity.

Splitting the Data

Our example dataset consists of 7.400 images, half of which are aesthetic images and the other half unaesthetic images. We split the dataset randomly into three parts: 75% training, 10% validation and 15% test data.

The training data is used to train the model. The validation data is used to assess the performance of the model in each epoch of the training phase. The test data is not used during training but afterwards to check how well the model can generalize and perform on unseen data.

We always want to use as much data as possible for training, because more data means a better model. But we also need to know how well the model generalizes. Basically, the more samples we have the more we can use as training data. If we have millions of samples, the proportion of validation and test data can be smaller, but still meaningful, for example when having a split of 95%, 2.5%, and 2.5%.

Metrics to Assess Model Performance

What will be the metrics to evaluate the training success of our model? The most essential and popular metrics are accuracy and precision/recall.

Accuracy is given by the number of correctly classified examples divided by the total number of classified examples. Let’s say we have 1k test images. If the model predicts 863 images correctly, then our model accuracy would be 86.3%.

In situations in which different classes have different importance or we have an unbalanced data set, we also use precision/recall as metrics. For example a legitimate message should not be classified as spam (high precision) but we would tolerate some spam in the inbox (lower recall). In those cases we use metrics that can tell us how reliable the model is in classifying samples correctly (precision) and how good it is to correctly classify all available samples from a given class (recall). In practice we have to choose between a high precision or a high recall as it is usually impossible to have both.

However, since nerds want to keep things “simple”, we want to express the model performance in one number. To do so, we can combine precision and recall into one metric by calculating the harmonic mean between those two. The result is called the F1 score.

Summary

What we’ve talked about today is the work we have to do inbetween data collection/data preparation and the actual model training. We have defined the classification type for our task, we have chosen the right initial model architecture, we have divided the data set into subsets for model training, and we have defined the metrics we will apply to our chosen model. In the next part of our blog post series we will continue with the actual model training. Stay tuned!

And don’t forget: If you need an experienced helping hand for your own machine learning project, drop us a line.

Don’t forget to give us your ? !

ML Model Evaluation: Insights Into Our Machine Learning Process was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.