For the past few years, I have been tinkering with machine learning on the side, but I have struggled to find ways to incorporate it into my day job as a senior UX designer.

I recently saw a presentation in which the UX team at Airbnb took a hand-drawn wireframe sketch and fed it into a computer using a webcam. The computer recognized the wireframe objects and turned them into HTML code (https://airbnb.design/sketching-interfaces/). I was awestruck.

Airbnb’s presentation led me to wonder how easy or difficult it would be to reproduce their results. Since, to my knowledge, there are no code repositories or in-depth papers to reference, I had few clues on how to get started.

Scope:

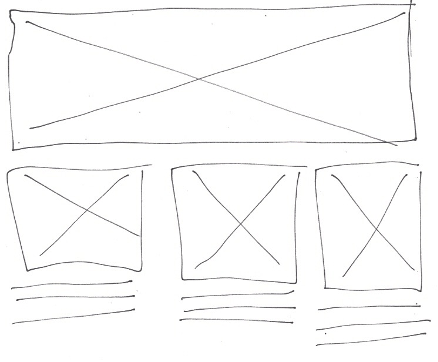

My initial goal was to detect a placeholder image and paragraph text on a hand-drawn wireframe fed into the computer via a scanner or webcam. A future iteration could use the size and location of each object the machine learning model detects to dynamically insert HTML code into a web page.
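
To make that future iteration concrete, here is a minimal sketch of turning detections into markup. It assumes each detection is a dict with a class label under `"name"` and a bounding box under `"box_points"` as `[x1, y1, x2, y2]` pixels; the label names `"image"` and `"text"`, the `TEMPLATES` table, and `detections_to_html` are all hypothetical, not part of Airbnb's system or any library.

```python
# Hypothetical mapping from a detected class label to an HTML template.
# "image" and "text" are assumed label names; use whatever class names
# you chose when annotating.
TEMPLATES = {
    "image": '<img src="placeholder.png" alt="Placeholder image" style="{style}">',
    "text": '<p style="{style}">Lorem ipsum dolor sit amet.</p>',
}


def detections_to_html(detections):
    """Turn detections into absolutely positioned HTML elements.

    Each detection is assumed to be a dict with a "name" (class label) and
    "box_points" ([x1, y1, x2, y2] in pixels of the scanned sketch).
    Labels without a template are skipped.
    """
    elements = []
    # Sort top-to-bottom, then left-to-right, so the markup roughly follows reading order.
    ordered = sorted(detections, key=lambda d: (d["box_points"][1], d["box_points"][0]))
    for det in ordered:
        template = TEMPLATES.get(det["name"])
        if template is None:
            continue
        x1, y1, x2, y2 = det["box_points"]
        style = (f"position:absolute;left:{x1}px;top:{y1}px;"
                 f"width:{x2 - x1}px;height:{y2 - y1}px")
        elements.append(template.format(style=style))
    return '<div style="position:relative">\n' + "\n".join(elements) + "\n</div>"


print(detections_to_html([
    {"name": "image", "box_points": [80, 120, 1100, 700]},
    {"name": "text", "box_points": [80, 760, 1100, 1050]},
]))
```

Pixel coordinates from a scan will not map one-to-one onto a browser layout, so a fuller version would probably scale the boxes or snap them to a layout grid rather than position elements absolutely.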

Model:

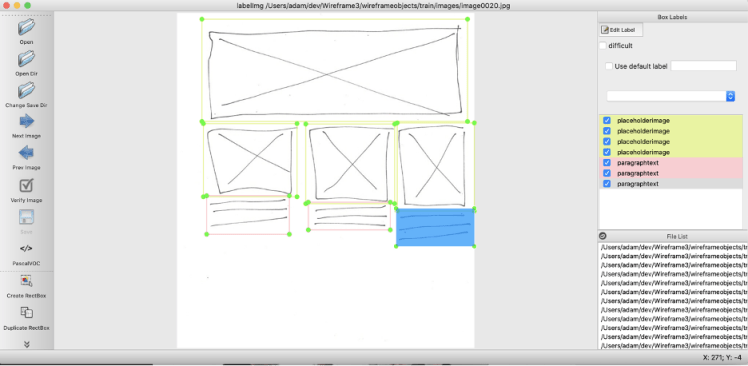

My first step toward a custom object detection model was to annotate the data. Using labelImg, I marked the location of each placeholder image and each block of placeholder text, for both the training images and the validation images.
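
By default, labelImg saves each image's boxes as a Pascal VOC XML file. The sketch below reads such a file with Python's standard `xml.etree.ElementTree`, which is a quick way to sanity-check annotations before training. The sample XML, its file name, and the label names are made up for illustration; `read_voc_boxes` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET


def read_voc_boxes(xml_text):
    """Return (label, xmin, ymin, xmax, ymax) tuples from a Pascal VOC annotation."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        label = obj.findtext("name")
        bb = obj.find("bndbox")
        # Coordinates are stored as text; parse via float in case of decimals.
        coords = [int(float(bb.findtext(tag))) for tag in ("xmin", "ymin", "xmax", "ymax")]
        boxes.append((label, *coords))
    return boxes


# Illustrative annotation in the Pascal VOC layout (values are invented).
SAMPLE = """<annotation>
  <filename>wireframe_01.jpg</filename>
  <size><width>1200</width><height>1600</height><depth>3</depth></size>
  <object>
    <name>image</name>
    <bndbox><xmin>80</xmin><ymin>120</ymin><xmax>1100</xmax><ymax>700</ymax></bndbox>
  </object>
  <object>
    <name>text</name>
    <bndbox><xmin>80</xmin><ymin>760</ymin><xmax>1100</xmax><ymax>1050</ymax></bndbox>
  </object>
</annotation>"""

for box in read_voc_boxes(SAMPLE):
    print(box)
```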

After annotating the images, it was time to train the model. I used the ImageAI library in Python to create it. Depending on the number of images and epochs, training can be a real strain on the computer’s CPU and disk space, and it can take several hours, if not days, on a standard computer without a GPU.
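
Because a training run can take hours, it is worth catching a missing annotation before starting one. This sketch assumes the dataset layout that ImageAI's custom detection documentation describes: `train/` and `validation/` folders, each containing `images/` and `annotations/`, with each XML file sharing its image's file name stem. `find_unannotated` is a hypothetical helper, not part of ImageAI.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def find_unannotated(data_dir):
    """List images that have no matching .xml annotation.

    Assumes the layout <data_dir>/{train,validation}/{images,annotations}/,
    where img_1.jpg is expected to have annotations/img_1.xml.
    """
    missing = []
    for split in ("train", "validation"):
        images = Path(data_dir, split, "images")
        annotations = Path(data_dir, split, "annotations")
        if not images.is_dir():
            raise FileNotFoundError(f"expected directory: {images}")
        for img in sorted(images.glob("*")):
            # Only check files that look like images; compare suffixes case-insensitively.
            if img.suffix.lower() in IMAGE_SUFFIXES and not (annotations / f"{img.stem}.xml").exists():
                missing.append(f"{split}/images/{img.name}")
    return missing
```

Once this returns an empty list, training itself goes through ImageAI's `DetectionModelTrainer` class; its configuration options have changed between ImageAI releases, so check the documentation for the version you have installed.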


Results:

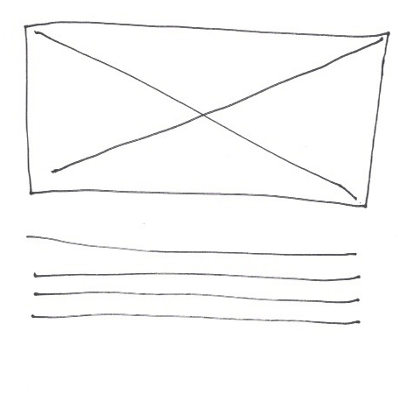

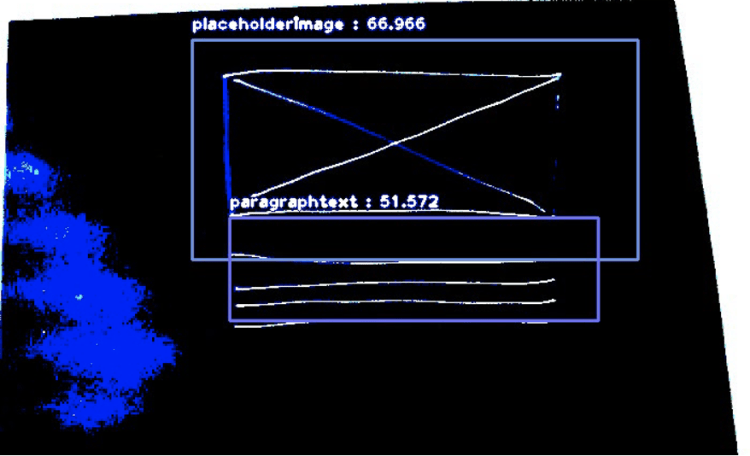

After training, the object detection model was ready for testing. Using a flatbed scanner, I scanned a wireframe sketch that was not part of the training or validation set. I ran the model against my scanned wireframe image to get a sense of how accurate the model was.
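
One common way to put a number on how accurate a single detection is, beyond eyeballing it, is intersection over union (IoU) between the predicted box and a box you label by hand on the same scan. This is a standard object detection metric, not something the experiment here reports; under the PASCAL VOC convention, a detection with IoU of at least 0.5 against the ground truth is usually counted as correct. Boxes are assumed to be `[x1, y1, x2, y2]` pixel coordinates.

```python
def iou(box_a, box_b):
    """Intersection over union of two [x1, y1, x2, y2] boxes (pixel coordinates)."""
    # Corners of the overlapping rectangle (may be empty).
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# A prediction shifted halfway off a 10x10 hand-labeled box overlaps by one third.
print(iou([0, 0, 10, 10], [5, 0, 15, 10]))
```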

The detection results show that the model found both the placeholder image and the paragraph text; however, the detection probability for the paragraph text is low, at 51.6%. Adding more images to the training and validation sets could produce a more accurate model.
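
A low-confidence result like the 51.6% text detection is a good candidate for a review step rather than automatic use. The sketch below assumes detections are dicts with `"name"` and `"percentage_probability"` (a 0 to 100 score), which is the shape ImageAI's detectors return as far as I know; `split_by_confidence` and its 60% cutoff are hypothetical choices for illustration, not a recommended threshold.

```python
def split_by_confidence(detections, threshold=60.0):
    """Separate detections into confident ones and ones worth a second look.

    Each detection is assumed to be a dict with "name" and
    "percentage_probability" (0-100). The default threshold is arbitrary.
    """
    keep = [d for d in detections if d["percentage_probability"] >= threshold]
    review = [d for d in detections if d["percentage_probability"] < threshold]
    return keep, review


keep, review = split_by_confidence([
    {"name": "image", "percentage_probability": 90.0},  # hypothetical value, not reported in the article
    {"name": "text", "percentage_probability": 51.6},   # the low text score reported above
])
print("keep:", [d["name"] for d in keep])      # keep: ['image']
print("review:", [d["name"] for d in review])  # review: ['text']
```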

Thoughts:

While it’s easy to dismiss Airbnb’s work as presentational fluff that gets shown to executives (been there, done that), I think their work is more substantial. Airbnb demonstrated how machine learning could automate the busy work of converting wireframe sketches into code when design standards are well defined, leaving designers more time for value-added activities. As ML continues to improve, I expect we will see fundamental changes both to the designs themselves and to the tools and workflows used to create them.


Using Machine Learning to Recognize Objects on Hand Drawn Wireframes was originally published in Becoming Human: Artificial Intelligence Magazine on Medium.