Have you ever wondered what goes into a creative mind? Creativity, especially artistic creativity, has long been considered innately human. But to what extent can deep learning be used to produce creative work, and how does it differ from human creativity?

As a first attempt at exploring this, I’d like to train an LSTM (long short-term memory) neural network on a set of jazz standards and generate new jazz music.

DATA COLLECTION

I wrote a scraper to download over 100 royalty-free jazz standards in MIDI format. MIDI files are more compact than MP3s and contain information such as the sequence of notes, instruments, key signature, and tempo, which makes processing music data easier.

I selected a subset of songs as my training dataset, including the Dave Brubeck Quartet’s 1959 recording “Take Five”, one of the most popular jazz recordings of all time.

MODELING

To extract data from MIDI files, I’m using music21, a Python toolkit developed at MIT. I’m also using Keras, a deep-learning API that runs on top of TensorFlow, a library for deep neural networks.

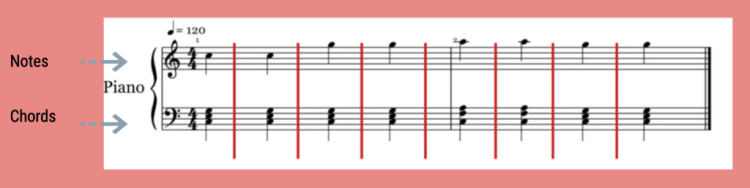

Generally speaking, there are three components to any given piece of music: melody, harmony, and rhythm. So for each MIDI file, I’m going to extract a sequence of notes, which represent melody, and chords, which represent harmony.
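
As a rough illustration of that extraction step, here is a minimal sketch assuming music21 version 7 or later (for `flatten()`). The function names `chord_token` and `extract_tokens` and the dot-joined chord encoding are illustrative assumptions, not necessarily what the project code does.

```python
def chord_token(pitch_classes):
    """Encode a chord as a dot-joined string of pitch classes, e.g. '0.4.7'."""
    return ".".join(str(pc) for pc in pitch_classes)


def extract_tokens(midi_path):
    """Return the notes and chords of one MIDI file as a list of string tokens."""
    # Imported lazily so chord_token stays usable without music21 installed.
    from music21 import chord, converter, note

    score = converter.parse(midi_path)
    tokens = []
    # flatten() merges all parts into one stream; .notes keeps notes and
    # chords and skips rests.
    for element in score.flatten().notes:
        if isinstance(element, note.Note):
            # A single note carries melody, e.g. 'E4'.
            tokens.append(str(element.pitch))
        elif isinstance(element, chord.Chord):
            # A chord carries harmony, e.g. '0.4.7' for a C major triad.
            tokens.append(chord_token(element.normalOrder))
    return tokens
```

Calling `extract_tokens` on each file and concatenating the results would give one long token list for the whole dataset. Note that this encoding keeps pitch only, so rhythm is not captured.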

Like text, music is sequential data, so I’m using a recurrent neural network (RNN) as the sequence learning model. In a nutshell, an RNN applies the same operation at every step of the sequence, feeding its previous state back in each time, hence “recurrent”.
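
To make the “same operation, repeated” idea concrete, here is a toy sketch of a recurrent step with a single scalar hidden state. The weights `w_x`, `w_h`, and `b` are arbitrary assumed values chosen for illustration; a real network learns them and uses vectors and matrices instead of scalars.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrent step: mix the current input with the previous state."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(inputs, w_x=0.5, w_h=0.8, b=0.0):
    """Apply the same step, with the same weights, to every item in the sequence."""
    h = 0.0  # initial hidden state
    states = []
    for x in inputs:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


# Only the first input is non-zero, yet later states stay non-zero:
# the hidden state carries information forward through the sequence.
states = run_rnn([1.0, 0.0, 0.0])
```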

In particular, I will be using a Long Short-Term Memory (LSTM) network, a type of RNN that can retain information over long stretches of a sequence and is commonly used for text generation and time-series prediction.
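
For reference, a stacked LSTM classifier in Keras might look like the sketch below. The layer sizes, dropout rate, loss, and optimizer are assumptions for illustration and are not taken from the project code; `build_model` is a hypothetical helper name.

```python
def build_model(seq_len, n_vocab, units=256):
    """Build a small stacked-LSTM model that predicts the next token id."""
    # Imported lazily because TensorFlow is a heavy dependency.
    from tensorflow import keras
    from tensorflow.keras import layers

    model = keras.Sequential([
        # Each training example is seq_len time steps with one feature each.
        keras.Input(shape=(seq_len, 1)),
        # The first LSTM returns the full sequence so a second LSTM can follow.
        layers.LSTM(units, return_sequences=True),
        layers.Dropout(0.3),
        layers.LSTM(units),
        # One output probability per distinct note or chord token.
        layers.Dense(n_vocab, activation="softmax"),
    ])
    # Targets are integer token ids, hence the sparse loss.
    model.compile(loss="sparse_categorical_crossentropy", optimizer="adam")
    return model
```

The softmax output treats next-note prediction as a classification problem over the vocabulary of notes and chords seen in the training data.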

I set the sequence length to 100, which means each note is predicted from the previous 100 notes. The offset is the time interval between consecutive notes or chords; advancing it by a fixed amount for each generated note prevents the notes from stacking on top of one another. With those hyperparameters set, I pick a random starting point in the list of notes and have the model generate a sequence of 500 notes, roughly 2 minutes of music, which is enough to judge the quality of the output.
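
The windowing and generation steps can be sketched in plain Python as follows. Everything here is an assumption made for illustration: the helper names, the 0.5 offset step, and `predict_next`, a stand-in for whatever wraps the trained model (for example, taking the most probable class from its prediction).

```python
import random


def make_sequences(tokens, seq_len=100):
    """Slide a window over the tokens: seq_len ids in, the following id as target."""
    vocab = sorted(set(tokens))
    token_to_id = {tok: i for i, tok in enumerate(vocab)}
    ids = [token_to_id[tok] for tok in tokens]
    n_windows = len(ids) - seq_len
    inputs = [ids[i:i + seq_len] for i in range(n_windows)]
    targets = [ids[i + seq_len] for i in range(n_windows)]
    return inputs, targets, vocab


def generate(predict_next, inputs, n_notes=500, step=0.5, seed=None):
    """Return (token_id, offset) pairs, starting from a random training window."""
    rng = random.Random(seed)
    window = list(rng.choice(inputs))  # random starting place
    offset = 0.0
    output = []
    for _ in range(n_notes):
        next_id = predict_next(window)
        output.append((next_id, offset))
        # Drop the oldest id and append the prediction, keeping the length fixed.
        window = window[1:] + [next_id]
        # Advance time so the next note does not stack on this one.
        offset += step
    return output
```

Under these assumptions, 500 notes spaced 0.5 quarter notes apart span 250 beats, which is a little over two minutes at 120 beats per minute.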

RESULTS

With everything said, here’s a snippet of the generated music:

Although it sounds nothing like what a jazz musician would play, I’m impressed by the variety of melodic elements and the somewhat jazz-like harmony. The rhythm can most definitely be improved.

This concludes my personal attempt at training a music generation model. As far as AI music creation goes, there’s Google’s Magenta project, which has produced songs written and performed by AI, and Sony’s Flow Machines, which released a song called “Daddy’s Car” in the style of The Beatles.

With potentially more complex models, there is a lot neural networks can do in the field of creative work. While AI is no replacement for human creativity, it’s easy to see a collaborative future between artists, data scientists, programmers, and researchers to create more compelling content.

The code for this project can be found on my GitHub page. Feel free to reach out and connect with me here or on LinkedIn. Thanks!

Generating Music Using LSTM Neural Network was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.