Fifty-eight blogs/videos to enter Deep Learning with TensorFlow ( along with 8 memes to keep you on track )

Deep Learning could be super fascinating if you’re already in love with other ML algorithms. DL is all about huge neural networks, with numerous parameters and different layers that each perform a specific task.

This checklist will provide you with a smooth start ( and also a safer start ) with Deep Learning in TensorFlow.

Warning: This story uses some dreaded terminology. Reader discretion is advised for those who have jumped directly into DL, thereby skipping basic ML algos.

Without wasting time, let’s get started.

The 30,000 feet overview ( Contents )

The Basics

Mathematics of backpropagation ( bonus, refresher ),

- Derivatives ( Khan Academy )

- Partial Derivatives ( Khan Academy )

- Chain Rule ( Khan Academy )

- Derivatives of Activation functions

TensorFlow Essentials

Entering the DL World

- Activation Functions

- Dense Layers

- Batch Normalization

- Dropout Layers

- Weight Initialization Techniques

⚔️ Training the Model

- Optimizers ( Variants of Gradient Descent )

- Loss Functions

- Learning Rate Schedule

- Early Stopping

- Batch Size ( and Epochs vs. Iterations vs. Batch Size )

- Metrics

- Regularization

More from the Author

Contribute to this story

The Basics

Understanding Neural Networks

Artificial Neural Networks are systems inspired by biological neurons. They are used to solve complex problems because they can approximate the functions which map the inputs to their labels.

By Tony Yiu,

And 3Blue1Brown is a must,

☠️ Your attention here, please. The following topic, which DL folks call “backpropagation”, is a serial killer for many ML beginners. It roams around with creepy weapons like partial derivatives and the chain rule, so discretion is advised for beginners with weak mathematics.

Backpropagation

Backpropagation computes the gradients of the cost function with respect to the parameters of the model. Gradient descent then uses these gradients to move the parameters towards a minimum of the cost function. Having a strong understanding of how backpropagation works under the hood is essential in deep learning.

- Back Propagation Neural Network: Explained With Simple Example

- A Step by Step Backpropagation Example

- Back-Propagation is very simple. Who made it Complicated ?
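To make the chain rule less scary, here is a minimal sketch in plain Python ( no TensorFlow needed ). It assumes the tiniest possible network: a single neuron with a sigmoid activation and a squared-error loss. The function names and the learning rate are picked only for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(w, b, x):
    # One neuron: z = w * x + b, a = sigmoid(z)
    return sigmoid(w * x + b)

def loss(w, b, x, y):
    # Squared error for a single sample
    return 0.5 * (forward(w, b, x) - y) ** 2

def gradients(w, b, x, y):
    # Chain rule: dL/dw = dL/da * da/dz * dz/dw
    a = forward(w, b, x)
    dL_da = a - y
    da_dz = a * (1.0 - a)       # derivative of the sigmoid
    dL_dz = dL_da * da_dz
    return dL_dz * x, dL_dz     # dz/dw = x, dz/db = 1

def train(w, b, x, y, lr=0.5, steps=200):
    # Plain gradient descent using the backpropagated gradients
    for _ in range(steps):
        dw, db = gradients(w, b, x, y)
        w -= lr * dw
        b -= lr * db
    return w, b
```

A real network repeats the same chain-rule step layer by layer, from the output back to the input.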

This 3Blue1Brown playlist might be helpful,

TensorFlow Essentials

Although the TensorFlow website is enough for a beginner to get started, I would like to focus on some important topics.

Dataset

tf.data.Dataset is an important class, which helps us load huge datasets easily and feed them to our NNs. It has a bunch of useful methods. Using the dataset.map() method along with various functions in the tf.image module, we can perform a number of operations on images from our data.

TensorBoard

TensorBoard will help you visualize the values of various metrics used during the training of our model. It could be the accuracy of our model or the MAE ( Mean Absolute Error ) in case of a regression problem.

The official docs,

TensorBoard.dev can help you share the results of your experiments easily,

TensorBoard.dev – Upload and Share ML Experiments for Free

Some other helpful resources,

- Using TensorBoard in Notebooks | TensorFlow

- Deep Dive into TensorBoard: Tutorial With Examples – neptune.ai

Entering the DL World

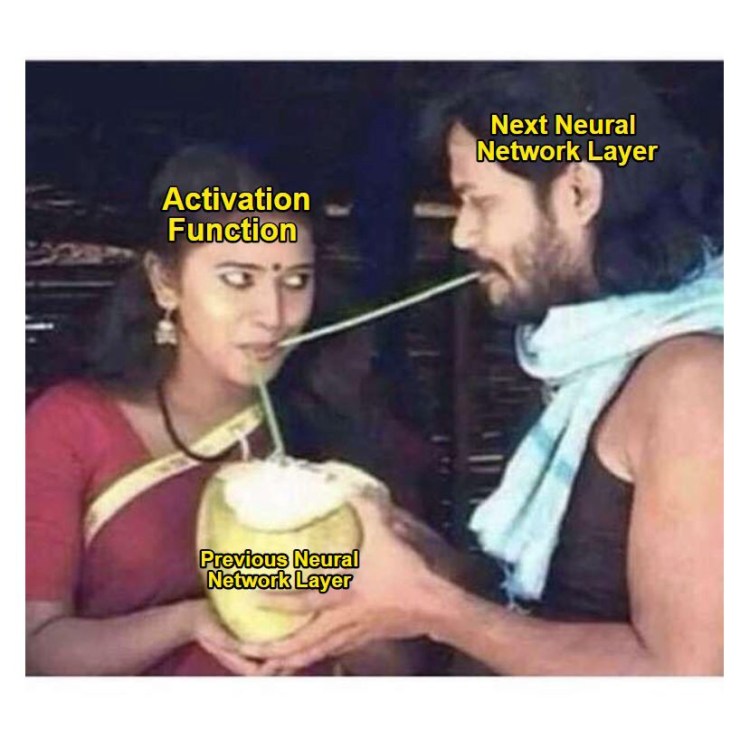

Activation Functions

Activation functions introduce non-linearity into our NN, so that it can approximate the function which maps our input data to the labels. Every activation function has its own pros and cons, so the choice will always depend on your use case.

- Activation Functions : Sigmoid, ReLU, Leaky ReLU and Softmax basics for Neural Networks and Deep…

- How to Choose an Activation Function for Deep Learning – Machine Learning Mastery

- Deep Learning: Which Loss and Activation Functions should I use?

Also, have a look at the tf.keras.activations module, to see the available activation functions in TensorFlow,

Module: tf.keras.activations | TensorFlow Core v2.4.1
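For a quick feel of what these functions compute, here is a small plain-Python sketch of four common activations. It works on plain floats and lists rather than tensors, and the alpha=0.01 slope for Leaky ReLU is only an assumed value ( libraries pick their own defaults ).

```python
import math

def sigmoid(x):
    # Squashes any real number into (0, 1)
    return 1.0 / (1.0 + math.exp(-x))

def relu(x):
    # Passes positive values through, zeroes out the rest
    return max(0.0, x)

def leaky_relu(x, alpha=0.01):
    # Like ReLU, but keeps a small slope for negative inputs
    return x if x > 0 else alpha * x

def softmax(xs):
    # Subtracting the max keeps exp() from overflowing
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]
```

Softmax turns a list of scores into probabilities that sum to 1, which is why it usually sits at the end of a classifier.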

Dense Layers

Dense layers are important in almost every NN. You’ll find them in most architectures, whether for text classification, image classification or GANs. Here’s a good read by Hunter Heidenreich about tf.keras.layers.Dense for a better understanding,

Understanding Keras — Dense Layers
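Under the hood, a dense layer computes activation( inputs · W + b ). The sketch below spells that out in plain Python for a single sample. The helper names dense and relu are made up for this example, and the weights are laid out as one row per input and one column per unit.

```python
def relu(z):
    return max(0.0, z)

def dense(inputs, weights, biases, activation=None):
    """inputs: n floats, weights: n rows x units columns, biases: units floats."""
    outputs = []
    for j in range(len(biases)):
        # Weighted sum of all inputs for unit j, plus its bias
        z = sum(inputs[i] * weights[i][j] for i in range(len(inputs))) + biases[j]
        outputs.append(activation(z) if activation else z)
    return outputs

# Two inputs feeding three units
out = dense(
    [1.0, 2.0],
    [[1.0, 0.0, -1.0],
     [0.5, 1.0, -1.0]],
    [0.0, 1.0, 0.0],
    activation=relu,
)
```

Every input is connected to every unit, which is exactly why the layer is called “dense”.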

Batch Normalization

Batch normalization is one of the most effective techniques to accelerate the training of NNs. It normalizes the inputs of a layer over each mini-batch, which its original authors describe as tackling internal covariate shift. Some good blogs you may read,

- A Gentle Introduction to Batch Normalization for Deep Neural Networks – Machine Learning Mastery

- How to use Batch Normalization with Keras? – MachineCurve

From Richmond Alake,

Batch Normalization In Neural Networks Explained (Algorithm Breakdown)

Andrew Ng’s explanation on Batch Normalization,

And lastly, make sure you explore the tf.keras.layers.BatchNormalization class,

tf.keras.layers.BatchNormalization | TensorFlow Core v2.4.1
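The training-time arithmetic of batch normalization can be sketched in a few lines of plain Python. This is a simplified version for one feature across a batch. The gamma, beta and eps values are assumed defaults for illustration, and the moving averages used at inference time are left out.

```python
import math

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    # Statistics are computed over the current mini-batch only
    n = len(batch)
    mean = sum(batch) / n
    var = sum((x - mean) ** 2 for x in batch) / n
    # Normalize, then scale by gamma and shift by beta (both learnable)
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]

normalized = batch_norm([1.0, 2.0, 3.0, 4.0])
```

After this step, the feature has roughly zero mean and unit variance within the batch, whatever its original scale was.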

Dropout

Dropout is widely used in NNs, as it fights efficiently against overfitting. During training, it randomly sets activations to 0, given a dropout rate ( the rate argument of tf.keras.layers.Dropout ), which is the probability of an activation being set to 0.

It’s always better to start with a question in mind,

- Why does adding a dropout layer improve deep/machine learning performance, given that dropout suppresses some neurons from the model?

- A Gentle Introduction to Dropout for Regularizing Deep Neural Networks – Machine Learning Mastery

By Amar Budhiraja,

Learning Less to Learn Better — Dropout in (Deep) Machine learning

Understanding the math behind Dropout, in a simple and lucid manner,

Simplified Math behind Dropout in Deep Learning

Make sure you explore the official TF docs for tf.keras.layers.Dropout ,

tf.keras.layers.Dropout | TensorFlow Core v2.4.1
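Here is a minimal plain-Python sketch of “inverted” dropout, the flavour in which the surviving activations are scaled up by 1 / ( 1 - rate ) so that their expected sum stays the same. The function signature and the rng argument are assumptions made for this example, and rate is expected to be below 1.

```python
import random

def dropout(activations, rate, training=True, rng=random):
    # At inference time dropout does nothing
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    # Drop each activation with probability `rate`, scale the survivors
    return [a / keep if rng.random() >= rate else 0.0 for a in activations]

rng = random.Random(0)
dropped = dropout([1.0] * 10000, rate=0.5, rng=rng)
```

With a rate of 0.5, about half of the values come out as 0 and the other half as 2.0.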

Weight Initialization Techniques

Considering Dense layers, the parameters W and b, i.e. the weights and the biases, need initial values. The weights are usually initialized randomly ( the biases often start at zero ). They are then optimized by Gradient Descent ( with gradients computed by backpropagation ). But there are some smart ways to initialize these parameters so that the loss function can quickly reach a minimum. Some good reads are,

By Saurabh Yadav,

- Weight Initialization Techniques in Neural Networks

- Weight Initialization for Deep Learning Neural Networks – Machine Learning Mastery

The story below, by James Dellinger, will give you a glimpse of how and where we should use different weight initialization techniques,

Weight Initialization in Neural Networks: A Journey From the Basics to Kaiming

Lastly, explore the tf.keras.initializers module,

Keras documentation: Layer weight initializers
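Two popular schemes, Glorot ( Xavier ) uniform and He normal, boil down to choosing the spread of the random weights from the layer sizes. The sketch below is a plain-Python approximation. It uses an untruncated Gaussian for He normal, which is a simplification, and the function names only mirror the usual naming.

```python
import math
import random

def glorot_uniform(fan_in, fan_out, rng):
    # Uniform in [-limit, limit] with limit = sqrt(6 / (fan_in + fan_out))
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_out)]
            for _ in range(fan_in)]

def he_normal(fan_in, fan_out, rng):
    # Gaussian with standard deviation sqrt(2 / fan_in)
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_out)]
            for _ in range(fan_in)]

rng = random.Random(42)
w_glorot = glorot_uniform(100, 50, rng)
w_he = he_normal(100, 50, rng)
```

The idea in both cases is to keep the scale of the signals roughly stable as they pass through the layers.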

Training the Model

Optimizers ( Variants of Gradient Descent )

A number of different optimizers are available in the tf.keras.optimizers module. Each optimizer is a variant of, or an improvement on, the gradient descent algorithm.

By Imad Dabbura,

By Raimi Karim,

10 Gradient Descent Optimisation Algorithms
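To see what “variant of gradient descent” means, here is a toy comparison in plain Python between vanilla gradient descent and gradient descent with momentum. It minimizes the made-up function f( w ) = ( w - 3 )², and the learning rate and momentum values are arbitrary picks for the example.

```python
def grad(w):
    # Derivative of f(w) = (w - 3)^2, whose minimum is at w = 3
    return 2.0 * (w - 3.0)

def sgd(w, lr=0.1, steps=50):
    for _ in range(steps):
        w -= lr * grad(w)
    return w

def sgd_momentum(w, lr=0.1, momentum=0.9, steps=50):
    velocity = 0.0
    for _ in range(steps):
        # The velocity remembers a decaying sum of past gradients
        velocity = momentum * velocity - lr * grad(w)
        w += velocity
    return w
```

Optimizers like RMSprop and Adam follow the same pattern, they just keep different running statistics of the gradients.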

Loss Functions

Cost functions ( synonymously called loss functions ) penalize the model for incorrect predictions. They measure how far the model’s predictions are from the true labels, and therefore how much improvement is needed. Different loss functions have different use-cases, which are detailed thoroughly in the stories below,

- Loss and Loss Functions for Training Deep Learning Neural Networks – Machine Learning Mastery

- How to Choose Loss Functions When Training Deep Learning Neural Networks – Machine Learning Mastery

- Introduction to Loss Functions
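As a quick illustration, here are two of the most common losses written in plain Python: mean squared error for regression and binary cross-entropy for two-class classification. The eps clipping value is an assumption, used only to keep log() away from zero.

```python
import math

def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    total = 0.0
    for t, p in zip(y_true, y_pred):
        # Clip the prediction so that log(0) never happens
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)
```

Notice how cross-entropy punishes a confident wrong prediction much harder than a confident right one.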

A great video from Stanford,

Learning Rate Schedule

In practice, the learning rate of an NN model is usually decreased over time. As the value of the loss function decreases, i.e. as we get close to a minimum, we take smaller steps. Note, the learning rate decides the step size of gradient descent.

- Using Learning Rate Schedules for Deep Learning Models in Python with Keras – Machine Learning Mastery

- Learning Rate Schedule in Practice: an example with Keras and TensorFlow 2.0

Make sure you explore the Keras docs,

Keras documentation: LearningRateScheduler
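One of the simplest schedules is step decay, where the learning rate is cut by a fixed factor every few epochs. The sketch below is plain Python, and the constants INITIAL_LR, DROP and EPOCHS_PER_DROP are made-up values for illustration.

```python
INITIAL_LR = 0.1
DROP = 0.5
EPOCHS_PER_DROP = 10

def step_decay(epoch, lr=None):
    # `lr` (the current learning rate) is accepted but not used here,
    # since step decay only depends on the epoch index.
    return INITIAL_LR * DROP ** (epoch // EPOCHS_PER_DROP)

schedule = [step_decay(epoch) for epoch in range(30)]
```

A function with this ( epoch, lr ) shape should be usable as the schedule of the tf.keras.callbacks.LearningRateScheduler callback, though that hookup is not shown here.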

Early Stopping

Early stopping is a technique wherein we stop the training of our model when a given metric ( usually measured on validation data ) stops improving. So, we stop the training before the model overfits, thereby avoiding any excessive training which can worsen the results.

- A Gentle Introduction to Early Stopping to Avoid Overtraining Neural Networks – Machine Learning Mastery

- Introduction to Early Stopping: an effective tool to regularize neural nets

By Upendra Vijay,

Early Stopping to avoid overfitting in neural network- Keras
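The logic behind early stopping fits in a small loop. The plain-Python sketch below takes a list of validation losses ( one per epoch ) and returns the epoch at which training would stop. The names patience and min_delta mirror the arguments of tf.keras.callbacks.EarlyStopping, but the function itself is only an illustration.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            # Improvement: remember it and reset the counter
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch  # no improvement for `patience` epochs
    return len(val_losses) - 1  # never triggered, trained to the end

stop_at = early_stopping_epoch([1.0, 0.8, 0.7, 0.72, 0.71, 0.9], patience=2)
```

Here the best loss ( 0.7 ) is seen at epoch 2, and training stops two epochs later since nothing beats it.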

Batch Size ( and Epochs vs. Iterations vs. Batch Size )

Batch size is the number of samples present in a mini-batch. Each batch is sent through the NN and the errors are averaged across the samples. So, the parameters of the NN are updated once for each batch. Also, see Mini-Batch Gradient Descent.

By Kevin Shen,

Effect of batch size on training dynamics

This could probably clear a common confusion among beginners,

By SAGAR SHARMA,

Epoch vs Batch Size vs Iterations
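The relationship between the three terms is just arithmetic: one iteration processes one batch, and one epoch is a full pass over the data. Here is a small plain-Python sketch with helper names invented for this example.

```python
import math

def iterations_per_epoch(num_samples, batch_size):
    # The last batch may be smaller, hence the ceiling
    return math.ceil(num_samples / batch_size)

def total_iterations(num_samples, batch_size, epochs):
    return iterations_per_epoch(num_samples, batch_size) * epochs

def mini_batches(samples, batch_size):
    # Yield consecutive slices of at most `batch_size` samples
    for start in range(0, len(samples), batch_size):
        yield samples[start:start + batch_size]

batches = list(mini_batches(list(range(10)), 4))
```

So, with 1000 samples and a batch size of 32, one epoch takes 32 iterations, and 5 epochs take 160.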

Metrics

Metrics are functions whose value is evaluated to see how good a model is. The primary difference between a metric and a cost function is that the cost function is used to optimize the parameters of the model, whereas a metric is only monitored and does not drive the optimization. A metric could be calculated at each epoch or step in order to keep track of the model’s performance.

By Shervin Minaee,

20 Popular Machine Learning Metrics. Part 1: Classification & Regression Evaluation Metrics

By Aditya Mishra,

- Metrics to Evaluate your Machine Learning Algorithm

- How to Use Metrics for Deep Learning with Keras in Python – Machine Learning Mastery

Sometimes, we may require some metrics which aren’t available in the tf.keras.metrics module. In this case, a custom metric should be implemented, as described in this blog,

Custom Loss and Custom Metrics Using Keras Sequential Model API
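Two everyday metrics, accuracy for classification and MAE for regression, can be written in a couple of lines of plain Python. This sketch works on plain lists and only illustrates the arithmetic, not the Keras metric API.

```python
def accuracy(y_true, y_pred):
    # Fraction of predictions that match the labels exactly
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)

def mean_absolute_error(y_true, y_pred):
    # Average size of the error, ignoring its direction
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

acc = accuracy([1, 0, 1, 1], [1, 1, 1, 0])
mae = mean_absolute_error([3.0, 5.0], [2.0, 7.0])
```

Neither function needs to be differentiable, which is one reason why metrics and losses are kept separate.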

Regularization

Regularization consists of those techniques which help our model avoid overfitting and thereby generalize better.

By Richmond Alake,

- Regularization Techniques And Their Implementation In TensorFlow(Keras)

- An Overview of Regularization Techniques in Deep Learning (with Python code)
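The most common flavour, weight regularization, simply adds a penalty on the size of the weights to the loss. Below is a plain-Python sketch of the L1 and L2 penalties. The lam coefficient and the helper names are assumptions made for this example.

```python
def l2_penalty(weights, lam=0.01):
    # Sum of squared weights, scaled by lam
    return lam * sum(w * w for w in weights)

def l1_penalty(weights, lam=0.01):
    # Sum of absolute weights, scaled by lam
    return lam * sum(abs(w) for w in weights)

def regularized_loss(data_loss, weights, lam=0.01):
    # The optimizer now has to trade off fit against weight size
    return data_loss + l2_penalty(weights, lam)
```

Large weights make the total loss bigger, so the optimizer is nudged towards simpler models.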

Excellent videos from Andrew Ng,

✌️ More from the Author

✌️ Thanks

A super big read, right? Did you find some other blog/video/book useful, which could be super-cool for others as well? Well, you’re at the right place! Send an email to equipintelligence@gmail.com to showcase your resource in this story ( the credits would of course go to you! ).

Goodbye and a happy Deep Learning journey!

58 Resources To Help Get Started With Deep Learning ( In TF ) was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.