Roadmap to Data Science

In this article, I will give you a complete roadmap for becoming a Data Scientist, with skills like Machine Learning, Deep Learning and Artificial Intelligence.

You are probably thinking that you need a Master’s degree or a PhD from a great university to become a Data Scientist. It is true that a Master’s or a PhD in a related field from a top university will open doors in Data Science and Machine Learning.

But you really don’t need a degree to become a Data Scientist. Let me explain how to do it.

How to Become a Data Scientist

There are 5 steps to becoming a Data Scientist:

1. Learn Python

Python is a great language for Data Science. Some people will tell you to learn R or Matlab instead of Python. Just don’t listen to them for now, although one day you might need to learn R and Matlab as well.

To begin with, Python is the best choice in my view, because its external libraries for Data Science and Machine Learning are extensive and easy to use.

2. Learn Mathematics (Linear Algebra and Statistics)

Data Science is all about applying maths to data. If you don’t know the maths, you will have difficulties with Data Science. You can start learning Mathematics for Data Science using Numerical Python (NumPy) and Statistics.
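To give a feel for what "applying maths to data" looks like in practice, here is a minimal sketch using NumPy. The system of equations and the list of scores are made up for illustration; they are not from any real data set.

```python
import numpy as np

# Linear algebra: solve the system A @ x = b, i.e.
#   2x + 1y = 5
#   1x + 3y = 10
A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
b = np.array([5.0, 10.0])
solution = np.linalg.solve(A, b)

# Statistics: summarize a small, made-up sample of scores
scores = np.array([2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0])
mean = scores.mean()          # arithmetic mean
std = scores.std()            # population standard deviation (ddof=0)
median = np.median(scores)    # middle value of the sorted sample

print("solution:", solution)
print("mean:", mean, "std:", std, "median:", median)
```

Working through small examples like this by hand first, and then checking them with NumPy, is one way to learn the maths and the tool at the same time.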

3. Learn Python Libraries

The Python libraries are absolutely fantastic. If you don’t know what a library is, it’s basically an add-on for Python that gives it a lot more functionality.
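As a small illustration of the idea, the sketch below computes a median twice: once with a hand-written function, and once by importing a library. It uses `statistics`, which ships with Python; third-party libraries are imported the same way, but usually have to be installed first (for example with `pip`). The function name `median_by_hand` and the list of ages are made up for this example.

```python
# Without a library: write and test the logic yourself.
def median_by_hand(values):
    ordered = sorted(values)
    n = len(ordered)
    middle = n // 2
    if n % 2 == 1:
        return ordered[middle]
    # Even number of values: average the two middle ones
    return (ordered[middle - 1] + ordered[middle]) / 2


# With a library: import it once and reuse code someone else wrote.
import statistics

ages = [23, 31, 27, 45, 38]
by_hand = median_by_hand(ages)
from_library = statistics.median(ages)

print(by_hand, from_library)
```

Both calls give the same answer; the library version just saves you from writing and maintaining the code yourself.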

There are some great libraries for Data Science that you need to learn:

- NumPy (for linear algebra)

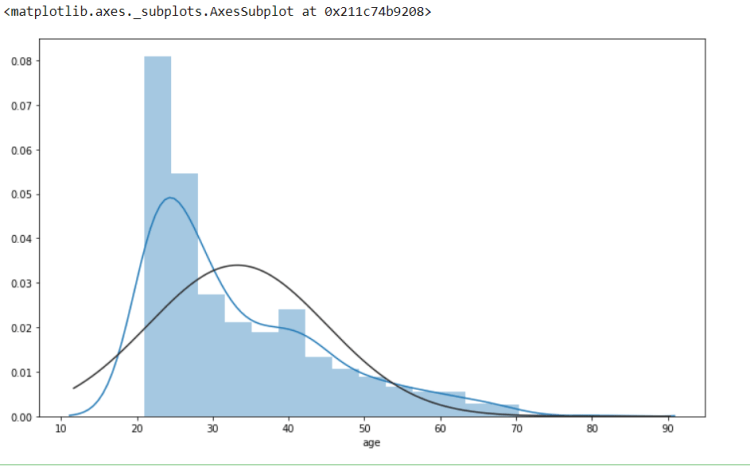

- Pandas (for statistics and data manipulation)

- Matplotlib (for data visualization)

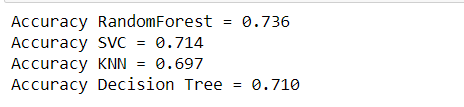

- Scikit-Learn (for Machine Learning)

- Learn Every Topic of Data Science
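To show how the four libraries listed above can fit together, here is a minimal sketch of one possible workflow. The numbers are made up (roughly `score = 3 * hours + 2`), and choosing a linear regression is my assumption for the example, not the only way to use scikit-learn.

```python
import io

import matplotlib
matplotlib.use("Agg")  # non-interactive backend, so no window is needed
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression

# NumPy: made-up numeric data, roughly score = 3 * hours + 2
hours = np.arange(1.0, 11.0)  # 1.0, 2.0, ..., 10.0
score = np.array([5.1, 7.9, 11.2, 13.8, 17.1, 20.0, 22.9, 26.2, 28.8, 32.1])

# pandas: put the data in a labeled table and summarize it
df = pd.DataFrame({"hours": hours, "score": score})
summary = df.describe()

# scikit-learn: fit a straight line to the data
model = LinearRegression()
model.fit(df[["hours"]], df["score"])
slope = model.coef_[0]
intercept = model.intercept_

# Matplotlib: plot the data and the fitted line
fig, ax = plt.subplots()
ax.scatter(df["hours"], df["score"], label="data")
ax.plot(df["hours"], model.predict(df[["hours"]]), label="fitted line")
ax.set_xlabel("hours")
ax.set_ylabel("score")
ax.legend()

# Save the figure to an in-memory buffer; pass a file name to write to disk instead
buffer = io.BytesIO()
fig.savefig(buffer, format="png")
plt.close(fig)

print(summary)
print("slope:", slope, "intercept:", intercept)
```

The pattern of "load into pandas, model with scikit-learn, plot with Matplotlib" comes up in many beginner projects, so it is worth practicing on a tiny example like this one.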

4. Start working on projects

After learning all these libraries you can start working on your own projects. You can find more than 20 projects at Data Science and Machine Learning Projects, or you can search the Internet for data sets to practice on.

You can download data sets from https://catalog.data.gov/dataset, and if you want to work on projects related to Finance, https://in.finance.yahoo.com/ would be the best place for you.
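Once you have downloaded a data set, a typical first step is to load it and take a quick look. The sketch below assumes the download is a CSV file; to keep it self-contained, it reads a small made-up table from a string instead of a real file. The column names, the values and the file name `prices.csv` in the comment are all hypothetical.

```python
import io

import pandas as pd

# Stand-in for a downloaded file (made-up columns and values).
# With a real download you would pass its path instead,
# e.g. pd.read_csv("prices.csv", parse_dates=["date"])
csv_text = """date,close,volume
2020-01-02,100.0,1200
2020-01-03,102.0,1500
2020-01-06,101.0,900
2020-01-07,105.0,1800
"""

prices = pd.read_csv(io.StringIO(csv_text), parse_dates=["date"])

# First checks on any new data set: size and missing values
n_rows, n_cols = prices.shape
missing_per_column = prices.isna().sum()

# A simple derived column: day-over-day percentage change of the closing price
prices["daily_return"] = prices["close"].pct_change()

print(n_rows, n_cols)
print(missing_per_column)
print(prices.head())
```

The first row of `daily_return` is empty because there is no earlier day to compare with; noticing details like this is part of getting to know a new data set.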

5. Register yourself with GitHub

The last step is to register at GitHub, where you can share your projects with everyone in the world.

The idea is to build a good portfolio of your projects on GitHub, so that people can see what you do and what your level of programming is.

Try to upload at least one project to GitHub every month, so that when employers ask about your contributions to any project, you can impress them with your skills.


Roadmap to Data Scientist was originally published in Becoming Human: Artificial Intelligence Magazine on Medium.

Via https://becominghuman.ai/roadmap-to-data-scientist-b1dcb17896e7?source=rss—-5e5bef33608a—4

source https://365datascience.weebly.com/the-best-data-science-blog-2020/roadmap-to-data-scientist