Originally from KDnuggets https://ift.tt/2Z0wqyX

source https://365datascience.weebly.com/the-best-data-science-blog-2020/bias-in-ai-a-primer

365 Data Science is an online educational career website that offers the incredible opportunity to find your way into the data science world no matter your previous knowledge and experience.

Originally from KDnuggets https://ift.tt/2NkO2QG

source https://365datascience.weebly.com/the-best-data-science-blog-2020/machine-learning-in-dask

This is the second part of the Optimization series. In this blog post, we’ll continue to discuss the rod balancing problem and try to solve it using the gradient descent method. In Part-1 we covered what optimization is and tried to solve the same problem using exhaustive search (a gradient-free method). If you haven’t read it, here is the link.

Introduction to Optimization and Gradient Descent Algorithm [Part-1].

Gradient-based algorithms are usually much faster than gradient-free methods. The whole objective is to keep improving over time, i.e., to make each next step yield a better solution than the previous one. The Gradient Descent Algorithm is one of the most well-known gradient-based algorithms. The decision variables used here are continuous, since a continuous variable gives a well-defined gradient (slope) at any given point on the curve.

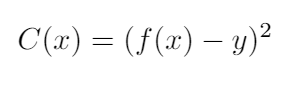

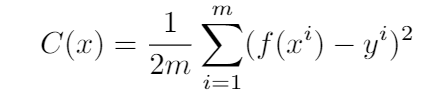

So, to solve our problem with gradient descent we’ll reframe our objective function as a minimization problem. To do this we’ll make an assumption and define our cost function (also sometimes known as the loss function or error function). We will assume that the best solution is the one that balances the rod for at least 10 seconds (let’s call this target ‘y’). At its base, the cost function returns the difference between the actual output and the desired output. For our problem, the cost function would become:

C(x) = (f(x) - y)^2

Note: we squared the difference to avoid negative values. You could take the absolute value instead; either will work.

Now for every test result f(x), i.e., the time in seconds the rod stayed on the finger, we can calculate our cost C(x). Our objective therefore changes from maximizing f(x) to minimizing C(x). We can state the modified objective function as,

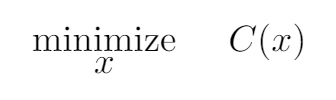

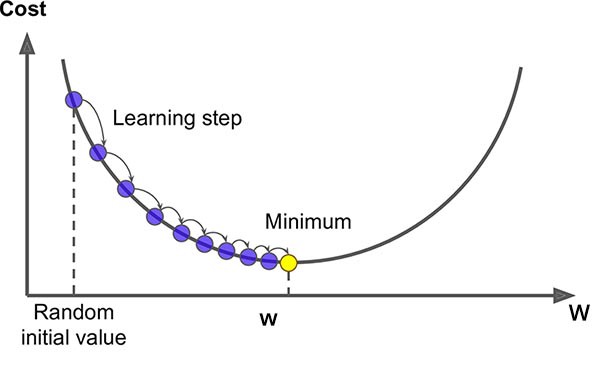

Since the objective function has changed, our curve is also inverted: on the y-axis we now plot cost instead of time, and we try to minimize it.

Any gradient-descent-based algorithm follows a 3-step procedure:

1. Search direction.

2. Step size.

3. Convergence check.

Once we know the error, we have to find the direction in which to move our finger on the rod for a better solution. The direction is decided by taking the derivative of the cost function with respect to the decision variable(s). This simply means calculating the slope (‘dC/dx’) of the curve at a specific value of the decision variable; this slope is known as the gradient. On a bowl-shaped curve like ours, the steeper the slope, the further we are from the minimum (i.e., the lowest point on the curve).
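To make the idea of the slope concrete, here is a minimal sketch that estimates dC/dx numerically. The balance-time function `balance_time` is purely hypothetical (the real f(x) comes from physical tests); it assumes a rod spanning positions 0 to 1 that balances for 10 seconds at the centre and for less time the further the finger is from it.

```python
TARGET_SECONDS = 10.0  # the desired balance time 'y' from the text


def balance_time(x):
    """Hypothetical f(x): seconds the rod stays balanced at position x in [0, 1]."""
    return 10.0 - 20.0 * abs(x - 0.5)


def cost(x):
    """C(x) = (f(x) - y)^2, the squared gap to the 10-second target."""
    return (balance_time(x) - TARGET_SECONDS) ** 2


def slope(x, h=1e-6):
    """Central-difference estimate of dC/dx at x."""
    return (cost(x + h) - cost(x - h)) / (2.0 * h)


for x in (0.1, 0.4, 0.5, 0.9):
    print(f"x={x:.1f}  cost={cost(x):7.2f}  slope={slope(x):8.2f}")
```

Left of the centre the slope is negative, right of it the slope is positive, and at the centre it is close to zero, which is exactly the information the search direction step needs.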

For Gradient descent we apply a simple rule,

“If the slope is negative, we increase the decision variable(s), and if the slope is positive, we decrease the decision variable(s) by some value.”

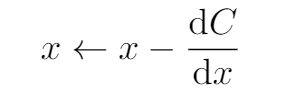

Once we know the direction in which to move our variables for the next step, we update them. The above rule can be written in mathematical terms as,

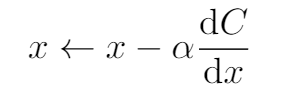

But using this update rule directly may overshoot, skipping the minimum and jumping to the other side of the curve. So, instead of reaching the centre of the rod, the variable may jump to the other end and introduce a greater error. To avoid this we decide the step size by multiplying the gradient by a very small value (usually 0.001 or 0.0001); this prevents overshooting because we never take a very large step. This value is known as the learning rate (α), and it unlocks the key principle where gradient descent shines,

“Big steps when away, small steps when closer.”

What the above statement says is that when the slope is greater (i.e., the curve is steep) the variables update by larger values, and when the slope gets smaller (i.e., the curve flattens as we approach the bottom) the variables update by very small values. This is the behaviour we actually follow in the real world when solving this kind of problem. So our update rule now changes to,

x := x - α · (dC/dx)

We repeat this update for a certain number of epochs (one epoch is one complete pass over the training examples) until it converges.
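The three steps (search direction, step size, convergence check) can be sketched in a few lines. This is only an illustration under assumptions: the cost curve C(x) = 400 · (x - 0.5)^2 is hypothetical, standing in for a rod that spans positions 0 to 1 and balances at its centre, and the names `cost_slope`, `gradient_descent`, `max_epochs` and `tolerance` are made up for the example.

```python
def cost_slope(x):
    """dC/dx for a hypothetical cost curve C(x) = 400 * (x - 0.5)^2."""
    return 800.0 * (x - 0.5)


def gradient_descent(x_start, learning_rate=0.001, max_epochs=100, tolerance=1e-6):
    """Repeat x := x - alpha * dC/dx until the update becomes negligible."""
    x = x_start
    history = [x]
    for _ in range(max_epochs):
        update = learning_rate * cost_slope(x)  # step size shrinks with the slope
        x -= update
        history.append(x)
        if abs(update) < tolerance:  # convergence check
            break
    return x, history


# Without a learning rate, a single step from x = 0.1 leaves the rod entirely.
unscaled_step = 0.1 - cost_slope(0.1)

x_final, history = gradient_descent(0.1)
step_sizes = [abs(b - a) for a, b in zip(history, history[1:])]
print("unscaled first step lands at:", unscaled_step)
print(f"with alpha=0.001: x = {x_final:.6f} after {len(step_sizes)} updates")
print("first and last step sizes:", step_sizes[0], step_sizes[-1])
```

Printing the step sizes shows “big steps when away, small steps when closer” directly: each update is smaller than the one before it as x approaches the centre.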

Below is a video of me solving the rod balancing problem using the gradient descent method. You’ll notice how fast, compared to the exhaustive search we discussed in Part-1, we reach the point ‘x’ on the rod where it balances perfectly.

Solving the rod balancing problem with the gradient descent method

The graph below shows how the value of x converges to the minimum.

There are different variants of gradient descent; the one we looked at in this post is Stochastic Gradient Descent (SGD). SGD performs the update operation on the variables after each example/test, just as we did in our problem. There are also other variants, such as:

Batch or Mini-batch Gradient Descent: Here, the update operation is performed after each iteration, where one iteration processes ‘m’ training examples. In batch gradient descent, m is the total number of training examples, whereas in mini-batch gradient descent the training examples are divided into batches of a fixed batch size (usually 32 or 64), and each batch supplies the m training examples for one update. An epoch is considered complete only when all the batches have completed their update operation. For this, the most common cost function is Mean Squared Error (MSE), which is almost the same as what we used in our problem. The difference is that we add up the individual squared errors for the ‘m’ examples and divide by ‘2m’. It is given as,

C = (1 / 2m) · Σ (f(x_i) - y_i)^2, summed over i = 1 to m
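As a rough sketch of the difference between the two variants, the example below fits a single weight `w` in a toy model `w * x` using the cost described above (squared errors summed over m examples and divided by 2m). The data, the model, the batch size of 4 and all function names are assumptions chosen only to keep the example small; they are not from the rod balancing experiment.

```python
def half_mse(w, xs, ys):
    """Cost: sum of squared errors over m examples, divided by 2m."""
    m = len(xs)
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / (2 * m)


def half_mse_slope(w, xs, ys):
    """d(cost)/dw; the 2 from the square cancels the 1/2 in the cost."""
    m = len(xs)
    return sum((w * x - y) * x for x, y in zip(xs, ys)) / m


def minibatch_gd(xs, ys, batch_size, learning_rate=0.05, epochs=50):
    """One update per batch; an epoch ends once every batch has been used."""
    w = 0.0
    for _ in range(epochs):
        for start in range(0, len(xs), batch_size):
            batch_x = xs[start:start + batch_size]
            batch_y = ys[start:start + batch_size]
            w -= learning_rate * half_mse_slope(w, batch_x, batch_y)
    return w


xs = [0.5 * i for i in range(1, 9)]   # toy inputs 0.5, 1.0, ..., 4.0
ys = [3.0 * x for x in xs]            # toy targets generated with w = 3

w_mini = minibatch_gd(xs, ys, batch_size=4)         # mini-batch: m = 4
w_batch = minibatch_gd(xs, ys, batch_size=len(xs))  # batch: m = all examples
print("mini-batch w:", w_mini, " batch w:", w_batch)
```

Setting `batch_size=1` in the same function would give the per-example updates of SGD.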

There are also different gradient descent algorithms; a few important ones are listed below. They do provide better performance, but the underlying concepts remain the same.

Refer to this article; it explains the performance differences and how the algorithms vary from each other.

An overview of gradient descent optimization algorithms

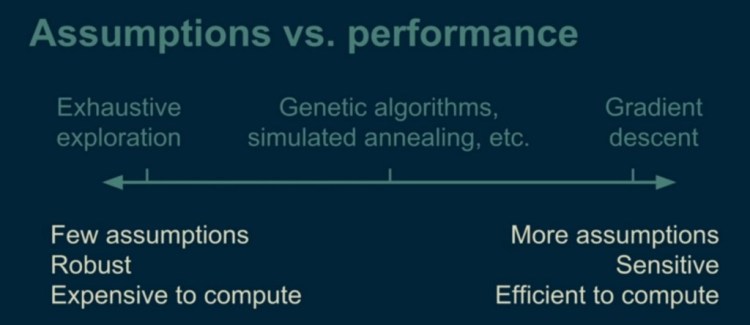

When selecting an optimization algorithm, the usual advice is to choose based on popularity. But this is not always the right choice; look at the image below and decide what suits your problem best.

Congratulations! If you have made it this far, you should have a solid understanding of the Gradient Descent Algorithm by now. Let me know if you found this 2-part series helpful by leaving a response or giving a clap. I will cover more machine learning foundation topics in the future; to get notified, do follow me here.

Introduction to Optimization and Gradient Descent Algorithm [Part-2]. was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Spoiler alert: it’s not magic, it’s machine learning

An Absurd Challenge

Today I will show you how to obtain churn predictions before your coffee is ready. Put some coffee in the machine or French press now, so that by the time you have all those churn predictions you can go through them with the hot coffee you brewed in the meantime.

A Friendly Introduction 🙂

Let me introduce myself. I am M Ahmed Tayib, and I work as a data scientist at Gauss Statistical Solutions. I am your friendly neighborhood data scientist who loves coffee and loves an irrelevant challenge, like making coffee vs. conducting churn prediction.

Firstly, A definition

Churn is a term/label given to customers who discontinue the services/subscription a company provides. For instance, if a user has not renewed their Spotify subscription for 4 months, then Spotify may consider that user churned.

The same can be said for any business in this modern era. Every business has churned customers: a few segments of customers, or to be precise ex-customers, who discontinued its services.
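To make the definition concrete, here is a small sketch of how such a label could be derived from renewal dates. The 120-day threshold (roughly the 4 months in the example), the customer names and the dates are all made up for illustration; each business would pick its own rule.

```python
from datetime import date

CHURN_AFTER_DAYS = 120  # roughly 4 months; a hypothetical threshold


def is_churned(last_renewal, today, threshold_days=CHURN_AFTER_DAYS):
    """Label a customer as churned if they have not renewed within the threshold."""
    return (today - last_renewal).days > threshold_days


# Hypothetical customers and the date each one last renewed.
last_renewals = {"alice": date(2020, 1, 10), "bob": date(2020, 6, 1)}
today = date(2020, 7, 1)

labels = {name: is_churned(renewed, today) for name, renewed in last_renewals.items()}
churn_rate = sum(labels.values()) / len(labels)
print(labels, f"churn rate = {churn_rate:.0%}")
```

Labels like these are the historical ground truth a churn prediction model would learn from.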

Why Is Churn Prediction Important?

A wise guy once said:

“Retaining a customer is always less expensive than acquiring a new one.”

I guess the quote speaks for itself. Of course, you need to obtain new customers to grow but that does not mean you have to lose some of them and do nothing to retain them.

The solution: once you know which customers are likely to churn and why, you can take appropriate action to retain them. However, the problem is that in real life it is much, much harder to know which customers are about to pull a churn stunt, let alone why.

How to Predict Churn?

Now that I have established what churn is and why churn prediction is important, lemme wrap it up with how to actually do it, and do it faster than it has ever been done before.

All you need is your sales and customer/user data. Please follow the steps below:

Voila! That’s it. Literally all you need to do is upload the data, and everything else is taken care of.

Go grab your coffee; it should be ready by now. Then we can see what these predictions are and what good they will do.

A Much Needed Explanation

Well, you must be asking: what about feature engineering, model training, model testing, and all those tasks? Lemme clear that up for you. Once you upload your data to the Enhencer platform, this is what it does:

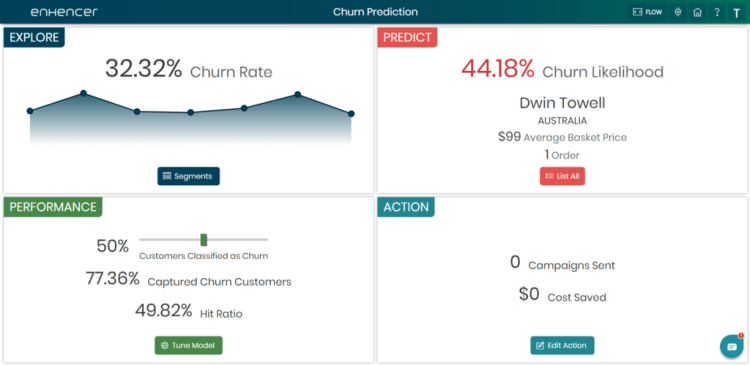

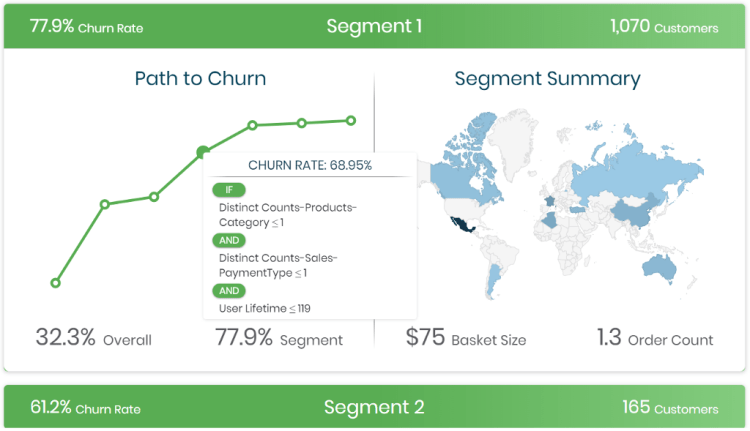

What you see in the first picture is the summary of the whole process. It gives the historical churn rate over time. Then it provides the likelihood of churn for all your customers, so that you know which customers are going to churn in the near future. Lastly, and most important of all, the second picture shows the customer segments, which explain why customers in these segments are going to churn.

Pretty neat, huh? All you need to do is look at which customers are highly likely to churn, see the reason behind it, and take immediate, appropriate action. This should go a long way toward helping anyone retain their customers and reduce the churn rate.

An Unnecessary Conclusion

You can definitely change the models and tune them later if something is not to your liking, and what’s more, there are tons of algorithms available as options, but that’s for the advanced, enthusiast users.

It can’t get easier than that. From my experience in the data science field, all of this would have taken days, if not weeks, in the traditional manner using R, Python, SQL, etc.

Here is a video showing how to upload your data to Enhencer and obtain churn predictions easily.

Well, go ahead and try it yourself, and thank me later:

Enhencer – The Predictive Storyteller

Churn Prediction in 5 minutes was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Via https://becominghuman.ai/churn-prediction-in-5-minutes-1c24602fd9f3?source=rss—-5e5bef33608a—4

source https://365datascience.weebly.com/the-best-data-science-blog-2020/churn-prediction-in-5-minutes

An estimated 5 to 25 million tons of plastic are dumped into our oceans every year. Although we know that this trash, which is devastating for biodiversity and the environment, mainly comes from rivers and beaches, it remains really tough to catch it all, especially in some poor and neglected countries.

From this observation, we decided to explore a deep learning solution that detects rubbish with a camera.

Is it possible to build a trash detector that would be the first step toward cleaning up our rivers?

This is the kind of issue we want to address at Picsell.ia.

The code for this experiment is available here.

The TACO trash Dataset available on the Dataset Hub seemed really well suited to begin our project. This Dataset contains 1500 pictures of everyday trash that we tried to annotate accurately.

As you can see, the Dataset isn’t really well balanced and doesn’t have a lot of annotated objects, but it’s a first try. We will allow you to contribute to this Dataset in the next few weeks!

We fine-tuned a Faster R-CNN model pre-trained on COCO (a model already available on the Model Hub), and within 2 hours our “trash detector” model was ready to be tested on the playground with our own data.

Let’s have a look at the playground:

Here, the bottles in this picture are well detected, but to be honest, our model is not as good on every image.

The reasons are, of course, the lack of training data and the fact that we haven’t optimized our network so far. This leaves a lot of room for improvement.

Although this project has proved the concept, it can hardly be used in practice for the moment. Our final goal is to embed our model on an edge device (e.g., NVIDIA Jetson) and run it in real time. But for that, the model has to be far more accurate than it is now and also suited for near real-time inference.

But how can we improve the model?

This article is the first part of a series. Here we have just made a ‘prototype’ of our algorithm, but the next parts will be dedicated to:

The different ideas we would like to implement are the following:

Finally, if we succeed in setting this trash detector up, the next goal will be to find a way to pull the detected trash out of the water. That is outside our field for now, but we are sure that you will find solutions, such as the first floating devices that already exist.

This is a long-running project that will need a lot of iterations, but after all, that’s what we want to facilitate at Picsell.ia!

Do not hesitate to come along and help us build the biggest Open Computer Vision Hub, and share this with your relatives. Also, please come and ask for help if you need it; it’s always a pleasure to guide you 😉

Trying to contribute to the fight against waste pollution with Computer Vision — Part I was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally from KDnuggets https://ift.tt/3dszaKt

Originally from KDnuggets https://ift.tt/3hOC3Jd

Originally from KDnuggets https://ift.tt/3emKdpW

One of the most common statements you hear today is ‘We are living in a digitalized world’. But are you actually aware of what…

Continue reading on Becoming Human: Artificial Intelligence Magazine »

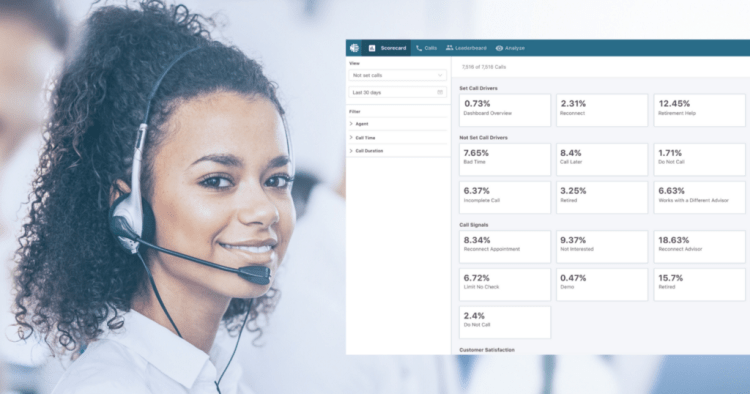

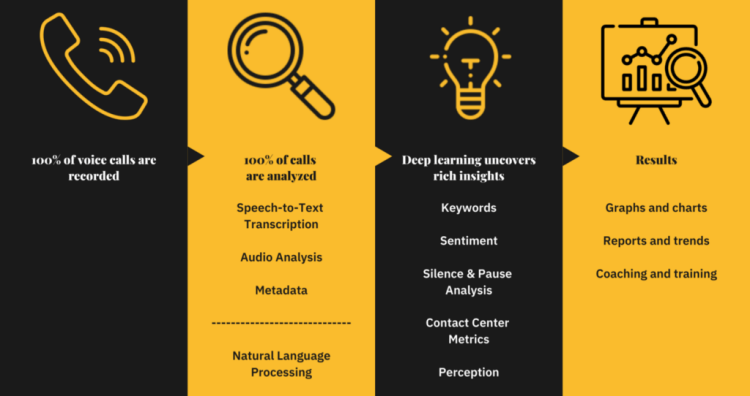

Voice AI sits at the intersection of speech analytics and quality management, using cutting edge speech technology and natural language processing to transcribe and analyze support calls at a massive scale. It enables organizations to analyze 100% of customer conversations with the ultimate goal of improving agent performance and the overall customer experience.

With Voice AI, key moments in a conversation can be unearthed to provide a more accurate picture of how the contact center as a whole, and the individual agents staffing it, are performing across key metrics. Analytics on interactions like sentiment, emotion, dead air, hold times, supervisor escalations, redaction, and more are often game-changing for businesses that previously had low QA coverage, and Voice AI is the key to identifying them.

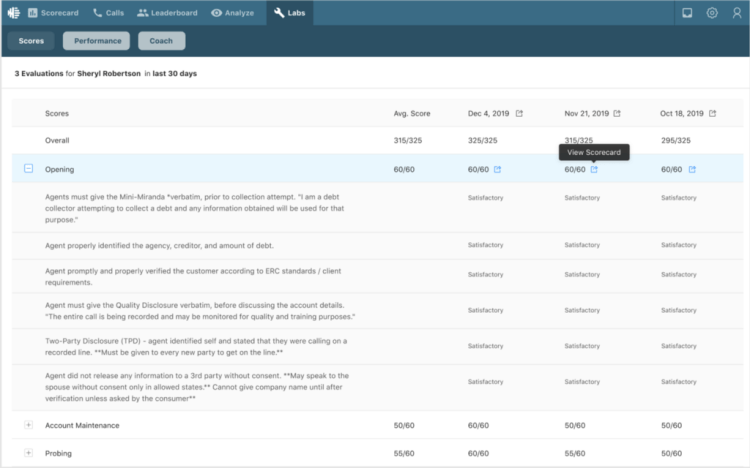

Once transcribed and analyzed, Voice AI automatically scores some parts of conversations and enables organizations to create tailored coaching programs for agents.

Voice AI emerged as a result of the inefficiencies of highly manual traditional quality management (QM) programs. Organizations struggled to fully understand performance, monitor mission-critical KPIs and compliance, and better enable their agents with relevant training.

Voice AI, built around Analytics-enabled Quality Management, radically transforms an organization’s quality programs in a number of ways:

“Success for our team means bringing out the best in each agent. We’re able to do that by throwing out the one size fits all coaching approach and tailoring conversations on an individual basis. Voice AI helps ensure you’re an optimized leader by identifying and addressing the right gaps.”

– Kyle Kizer, Compliance Manager at Root Insurance

Voice AI provides a wide variety of benefits to improve processes across a contact center. Next, we’ll dig into some real-world use cases of how Voice AI and quality automation are used today.

Regulatory compliance is paramount across all industries, most notably financial, insurance, and healthcare. It ensures the protection of customer data, backed by strict legislation to enforce it. As a result, monitoring mandatory compliance dialogues and categorizing voice calls relevant to specific compliance regulations is mission-critical.

Examples

Measurable KPIs

The beginning of a conversation is important from both a customer experience and a compliance standpoint. The end of a conversation is also important for customer experience, and it is an opportunity both to confirm how the call went and to set next steps.

Examples

Measurable KPIs

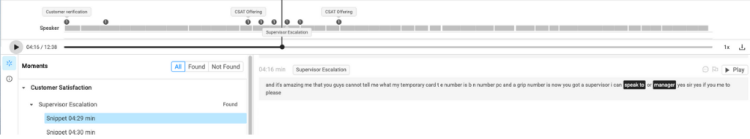

Supervisor escalations are a strong indicator of a negative customer experience, a metric for agent call-handling, or an organizational inefficiency. Escalations in any contact center are costly due to the amount of time and resources required to resolve them.

Examples

Measurable KPIs

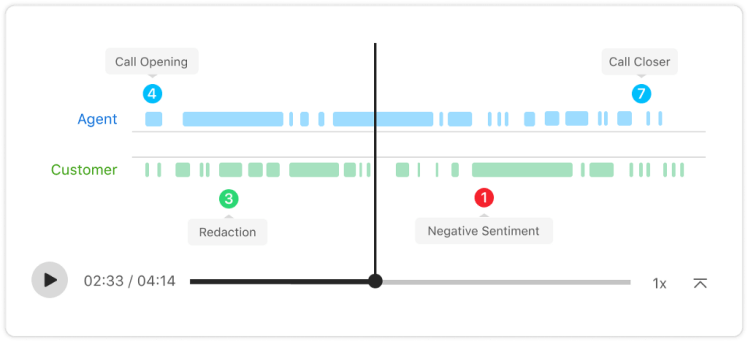

Customer sentiment analysis is an indicator of how people feel about a brand, its products, and its service. Simple sentiment analysis is determined based on words alone (what’s being said), while advanced sentiment analysis (tonality-based) considers tone and volume as well (what, how, and why it’s said).
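As a rough illustration of the “simple” words-only approach, the sketch below scores a transcript by counting words from two tiny, made-up word lists. The lists, the function name `word_sentiment` and the sample sentences are assumptions for this example only; it does not represent how any particular Voice AI product works, and tonality-based analysis would additionally need audio features such as tone and volume.

```python
# Tiny hypothetical word lists; a real lexicon would be far larger.
POSITIVE = {"great", "thanks", "helpful", "resolved", "happy"}
NEGATIVE = {"cancel", "frustrated", "terrible", "waiting", "unacceptable"}


def word_sentiment(transcript):
    """Classify a transcript from its words alone (what is said, not how)."""
    words = [w.strip(".,!?").lower() for w in transcript.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


for line in (
    "Thanks, that was really helpful!",
    "I am frustrated and want to cancel.",
    "Please hold.",
):
    print(f"{word_sentiment(line):8s} | {line}")
```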

Examples

Measurable KPIs

Voice AI is transforming the contact center as we know it, uncovering deep insights across every single voice call that takes place, and providing the data needed to drive more targeted training programs for agents.

“What’s exciting about Voice AI is that we can change the way we’re coaching and re-write our quality cards. We can move away from check-boxes and focus on real skill development. Using Voice AI helps us change behavior faster.”

– Dale Sturgill, VP Call Center Operations, EmployBridge

What is Voice AI? Benefits and Use Cases for Transforming Quality Management was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.