Originally from KDnuggets https://ift.tt/2BQ05TE

Artificial Intelligence in Testing

Today, the surface area for software testing and quality assurance is wider than ever. Applications interact with each other through a large number of APIs and legacy systems, and their complexity grows from one day to the next. This increased complexity brings a fair share of challenges, many of which can be overcome by machine-based intelligence.

As software development life cycles become more complex by the day and delivery windows shrink, testers need to provide feedback and evaluations to development teams promptly. Given the breakneck pace of new software and product launches, there is no choice but to test smartly and rigorously in this day and age.

Releases that used to happen once a month now happen weekly, and updates ship almost every day. It is therefore clear that artificial intelligence is key to streamlining software testing and making it smarter and more efficient.

By assembling machines that can accurately simulate human behavior, a team of testers can progress beyond the traditional path of manual testing models to an automated and precision-based continuous testing process.

An AI-powered continuous testing platform can detect changed controls more efficiently than humans, and with constant updates to its algorithms, even slight changes can be observed.

When it comes to automation testing, artificial intelligence is widely used for object classification across user interfaces. Here, recognized controls are categorized when you create tools, and testers can pre-train controls that are commonly seen in out-of-the-box setups. Once the hierarchy of controls has been observed, testers can create a technical map so that the AI looks at the graphical user interface (GUI) to obtain labels for the various controls.

Since testing is about verifying results, access to a large amount of test data is essential. Interestingly, Google DeepMind has created an AI program that uses deep reinforcement learning to play video games, thereby generating a lot of test data.

Down the line, artificial intelligence will be able to observe users performing exploratory testing on a test site, using human insight to assess and identify the applications being tested. In turn, this brings business users into testing, and those users will be able to fully automate test cases.

When assessing user behavior, a risk priority can be assigned, monitored, and classified accordingly. This data is a classic case for automated testing, which can evaluate it and sort out different anomalies. Heat maps can help identify bottlenecks in the process and help you decide which tests to perform. By automating repetitive test cases and manual testing, testers can focus more on making data-driven connections and decisions.

Finally, when the time available for testing is limited, risk-based automation is a critical factor in helping users decide which tests to run to get the greatest coverage. With AI woven into test creation, execution, and data analysis, testers can permanently eliminate the need to update test cases manually, identify controls, and spot links between defects and components more effectively.
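One way to picture risk-based selection under a time limit is a greedy ranking by risk per minute. The sketch below is only an illustration under stated assumptions: the test names, risk scores, and durations are made up, and `select_tests` is a hypothetical helper, not part of any tool mentioned in this article. A real tool would derive the scores from usage data and defect history.

```python
from dataclasses import dataclass


@dataclass
class CandidateTest:
    name: str
    risk: float      # hypothetical risk score: higher means more likely to expose a defect
    minutes: float   # estimated run time


def select_tests(tests, budget_minutes):
    """Greedily pick tests with the best risk-per-minute ratio that fit the budget."""
    ranked = sorted(tests, key=lambda t: t.risk / t.minutes, reverse=True)
    chosen, used = [], 0.0
    for test in ranked:
        if used + test.minutes <= budget_minutes:
            chosen.append(test)
            used += test.minutes
    return chosen


# Illustrative suite; all numbers are invented for the example.
suite = [
    CandidateTest("checkout_flow", risk=0.9, minutes=10),
    CandidateTest("login", risk=0.6, minutes=2),
    CandidateTest("profile_page", risk=0.2, minutes=5),
    CandidateTest("search", risk=0.5, minutes=4),
]

selected = select_tests(suite, budget_minutes=16)
print([t.name for t in selected])
```

A greedy ratio is a simple heuristic rather than an optimal answer; it is used here only because it is easy to read.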

Advantages of AI in testing:

Here are some important advantages of AI services in testing.

Improved accuracy

To err is human. Even the most meticulous tester is bound to make mistakes when performing monotonous manual tests. Automated testing helps by making sure the same steps are performed every time and that detailed results are always recorded. Freed from repetitive manual testing, testers have more time to create new automated software tests and deal with advanced features.

Beyond the limits of manual testing

Running a controlled web application test with 1,000+ users is almost impossible for most software/QA departments. With automated testing, one can simulate tens, hundreds, or thousands of virtual users interacting with a network, software, or web-based application.
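As a rough sketch of what simulating virtual users can look like, the snippet below runs 1,000 simulated sessions concurrently with Python's standard `concurrent.futures` module. To stay self-contained it calls `fake_endpoint`, a hypothetical stand-in that only sleeps briefly; a real load test would issue requests against the application instead.

```python
from concurrent.futures import ThreadPoolExecutor
import time


def fake_endpoint(user_id):
    """Stand-in for a real request; a real load test would call the application here."""
    time.sleep(0.001)  # pretend the server takes about 1 ms
    return {"user": user_id, "status": 200}


def run_virtual_users(n_users, max_workers=50):
    """Run n_users simulated sessions concurrently and collect their responses."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fake_endpoint, range(n_users)))


responses = run_virtual_users(1000)
failures = [r for r in responses if r["status"] != 200]
print(f"{len(responses)} virtual users, {len(failures)} failures")
```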

Can help both developers and testers

Developers can use shared automated tests to quickly diagnose problems before moving to QA. Tests can run automatically whenever source code changes are checked in and notify the team or the developer if they fail. Such features save developers time and boost their confidence.

Increase in total test coverage

Automated testing increases the overall depth and scope of tests, which can lead to an overall improvement in software quality. Automated software tests can look into memory, file contents, and internal program states to determine whether the software behaves as expected. All in all, test automation can run 1,000+ different test cases on each test run, providing coverage that is not possible with manual tests.

Saving time + money = faster time to market

Because software tests are repeated every time the source code is revised, manually repeating those tests is not only time consuming but also very expensive. In contrast, once created, automated tests can be run again and again, very quickly, with zero additional cost. Software testing time can be reduced from days to just hours, which directly saves costs.

AI-based automation tools

Here are some popular AI-based test automation tools.

Testim.io

Testim.io uses machine learning for the authoring, execution, and maintenance of automated tests. It focuses on functional end-to-end testing and user interface testing. The tool becomes smarter with more runs and increases the stability of test suites. Testers can use JavaScript and HTML to write complex programming logic.

Appvance

Appvance uses artificial intelligence to create test cases based on user behavior. The test portfolio comprehensively covers what real users do on production systems, which makes it 100% user-centric.

Test.ai

Test.ai is a mobile test automation tool that uses AI to perform regression testing. It is useful for obtaining performance metrics for your application and is more of a monitoring tool than a functional testing tool.

Functionize

Functionize uses machine learning for functional testing and is similar to other tools in the market in its ability to create tests quickly (without scripts), run multiple tests in minutes, and perform in-depth analytics.

Closing point:

As artificial intelligence moves further into the software development lifecycle with each passing day, companies are considering whether to fully embrace it in their product engineering practices.

In the long run, AI will not be limited to assisting software testers but will apply to all roles in software development that are involved in delivering high-quality products to the market.

Artificial Intelligence in Testing was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Via https://becominghuman.ai/artificial-intelligence-in-testing-1da665725c92?source=rss—-5e5bef33608a—4

source https://365datascience.weebly.com/the-best-data-science-blog-2020/artificial-intelligence-in-testing

[ CVPR ] Automatic Understanding of Image and Video Advertisements

There are more kinds of images in this world than natural images. This research topic asks how a model can understand advertising…

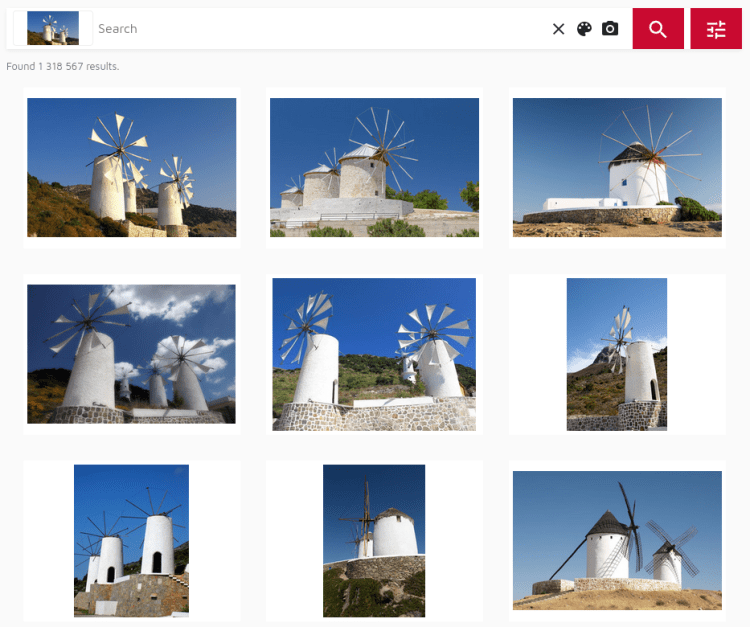

pixolution flow 4 — Our New Visual Search Engine

We’re thrilled to introduce our new major release today: pixolution flow 4. This is a complete rewrite of our visual search engine, with massive improvements and radical innovations.

In this article you’ll learn how all core functionalities have been improved in their performance, how our technology can adapt even better to individual needs thanks to the possibility of custom AI modules, and we’re going to introduce a new feature that opens up new use cases.

Better Visual Search, Duplicate Detection, and Image Tagging

pixolution flow 4 is based on an improved AI model we built. We used a deeper model and trained it with ten times more concepts than our previous version.

With this deeper insight into the content of images, we are able to noticeably improve the visual search experience. Compared to our previous product version, we can now provide more relevant results for visual search queries. The precision of duplicate detection is also significantly improved. It can cope with more variations and is more robust against image modifications.

Our image tagging approach is based on the retrieval of the most similar images that are already tagged and part of your collection. Improvements to our visual search therefore have a direct impact on the quality of tagging predictions. This way, pixolution flow 4 helps you tag new images you want to add to your image collection, using your own language and the specific wording style of your metadata.
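The general idea of retrieval-based tagging can be sketched in a few lines. This is not the pixolution flow implementation: the feature vectors, the cosine similarity, and the `suggest_tags` helper are all assumptions made for illustration. The sketch looks up the most similar images that already carry tags and proposes their tags, weighted by similarity.

```python
import numpy as np
from collections import Counter


def suggest_tags(query_vec, collection_vecs, collection_tags, k=3, top_n=3):
    """Suggest tags for a new image from its k most similar tagged images."""
    # Cosine similarity between the query and every indexed image.
    q = query_vec / np.linalg.norm(query_vec)
    c = collection_vecs / np.linalg.norm(collection_vecs, axis=1, keepdims=True)
    sims = c @ q
    nearest = np.argsort(-sims)[:k]
    # Weight each neighbour's tags by its similarity and keep the best ones.
    scores = Counter()
    for idx in nearest:
        for tag in collection_tags[idx]:
            scores[tag] += float(sims[idx])
    return [tag for tag, _ in scores.most_common(top_n)]


# Toy collection: two-dimensional "features" stand in for real image descriptors.
vecs = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]])
tags = [["ring", "gold"], ["ring", "silver"], ["shoe"]]
suggested = suggest_tags(np.array([1.0, 0.05]), vecs, tags, k=2)
print(suggested)
```

Because the suggestions are copied from images that are already in the collection, they automatically follow the wording of the existing metadata.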

Custom AI Modules for Your Individual Needs

If you have a specialized image collection you would like to classify, or you want to adapt visual search to subtle differences in images, we can now help you even better.

This is possible because pixolution flow 4 is based on a completely new modular architecture. pixolution flow is a hub for modules adding features. For example, the visual search, the multi-color search, or the text space filter are encapsulated in modules.

Modularity is the key. We are now able to build and integrate custom AI modules into pixolution flow 4. In short, it works like this: you tell us your needs and we train an individual AI model with your data. The resulting module can easily be added to your pixolution flow instance, providing custom functionality such as specialized analysis and search features.

The possibilities here are endless. For example, we could integrate a module for recognizing certain objects and logos, or to detect persons in images, or a module that classifies image content based on specific categories.

Drop us a line and we’ll talk about your individual wishes for a custom module.

Multi Image Support

In pixolution flow 3 we extended the text search engine Apache Solr with visual search capabilities, but the visual search was limited to only one image representing one document. pixolution flow 4 now supports multiple images that visually represent one document.

This opens up some awesome new application possibilities. For example, you can index a series of images or different versions of images to make them searchable. Long videos with changing content can also be fully indexed and searchable.

These application scenarios are possible now:

Close-Up Search

When an image contains more than one object, it is often not clear which part of the image is actually the important one. For example, the relevant object can be as small as a ring.

With pixolution flow 4 you can handle this and index close-up images as well. If a user in an online shop searches for a specific ring, pixolution flow 4 will find one of its close-ups and you can display the associated main image. This is a fantastic user experience.

Search for Products and 3D Objects

If you have product shots from different angles, you can index all product shots to achieve a more robust search quality when it comes to 3D objects like shoes or cars.

Video search

Instead of indexing just one master keyframe per video, you can now index several frames. This way videos with changing scenes and changing content can be made fully searchable.
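To illustrate how several images can represent one document, here is a minimal sketch that scores each document by its best-matching image. How pixolution flow actually combines multiple images is not described here, so the max-over-images rule, the toy vectors, and the `rank_documents` helper are assumptions.

```python
import numpy as np


def cosine(a, b):
    """Cosine similarity between two feature vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def rank_documents(query_vec, documents):
    """Rank documents by their best-matching image (max over all images of a document)."""
    scored = []
    for doc_id, image_vecs in documents.items():
        best = max(cosine(query_vec, v) for v in image_vecs)
        scored.append((doc_id, best))
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy index: the ring product has a main shot and a close-up, the shoe only one image.
docs = {
    "ring-product": [np.array([0.2, 0.9]), np.array([1.0, 0.1])],
    "shoe-product": [np.array([0.1, 1.0])],
}
ranking = rank_documents(np.array([1.0, 0.0]), docs)
print(ranking[0][0])
```

With this rule a query that resembles only the close-up still surfaces the whole product, and the same idea carries over to multiple product angles or video frames.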

We have put a lot of hard work into our new product version, and we can’t wait to see it in action. Let’s have a chat if you want to learn more about it or visit our API docs. We’d love to hear from you!

Originally published on https://pixolution.org/blog.

pixolution flow 4 — Our New Visual Search Engine was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

GPT-3 a giant step for Deep Learning and NLP?

Originally from KDnuggets https://ift.tt/3f7TL8q

5 Essential Papers on Sentiment Analysis

Originally from KDnuggets https://ift.tt/2MK5NZu

Nitpicking Machine Learning Technical Debt

Originally from KDnuggets https://ift.tt/2YbBWhH

Natural Language Processing with Python: The Free eBook

Originally from KDnuggets https://ift.tt/2YbSAxV

Top Stories Jun 1-7: Dont Democratize Data Science; Deep Learning for Coders with fastai and PyTorch: The Free eBook

Originally from KDnuggets https://ift.tt/3h8QV4D

Introduction to RNN and LSTM(Part-1)

This article is the first in a series on RNNs and LSTMs. In this one, I’ll go through the basics of recurrent neural networks.

The only prerequisite for this article is some familiarity with neural networks.

What is a Recurrent Neural Network?

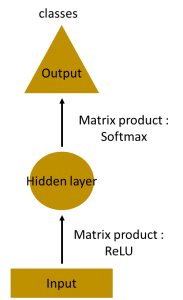

Many different types of raw data are available to us, and we have different types of neural networks to match them.

In a neural network, we have an input layer, a bunch of hidden layers, and an output layer. Note that these hidden layers are independent of each other.

That means each hidden layer has its own weights (W) and biases (b).

This is where the RNN comes into the picture: we use it when we want to keep previous information around while processing new information. This previous information is called memory. In this network, each part of the data is passed through the same set of parameters. It basically makes use of the sequential nature of the data at hand.

Because the parameters are shared, the complexity of assigning them is greatly reduced.

Where should we use this awesome network???

Well, since the main speciality of the recurrent network is its memory, it works really well on sequential data.

Data such as text and audio counts as sequential data. For example, in language processing, to predict the next word, the network should have some knowledge of the previous words. The same goes for processing an audio spectrogram.

How does it work?

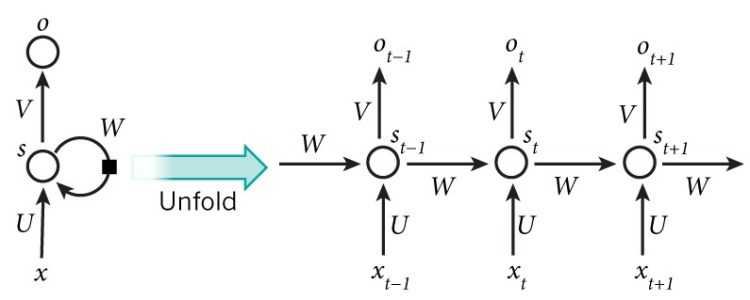

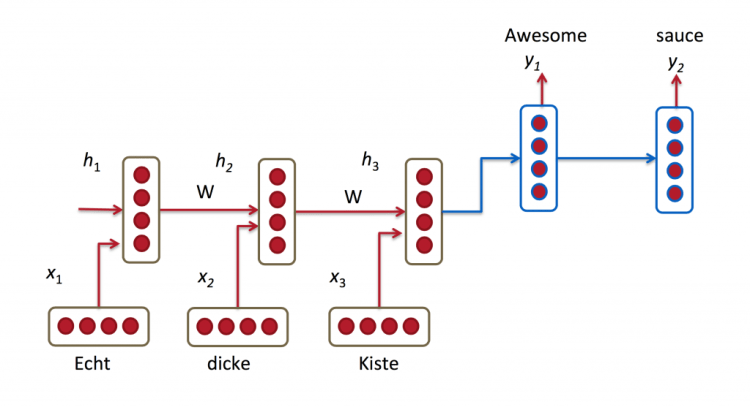

The figure above shows an unrolled recurrent neural network. In this context, unrolling simply means laying out one copy of the network for each time a part of the data is passed through it.

For example, if we feed a sentence to the input, the length of the unrolled network equals the number of words in that sentence, that is, one layer for each word. In that way, the network can keep track of the sequence.

- x is the part of the data applied to the network at each step. By part of the data, I mean a single word.

- o is the output at each step. Suppose we are using this network to predict the next word; then the output will be a vector of probabilities over the vocabulary. Depending on the task, it is not necessary to produce an output at every intermediate step.

- s is the memory that is passed on to the next step. It can be written as s(t) = f(U * x(t) + W * s(t-1)). The function f commonly used for hidden layers is either tanh or ReLU. Keep in mind that the network cannot hold on to all the information from every previous time step.
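The three quantities above can be put together in a short NumPy sketch of the unrolled forward pass. It uses tanh for f and follows s(t) = f(U * x(t) + W * s(t-1)). The output matrix V, the softmax output, the one-hot word encoding, and the random weights are assumptions added so that the example runs; they are not fixed by the description above.

```python
import numpy as np


def softmax(z):
    """Turn raw scores into probabilities that sum to one."""
    e = np.exp(z - z.max())
    return e / e.sum()


def rnn_forward(xs, U, W, V, s0=None):
    """Unrolled forward pass: s(t) = tanh(U x(t) + W s(t-1)), o(t) = softmax(V s(t))."""
    s = np.zeros(W.shape[0]) if s0 is None else s0
    states, outputs = [], []
    for x in xs:                      # one step per word, same U, W, V every time
        s = np.tanh(U @ x + W @ s)    # memory carried over to the next step
        states.append(s)
        outputs.append(softmax(V @ s))
    return states, outputs


rng = np.random.default_rng(0)
vocab, hidden = 5, 4                  # toy sizes chosen for the example
U = rng.normal(scale=0.1, size=(hidden, vocab))
W = rng.normal(scale=0.1, size=(hidden, hidden))
V = rng.normal(scale=0.1, size=(vocab, hidden))

sentence = [0, 3, 1]                  # word indices of a three-word sentence
xs = [np.eye(vocab)[i] for i in sentence]
states, outputs = rnn_forward(xs, U, W, V)
print(len(states), outputs[-1].shape)
```

Note that the loop reuses the same three matrices at every step, which is exactly the parameter sharing that distinguishes an RNN from a stack of independent layers.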

Training

Training a recurrent network is similar to training a regular neural network, with a slight difference in the backpropagation algorithm. Since the same set of parameters is used at every time step, we have to backpropagate through time.

This is known as backpropagation through time (BPTT). I will go deeper into it in later articles.
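Until then, here is a minimal sketch of the idea for a tiny tanh RNN. To keep it short it makes several assumptions that are not part of the article: the loss is a squared error on the final hidden state only, there is no output layer, and the weights and inputs are random. The point is only to show that the gradients for the shared U and W are summed over all time steps while the error is passed backwards.

```python
import numpy as np


def forward(xs, U, W):
    """Return all hidden states, with the zero initial state at index 0."""
    states = [np.zeros(W.shape[0])]
    for x in xs:
        states.append(np.tanh(U @ x + W @ states[-1]))
    return states


def loss_fn(xs, target, U, W):
    """Squared error on the final hidden state (chosen only to keep the sketch small)."""
    return 0.5 * np.sum((forward(xs, U, W)[-1] - target) ** 2)


def bptt(xs, target, U, W):
    """Gradients of the loss w.r.t. the shared U and W, accumulated over all steps."""
    states = forward(xs, U, W)
    dU, dW = np.zeros_like(U), np.zeros_like(W)
    ds = states[-1] - target                     # dL/ds at the last step
    for t in range(len(xs), 0, -1):              # walk backwards through time
        da = ds * (1.0 - states[t] ** 2)         # derivative through tanh
        dU += np.outer(da, xs[t - 1])            # same U at every step, so gradients add up
        dW += np.outer(da, states[t - 1])        # same W at every step
        ds = W.T @ da                            # pass the error to the previous step
    return dU, dW


rng = np.random.default_rng(1)
U = rng.normal(scale=0.5, size=(3, 2))
W = rng.normal(scale=0.5, size=(3, 3))
xs = [rng.normal(size=2) for _ in range(4)]
target = np.array([0.5, -0.5, 0.0])
dU, dW = bptt(xs, target, U, W)
print(dU.shape, dW.shape)
```

A quick way to gain confidence in such a routine is to compare one entry of `dW` against a finite-difference estimate of `loss_fn`.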

Applications

Recurrent neural networks are heavily used in the natural language processing domain, along with speech recognition. Multiple open source modules devoted to language processing have been created in various programming languages. Anyone with the will can easily create something and use it on various platforms.

Now I’m going to list the major applications of RNNs.

Translation

You have probably stumbled across a foreign sentence and translated it using Google Translate; behind the scenes, an RNN is at work.

For a given sequence of words in a particular language, each token passes through a pipeline architecture, and at the end we obtain the translated tokens in the appropriate order.

Speech Recognition

Alexa and Siri are able to understand your voice because of the complex speech recognition systems behind them.

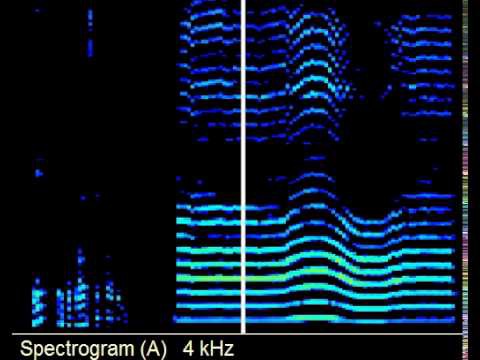

Our voice is converted into a spectrogram, and the system makes sense of the highs and lows in frequency to understand it and generate a reply. Amazing, isn’t it!?
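For a feel of what such a spectrogram is, the sketch below computes one with plain NumPy by cutting the signal into overlapping windowed frames and taking the magnitude of each frame's Fourier transform. The sampling rate, the frame sizes, and the synthetic 1 kHz tone used in place of a recorded voice are assumptions made for the example; production speech systems use more elaborate features.

```python
import numpy as np


def spectrogram(signal, frame_len=256, hop=128):
    """Magnitude spectrogram: rows are time frames, columns are frequency bins."""
    window = np.hanning(frame_len)
    frames = [
        signal[start:start + frame_len] * window
        for start in range(0, len(signal) - frame_len + 1, hop)
    ]
    return np.abs(np.fft.rfft(np.array(frames), axis=1))


sample_rate = 8000                                   # assumed sampling rate in Hz
t = np.arange(sample_rate) / sample_rate             # one second of audio
tone = np.sin(2 * np.pi * 1000 * t)                  # synthetic 1 kHz tone instead of a voice
spec = spectrogram(tone)
peak_bin = int(spec.mean(axis=0).argmax())           # frequency bin with the most energy
print(spec.shape, peak_bin * sample_rate / 256)      # bin index converted to Hz
```

Each row of `spec` is one short slice of time, so a sequence model such as an RNN can read the rows one after another.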

Image Description

In this application, a recurrent neural network is used along with a convolutional network to generate a description of a particular image.

It works like this:

- A CNN is used to tag the different objects in the image.

- These tagged objects are then passed on to an RNN to generate an appropriate description.

The end result:

“two young girls are playing with lego toy.”

Thank You for taking the time to read this article.

Have a fantastic day 🙂

Introduction to RNN and LSTM(Part-1) was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Via https://becominghuman.ai/introduction-to-rnn-and-lstm-part-1-b6a5934a791c?source=rss—-5e5bef33608a—4

source https://365datascience.weebly.com/the-best-data-science-blog-2020/introduction-to-rnn-and-lstmpart-1