According to a LinkedIn workforce report published in 2018, data scientist roles in the US had grown by 500 percent since 2014, while machine learning engineer roles had increased by 1,200 percent. Mobile devices have become data factories, pumping out massive amounts of data daily; consequently, the AI and analytics skills required to harness this data have grown by a factor of nearly 4.5 since 2013.

According to an IBM Marketing Cloud study, 90% of the data on the internet today has been created since 2016. A Seagate publication also predicts that worldwide data creation will grow to 163 zettabytes (ZB) by 2025. That’s ten times the amount of data produced in 2017, and a plethora of embedded devices will drive this growth.

Top organisations are competing for the best AI talent in the market. In 2014, Google acquired DeepMind, a British AI start-up, purportedly for some $600 million. Around the same time, Facebook started an AI lab and hired an academic from New York University, Yann LeCun, to oversee it. By some estimates, Facebook and Google alone employ 80 percent of the machine learning PhDs coming into the market. As industries turn to big data, the result has been a global shortage of AI talent.

“I cannot even hold onto my grad students,” says Pedro Domingos, a professor at the University of Washington who specialises in machine learning. “Companies are trying to hire them away before they graduate.”

There are concerns that AI expertise could become disproportionately concentrated in a few private-sector firms such as Google, which now leads the field. Although these private companies publish some of their research as open source, many profitable findings are not shared. To avoid the threat of any single firm having too much influence over the future of AI, several tech executives, including Tesla’s Elon Musk, pledged to invest over $1 billion in a not-for-profit initiative, OpenAI, which will make all its research public.

The mission of OpenAI is to ensure AI’s benefits are as widely and evenly distributed as possible.

The extra money on offer in AI has drawn new students into the field; college students are rushing in record numbers to study AI-related subjects, according to the AI Index 2019 Annual Report. Enrollment in Stanford’s “Introduction to Artificial Intelligence” course grew fivefold between 2012 and 2018, according to the report, and enrollment in the “Introduction to Machine Learning” course at the University of Illinois grew twelvefold between 2010 and 2018.

Data and analytics are a critical component of an organisation’s digital transformation. Studies conducted by Indeed’s Hiring Lab showed an overall increase of 256% in data science job openings since 2013, with a rise of 31% year on year.

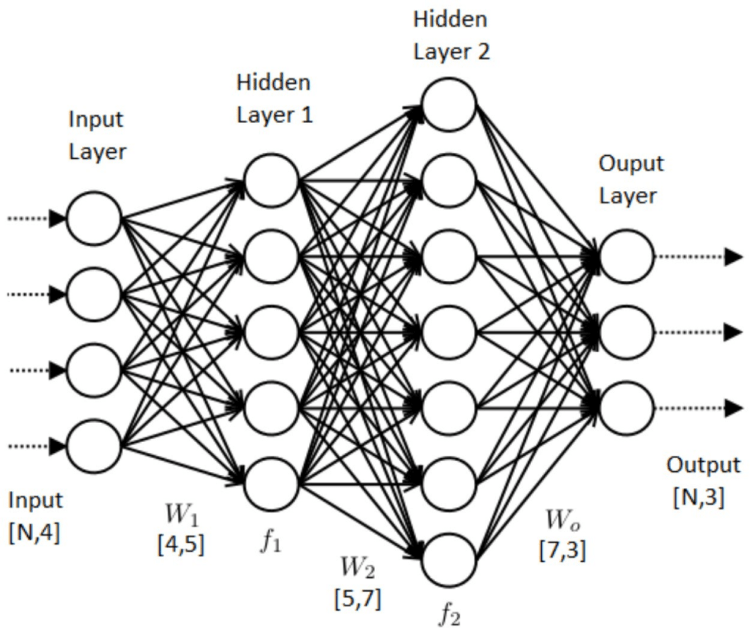

While many individuals have job titles related to AI, many are unskilled at machine learning and AI. To train more aspiring data scientists, boot camps, massive open online courses (MOOCs), and certificates have grown in popularity and availability. Many of these boot camps are available online. Coursera alone has more than four specialisations in data science taught by seasoned experts and researchers in the field; edX, DataCamp, and Udemy also offer impactful training, to name a few.

To address the overall demand for AI training, the governments of powerful nations have begun to take major steps. For instance, China has made AI a central pillar of its thirteenth five-year plan and is investing massively in AI research. The Chinese Ministry of Education has drafted its own “AI Innovation Action Plan for Colleges and Universities,” calling for 50 world-class AI textbooks, 50 national-level online AI courses, and 50 AI research centres to be established by 2020. Many countries are following similar programs, with China and the US leading the way.

Conclusion

We have entered a transition period, and organisations everywhere are looking to retrain their existing workers and recruit skilled AI graduates to help align their businesses with the ongoing digital transformation. The demand for AI talent will not plummet anytime soon; we are in the data generation. How is your organisation implementing AI solutions, and what are your thoughts on the skills shortage?

The Battle for AI Talent was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Via https://becominghuman.ai/the-battle-for-ai-talent-e938f4082f94?source=rss—-5e5bef33608a—4

source https://365datascience.weebly.com/the-best-data-science-blog-2020/the-battle-for-ai-talent