Originally from KDnuggets https://ift.tt/36RzvFb

Forecasting Stories 4: Time-series too, Causal too

Originally from KDnuggets https://ift.tt/36Qr50B

Machine Learning for Societal Advancement

Society is changing at rates which can seem fantastical at times. Merely years ago we were blown away by the advancements in communication…

Continue reading on Becoming Human: Artificial Intelligence Magazine »

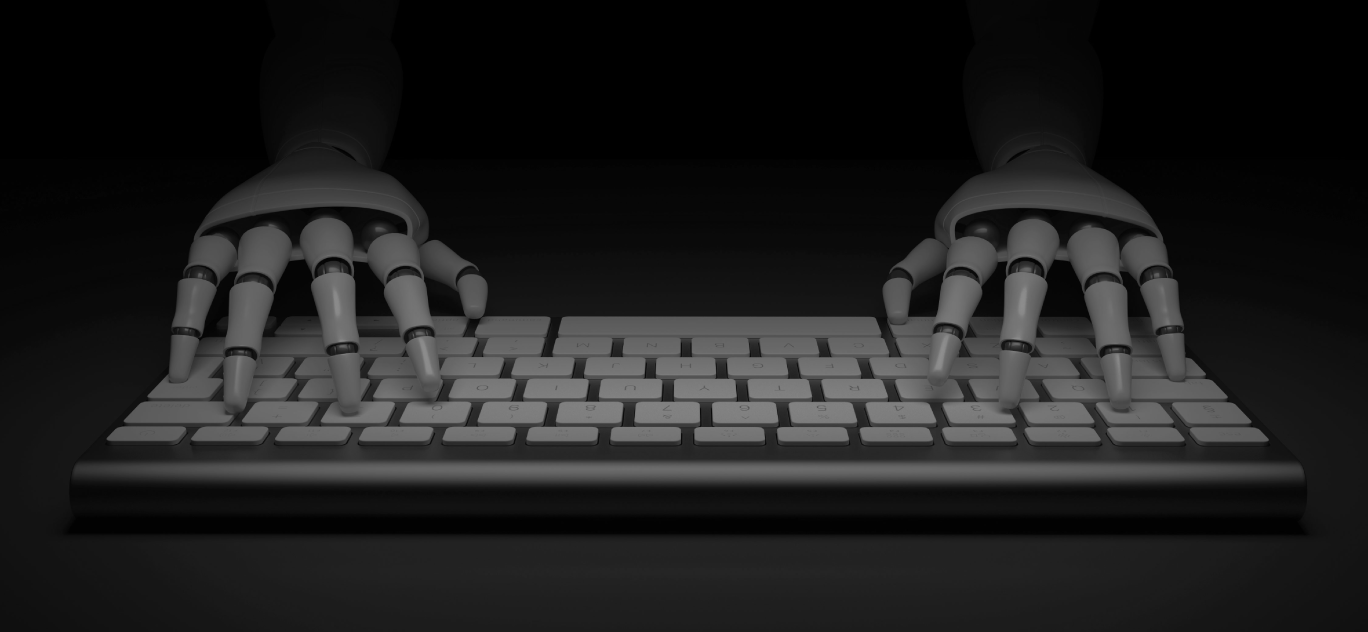

This Entire Article Was Written by OpenAI’s GPT-2

Disclaimer: The following content was generated by OpenAI’s GPT-2. All headings are prompts fed to the system. While some of the…

Continue reading on Becoming Human: Artificial Intelligence Magazine »

The Key To Relevance In The Next Decade

We are in the midst of a massive disruption on a scale never seen before. Digital transformation is fiercely taking shape, and many businesses are striving for a seat at the table. The confluence of accelerating technologies like elastic cloud computing, big data, AI and IoT is why digital transformation has been one of the biggest buzzwords of the past few years. When you google “digital transformation”, you are likely to get results in the millions.

Before I continue, it is essential to make a crucial distinction between digitisation and digital transformation. Digitisation is using digital tools and technologies to improve the way a business is run without really altering its fundamental modus operandi; digital transformation, however, helps you move gracefully from one way of working to an entirely new one. Merely investing in digital technology to digitise existing processes is not enough to transform an industry; digital transformation requires revolutionary changes to its core corporate processes and parts.

Digital transformation affects the way businesses think and act.

The way to maintain relevance in today’s business world is to transform, and early adopters are likely to reap the most substantial benefits. The companies that fail to innovate and digitally transform are often afraid of risk; they would rather stick with what is proven.

When Apple introduced the iPhone in 2007, Nokia did not see the need to innovate. It’s clear which company is thriving today. Much as in evolution, periods of economic stability can be suddenly disrupted with little forewarning, fundamentally changing the business landscape. We cannot predict precisely how and where the impacts will fall, but we know the scale of change will be enormous.

Listen to the words of Nike’s CEO Mark Parker: “Fueled by a transformation of our business; we are attacking growth opportunities through innovation, speed and digital to accelerate long-term sustainable and profitable growth.”

If large companies don’t evolve, they will be replaced by smaller ones. Size has never been a guarantee of longevity. More than half of the companies that comprised the Fortune 500 in 2000 are no longer on the list today.

John Chambers delivered a keynote as he left his two-decade-long role as Cisco CEO: “Forty percent of businesses in this room, unfortunately, will not exist in a meaningful way in 10 years. If I’m not making you sweat, I should be.”

The gap between the companies that have embraced digital transformation and those that have not is already wide and is expected to increase exponentially. Companies can now take advantage of elastic computing, Big Data, AI and IoT to facilitate their digital transformation journey.

The drive for industries to digitally transform cannot be overemphasised: a company that hesitates will find that, by the time it has adapted to today’s digital environment, that environment has already changed significantly.

The Future of Digital Transformation

Digital transformation is expected to make strides in business and society at large. These new technologies would boost the economy, improve healthcare delivery and bring greater efficiency to our daily lives. Some of the ways digital transformation would improve human lives include:

- Healthcare: Diseases are expected to be detected and diagnosed earlier, and robots would assist surgeons in performing surgeries with high precision. These advances would democratise healthcare and dramatically reduce the cost of care

- Automotive Industry: Self-driving cars would help lessen casualties caused by distractions on the road, drunk driving and so on

- Customer Experience: Conversational AI or intelligent chatbots would be available to provide customer care 24/7, freeing your support team to focus on the creative aspects of their work

- Manufacturing: 3D printing would allow for mass and inexpensive customisation with low or no distribution costs

- Resource Management: Resources would be matched to needs; think of how elastic cloud computing reduces the cost of building on-premises IT infrastructure

I have listed just a few of the applications, but the list is endless as we see AI used in industries where it was never anticipated. Digital transformation is not just for large corporations; even smaller firms can increase productivity by embracing these technologies.

Five years from now, we expect 35 percent of essential workforce skills to have changed. Companies must start training their workforce in the skills that will be needed in the digital age to complement the work of machines. The World Economic Forum wrote in an influential 2016 paper on digital transformation: “Robotics and artificial intelligent systems will not be used to replace human tasks, but to augment their skills.”

As stated earlier, the building blocks to enable digital transformation are available and widely accessible. There is simply no time to delay; established companies must start reengineering their business processes or risk losing ground to burgeoning startups.

The Key To Relevance In The Next Decade was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Introduction to Pandas for Data Science

Originally from KDnuggets https://ift.tt/2TTlG3J

Deep Learning for Coders with fastai and PyTorch: The Free eBook

Originally from KDnuggets https://ift.tt/3eBiBx4

Top Stories May 25-31: Python For Everybody: The Free eBook; Interactive Machine Learning Experiments

Originally from KDnuggets https://ift.tt/3gDtJeR

Interview with Nikola Pulev, Instructor at 365 Data Science

Nikola Pulev, Instructor at 365 Data Science

Hi Nikola, could you briefly introduce yourself to our readers?

Hi, my name is Nikola and I am a Cambridge Physics graduate turned data scientist.

A man of few words! That’s the epitome of a brief introduction that sparks an interviewer’s interest. So, what do you do at the company and what are the projects you’ve worked on so far?

Well, I am part of the 365 Data Science Team in the role of a course creator and instructor. Course creation is, in general, a collaborative effort. There are lots of people involved in the production of each course. Generally, my part is to do continuous in-depth research on the topic and use my expertise to write the content of the course.

So far, I have developed the Web Scraping and API Fundamentals course along with Andrew Treadway. This was a very exciting project for me, as it was my first course for the company.

The Web Scraping course was warmly received by our students and we couldn’t be happier about it. That said, what are you working on right now, Nikola?

Well, the project I am currently working on is a continuation of our Deep Learning course, which delves into Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs). This is an extensive topic, though, and the project is still in development.

While working on this awesome new addition to the 365 Data Science program, how do you help your students through the Web Scraping learning process?

Although our courses are online, the students have the opportunity to ask all the questions they have in the Q&A Hub. I do my best to answer those questions as thoroughly as possible. However, in my opinion, a good teacher should aim to answer the question a student may have before the student themselves asks it. So, in my courses, I constantly try to detect the concepts that may be confusing and clarify them in more detail.

Speaking of details, I think it’s time to tell us a bit more about your academic background and experience…

So, I graduated from the University of Cambridge as a Natural Scientist, although I mainly specialized in Physics. I have always had a passion for mathematics and science in general, as well as for coding. Not necessarily as a programmer, but I do like to create little programs to solve problems, whether to automate some tasks or to create simulations.

So, how do you apply your skillset in your position now?

My years at university, and Physics in general, have taught me to think analytically and to research topics extensively. Also, I have a circle of friends who are into science as well, and we frequently exchange knowledge. So, in that regard, I am practicing both my analytical and my teaching skills.

Nikola, the content you create surely reflects your remarkable academic background. But how did you choose data science and why did you choose 365 Data Science in particular?

When I graduated, I wanted to do something that would include mathematics and coding and that could be applied in practice. Data science seemed to provide a good mixture of those. But that was not the only factor in my decision.

365 Data Science was the main factor – they provided me with the opportunity to contribute to society in a positive manner. And teaching makes me feel accomplished in so many ways!

As for the team itself, they are very professional and a great group of people!

I couldn’t agree with you more on that! In fact, your teammates were eager to ask you some questions themselves, so the next few come directly from them. Are you up for the challenge of answering those?

Absolutely. Shoot!

Which one do you prefer: 80% or 200% difficulty?

80% is for the weak.

Alright! If you had to battle 100 duck-sized whales or 1 whale-sized duck, which would you choose?

Obviously the 100 duck-sized whales – they don’t have teeth or beaks and they are only in water.

I can definitely see you surviving the Apocalypse with this answer, Nikola! So, if you suddenly became the ruler of the world, what would be the first thing you would do?

I would ban alarm clocks and make it so that there is no need to wake up early (I have to take care of my fellow night owls after all). Then, I would wake up to the sound of my alarm and realize it was all just a dream – too good to be true…

I think you just scored some major points with all 365 night owls (which is about 95% of the team). Now, before I leave you to your students’ Q&A, let’s wrap things up with our signature final question: Is there a nerdy thing you’d like to share with the world?

Recently, a friend of mine dropped the bombshell that vectors can be considered a generalization of tensors and not the opposite, which is mind-boggling. Normally, I wouldn’t go around scaring people with this info, but since you asked…

Oh, wow, I believe our readers will demand a dedicated blog post on this one!

Thanks for taking the time for this interview, Nikola. We wish you tons of success with your new project and all upcoming 365 Data Science courses!

The post Interview with Nikola Pulev, Instructor at 365 Data Science appeared first on 365 Data Science.

from 365 Data Science https://ift.tt/3dyhEoY

Why the AI revolution now? Because of 6 key factors.

About: Data-Driven Science (DDS) provides training for people building a career in Artificial Intelligence (AI). Follow us on Twitter.

In recent years, AI has taken off and become a topic that frequently makes it into the news. But why is that?

AI research started in the mid-twentieth century, when mathematician Alan Turing asked the question “Can machines think?” in a famous 1950 paper. However, it was not until the 21st century that Artificial Intelligence began shaping real-world applications that impact billions of people and most industries across the globe.

In this article, we will explore the reasons behind the meteoric rise of AI. Essentially, it boils down to 6 key factors that are creating an incredibly powerful environment that enables AI researchers & practitioners to build prediction systems, from recommending movies (Netflix) to enabling self-driving cars (Waymo) to powering speech recognition (Alexa).

The following 6 factors are powering the AI Revolution:

- Big Data

- Processing Power

- Connected Globe

- Open-Source Software

- Improved Algorithms

- Accelerating Returns

Let’s dive into each of those and get to the details of why exactly they are making a difference and how they are influencing the rapid progress in AI.

Big Data

First things first. Here’s a formal definition of Big Data from research and advisory company Gartner:

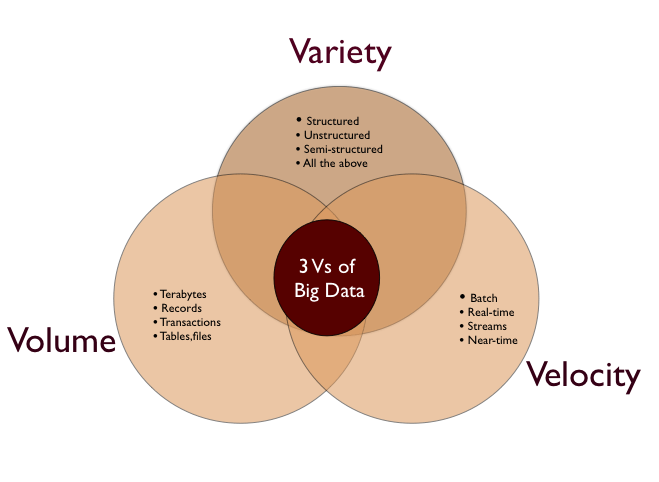

“Big data is high-volume, high-velocity, and/or high-variety information assets that demand cost-effective, innovative forms of information processing that enable enhanced insight, decision making, and process automation.”

Put simply, computers, the internet, smartphones, and other devices have generated, and given us access to, vast amounts of data. And the amount is going to grow rapidly in the future as trillions of sensors are deployed in appliances, packages, clothing, autonomous vehicles, and elsewhere.

Data is becoming so large and complex that it is difficult or almost impossible to process it using traditional methods. This is where Machine Learning and Deep Learning come into play as AI-assisted processing of data allows us to better discover historical patterns, predict more efficiently, make more effective recommendations, and much more.

Also, as a side note: data can be broadly put into two buckets: (1) structured and (2) unstructured data. Structured data is organized and formatted so that it’s easily searchable in relational databases. In contrast, unstructured data, such as videos or images, has no predefined format, making it much more difficult to collect, process, and analyze.
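To make the distinction concrete, here is a minimal sketch using Python’s built-in sqlite3 module: structured records with fixed columns can be filtered with a single SQL query, while the same facts buried in free text first need hand-written parsing. The table name customers, its columns, and the sample text are hypothetical, chosen only for illustration.

```python
import re
import sqlite3

# Structured data: every record has the same, predefined columns (hypothetical sample).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (name TEXT, country TEXT, age INTEGER)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [("Ana", "PT", 34), ("Ben", "UK", 27), ("Chen", "UK", 41)],
)

# Because the schema is known, one declarative query answers the question.
uk_over_30 = conn.execute(
    "SELECT name FROM customers WHERE country = 'UK' AND age > 30"
).fetchall()
print(uk_over_30)  # [('Chen',)]

# Unstructured data: the same facts in free text have no schema to query.
notes = "Spoke with Chen (41, based in the UK) and Ben, a 27-year-old from the UK."

# Structure has to be extracted by hand first; this regex is brittle and
# only works for phrasing like the sentence above.
ages = [int(a) for a in re.findall(r"\b(\d{1,3})\b", notes)]
print(ages)  # [41, 27]
```

The SQL query keeps working as rows are added, whereas the regex would need rewriting for every new way people phrase things, which is one reason unstructured data is harder to process.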

One framework that is often used to describe Big Data is The Three Vs which stands for Volume, Velocity, and Variety.

Volume

The amount of data matters. With big data, you’ll have to process high volumes of low-density, unstructured data. This can be data of unknown value, such as Twitter data feeds, clickstreams on a webpage or a mobile app, or sensor-enabled equipment. For some organizations, this might be tens of terabytes of data. For others, it may be hundreds of petabytes.

Velocity

Velocity is the fast rate at which data is received and (perhaps) acted on. The highest-velocity data normally streams directly into memory rather than being written to disk. Some internet-enabled smart products operate in real time or near real time and will require real-time evaluation and action.
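The following minimal Python sketch illustrates the in-memory, act-as-it-arrives pattern described above: a rolling mean over a fixed window of recent sensor readings, updated on every event. The class name RollingMean, the window size, and the 20% alert threshold are illustrative assumptions, not any specific product’s API.

```python
from collections import deque


class RollingMean:
    """Keeps only the last `window` readings in memory, so each new event is
    handled in constant time without writing anything to disk."""

    def __init__(self, window: int) -> None:
        self.values = deque(maxlen=window)  # the oldest value drops out automatically
        self.total = 0.0

    def update(self, value: float) -> float:
        if len(self.values) == self.values.maxlen:
            self.total -= self.values[0]  # subtract the value about to be evicted
        self.values.append(value)
        self.total += value
        return self.total / len(self.values)


# Simulated sensor stream; in practice events would arrive continuously.
stream = [20.0, 21.0, 22.0, 35.0, 23.0]
monitor = RollingMean(window=3)
alerts = []
for i, reading in enumerate(stream):
    mean = monitor.update(reading)
    # Act on each event as it arrives: flag readings well above the recent mean.
    if reading > 1.2 * mean:
        alerts.append(i)
print(alerts)  # [3]
```

Only the spike at index 3 is flagged, and memory use stays fixed at three readings no matter how long the stream runs.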

Variety

Variety refers to the many types of data that are available. Traditional data types were structured and fit neatly in a relational database. With the rise of big data, data increasingly comes in new, unstructured types. Unstructured and semi-structured data types, such as text, audio, and video, require additional preprocessing to derive meaning and support metadata.
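As a small illustration of that preprocessing step, here is a Python sketch, using only the standard json module, that flattens semi-structured JSON records (nested objects, optional fields) into one tabular schema. The sample records and the dotted-key naming convention are assumptions made for the example.

```python
import json

# Semi-structured input: records share some fields, but nesting and optional
# keys vary from one record to the next (hypothetical sample data).
raw = """
[
  {"id": 1, "user": {"name": "Ana", "country": "PT"}, "text": "Great product!"},
  {"id": 2, "user": {"name": "Ben"}, "text": "Arrived late", "tags": ["shipping"]}
]
"""


def flatten(record, prefix=""):
    """Turn nested dicts into a flat dict with dotted keys, e.g. 'user.name'."""
    flat = {}
    for key, value in record.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{name}."))
        else:
            flat[name] = value
    return flat


rows = [flatten(r) for r in json.loads(raw)]
# Use the union of all keys as the schema; fields a record lacks become None.
columns = sorted({key for row in rows for key in row})
table = [[row.get(col) for col in columns] for row in rows]
print(columns)  # ['id', 'tags', 'text', 'user.country', 'user.name']
for line in table:
    print(line)
```

After this step the data fits a relational table, but note that the free-text field itself is still unstructured and would need further processing to derive meaning.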

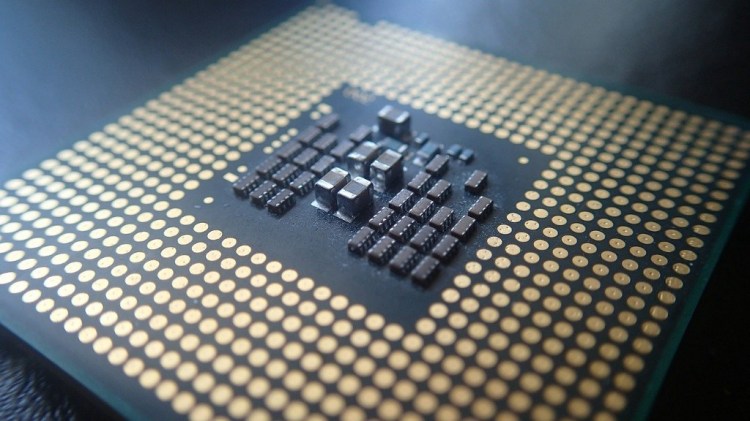

Processing Power

Gordon Moore, the co-founder of Intel Corporation, formulated Moore’s law, the observation that the number of transistors on a microchip doubles roughly every two years. This has driven the steady increase in processor computing power over the last 50+ years. However, new technologies have emerged that accelerate compute even further, specifically for AI-related applications.
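The doubling rule can be written as N(t) = N0 × 2^(t/2), where N0 is the starting transistor count and t is measured in years. The short Python sketch below computes the resulting growth factor. It is an idealised illustration that assumes a perfectly constant two-year doubling period, which real chips have only approximately followed.

```python
def moores_law_factor(years: float, doubling_period: float = 2.0) -> float:
    """Growth factor in transistor count if it doubles every `doubling_period` years."""
    return 2 ** (years / doubling_period)


# 50 years at one doubling every two years is 25 doublings.
print(moores_law_factor(50))  # 33554432.0
print(moores_law_factor(10))  # 32.0
```

Twenty-five doublings amount to a factor of roughly 33.5 million, which conveys how much raw compute has grown over half a century.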

One of these new technologies is the Graphics Processing Unit (GPU). A GPU can have hundreds or even thousands more cores than a Central Processing Unit (CPU). Its strength is performing many computations simultaneously, a capability that until recently was mainly used for graphics-intensive computer games. But running many computations in parallel is exactly what Deep Learning requires to process large data sets and improve prediction quality.
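To see why this workload suits GPUs, consider a single neural-network layer: every output is a separate dot product that does not depend on the others. The Python sketch below, with made-up weights, computes the same outputs sequentially and with a thread pool. It only demonstrates that the computations are independent; it says nothing about actual GPU performance.

```python
from concurrent.futures import ThreadPoolExecutor

# A tiny "layer" with hypothetical weights: each output neuron is the dot
# product of the shared input with one weight row.
weights = [
    [0.5, -1.0, 2.0],
    [1.5, 0.0, -0.5],
    [0.0, 2.0, 1.0],
    [-1.0, 1.0, 1.0],
]
inputs = [2.0, 1.0, 0.5]


def neuron_output(row):
    # Depends only on its own weight row and the shared input, not on other neurons.
    return sum(w * x for w, x in zip(row, inputs))


# Sequential: one output after another.
sequential = [neuron_output(row) for row in weights]

# Parallel: because the outputs are independent, they can be computed at the
# same time. Python threads only illustrate the structure here (the GIL prevents
# a real speedup for this kind of work); a GPU runs thousands of such
# computations simultaneously in hardware.
with ThreadPoolExecutor(max_workers=4) as pool:
    parallel = list(pool.map(neuron_output, weights))

print(sequential)  # [1.0, 2.75, 2.5, -0.5]
print(parallel == sequential)  # True
```

Real deep learning frameworks express the whole layer as one matrix multiplication and hand it to the GPU, which exploits exactly this independence.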

Nvidia is the market leader in GPUs. Check their website if you’re interested in learning more about the products and AI solutions.

The second technology is Cloud Computing. AI researchers & practitioners don’t have to rely on the computing power of their local machines, but can actually “outsource” the processing of their models to cloud services such as AWS, Google Cloud, Microsoft Azure, or IBM Watson.

Of course, those services don’t come for free and can actually become fairly expensive, depending on the complexity of the Deep Learning model that needs to be trained. Nonetheless, individuals and companies with the budget now have the option to access massive processing power that simply was not available in the past. This gives AI a huge boost.

Lastly, a completely new class of dedicated processors, designed from the ground up for AI applications, is starting to emerge.

One example is the Tensor Processing Unit (TPU) developed by Google. These processors are designed to work particularly well with Google’s own TensorFlow software and significantly speed up neural network computation.

There are a large number of hardware startups pursuing the big opportunity of creating dedicated chips that accelerate AI. To list just a few: Graphcore, Cerebras, Hailo, Nuvia, and Groq. Check them out if you’re interested in these specialized processors. They could become game-changers for AI.

Connected Globe

Thanks to the internet and smartphones, humans are more connected than ever. Of course, this hyper-connectivity also comes with a few downsides, but overall, it has been a huge benefit to society and individuals. Access to information and knowledge through social media platforms and online communities around various topics is now available to everyone.

And the Connected Globe is supporting the rise of AI. Information about the latest research and applications spreads easily, and knowledge sharing is actively encouraged. Also, there are AI-related communities that develop open-source tools and share their work, so more people can benefit from everyone’s learnings and findings.

For example, Medium hosts many publications that are targeted at an AI audience, and a large number of individuals share helpful insights. One can also find amazing resources on Twitter posted by top AI researchers and practitioners who are making a big impact in the field. And, of course, there is GitHub: a massive community of software developers who share projects, code, and more.

In the end, everyone (even big tech companies like Google and Facebook) knows that AI is too complex to be solved by individuals or single companies. That insight encourages sharing and collaboration, which can be done much more effectively than ever before through interconnected communities.

Open-Source Software

Let’s start with a definition: “Open source software is software with source code that anyone can inspect, modify, and enhance.” For example, Linux is probably the best-known and most-used open source operating system, and it has had a huge influence on the acceptance and usage of open source software.

Open-source software and data are accelerating the development of AI tremendously. In particular, open-source Machine Learning standards and libraries such as scikit-learn or TensorFlow support the execution of complex tasks and let practitioners focus on conceptual problem solving instead of technical implementation. Overall, an open-source approach means less time spent on routine coding, increased industry standardization, and a wider application of emerging AI tools.

Check this article on Analytics Vidhya for an overview of 21 different open source Machine Learning tools and frameworks. They are grouped into the following five categories:

- Open Source Machine Learning Tools for Non-Programmers

- Machine Learning Model Deployment

- Big Data Open Source Tools

- Computer Vision, NLP, and Audio

- Reinforcement Learning

Improved Algorithms

AI researchers have made significant advances in algorithms and techniques that contribute to better predictions and completely new applications.

Deep Learning (DL) in particular, which uses neural networks with many layers, loosely inspired by the way the human brain processes information, has seen massive breakthroughs in the last few years. For example, Natural Language Processing (NLP) and Computer Vision (CV) are now largely powered by DL and used in many real-world applications, from search engines to autonomous vehicles.
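As a rough illustration of what “layers” means here, the following pure-Python sketch passes an input through one hidden layer with a ReLU non-linearity and then through an output layer. The weights are hypothetical and fixed by hand. In practice a framework such as TensorFlow would learn them from data, and a real network would have far more layers and units.

```python
def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def relu(values):
    """Non-linearity applied between layers; negative activations become 0."""
    return [max(0.0, v) for v in values]


def forward(x):
    # Hidden layer: 2 inputs -> 3 units (hypothetical, hand-picked weights).
    hidden = relu(dense(x, [[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]], [0.0, 0.0, -1.0]))
    # Output layer: 3 hidden units -> 1 output.
    return dense(hidden, [[1.0, 1.0, 1.0]], [0.5])


print(forward([2.0, 1.0]))  # [3.0]
```

Stacking more such layers lets a network build increasingly abstract features, which is where the “deep” in Deep Learning comes from.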

Another emerging area of research is Reinforcement Learning (RL), in which an AI agent learns by trial and error, guided by a reward function, with little or no initial input data. Google’s subsidiary DeepMind, one of the leading AI research companies, has so far demonstrated RL’s potential mainly in games. Its famous AlphaGo program beat top human Go player Lee Sedol in a match in 2016, something many people didn’t expect to happen so soon because of the game’s complexity.
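The trial-and-error loop can be illustrated with one of the simplest RL settings, a multi-armed bandit. In the Python sketch below (a toy that is far simpler than the methods behind AlphaGo), an epsilon-greedy agent starts with no data. It occasionally explores a random action and otherwise exploits the action with the best average reward so far, and it gradually discovers which action pays off most. The reward probabilities and the epsilon value of 0.1 are arbitrary assumptions.

```python
import random


def run_bandit(true_probs, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent: learns which action pays off best purely from rewards."""
    rng = random.Random(seed)
    n = len(true_probs)
    counts = [0] * n
    estimates = [0.0] * n  # running average reward per action
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n)  # explore: try a random action
        else:
            action = max(range(n), key=lambda a: estimates[a])  # exploit the best so far
        # The environment pays reward 1 with the action's hidden probability.
        reward = 1.0 if rng.random() < true_probs[action] else 0.0
        counts[action] += 1
        # Incremental update of the average reward for this action.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts


estimates, counts = run_bandit([0.2, 0.5, 0.8])
best = max(range(len(estimates)), key=lambda a: estimates[a])
print(best, [round(e, 2) for e in estimates])
```

The agent is never told which action is best. The reward signal alone steers it toward the third action, which it ends up choosing most of the time.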

There are many other AI research labs, such as OpenAI, Facebook AI, and those at universities, that keep pushing the envelope and constantly make new AI discoveries that trickle down to actual industry applications over time.

Accelerating Returns

Artificial Intelligence, Machine Learning, and Deep Learning are no longer mere theories and concepts; they boost companies’ competitive advantage and increase their productivity. Competitive pressures force companies to use AI systems in their products and services.

For example, most consumer companies leverage AI nowadays for personalization and recommendation. Think about Netflix or Amazon. And pharmaceutical companies such as Pfizer are now able to shorten the drug development process from a decade to just a few years using AI.
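The recommender systems used by companies like Netflix or Amazon are far more sophisticated, but the core idea of personalization can be sketched in a few lines of Python. The sketch finds users whose past ratings resemble yours (here via cosine similarity) and suggests what they liked that you have not seen yet. The users, titles, and ratings are hypothetical, and this user-based collaborative filtering is a textbook technique, not any particular company’s method.

```python
from math import sqrt

# Hypothetical ratings (1-5) that users gave to titles; a missing title means not watched.
ratings = {
    "alice": {"Drama A": 5, "Comedy B": 1, "Thriller C": 4},
    "bob": {"Drama A": 4, "Comedy B": 2, "Thriller C": 5, "Drama D": 5},
    "carol": {"Drama A": 1, "Comedy B": 5, "Comedy E": 4},
}


def cosine(u, v):
    """Cosine similarity computed over the titles both users have rated."""
    common = set(u) & set(v)
    if not common:
        return 0.0
    dot = sum(u[t] * v[t] for t in common)
    norm_u = sqrt(sum(u[t] ** 2 for t in common))
    norm_v = sqrt(sum(v[t] ** 2 for t in common))
    return dot / (norm_u * norm_v)


def recommend(user):
    """Score unseen titles by other users' ratings, weighted by their similarity to `user`."""
    scores = {}
    for other, their_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine(ratings[user], their_ratings)
        for title, rating in their_ratings.items():
            if title not in ratings[user]:
                scores[title] = scores.get(title, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)


print(recommend("alice"))  # ['Drama D', 'Comedy E']
```

Alice’s tastes closely match Bob’s, so the title Bob rated highly ranks first, ahead of the one suggested by the less similar Carol.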

These huge returns encourage companies to invest even more resources in R&D and in developing new applications that take advantage of Artificial Intelligence and improve it further: a self-reinforcing cycle.

Conclusion

We identified six factors that contribute to the AI revolution: Big Data, Processing Power, Connected Globe, Open-Source Software, Improved Algorithms, and Accelerating Returns.

Those factors are converging, and much more development and innovation will continue to happen, further accelerating the impact of AI.

Why the AI revolution now? Because of 6 key factors. was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.