I’m just trying to build up an intuition here.

What do we mean by evolution?

Evolution: this mysterious concept that allegedly started with the works of Charles Darwin, which is why so many people call it Darwinism. The tree of life, and that famous motto, “Survival of the fittest, not the best,” that echoes in our minds when we think of evolution. Monkeys as our furry cousins, chickens that evolved from T. rexes, and many more stories linked to this single word: “Evolution.” It’s like a perfect battlefield for science enthusiasts and religious fundamentalists, and it usually ends like “…you might be descended from chimps but not me…” [holy-spiritually drops the mic].

I know, I was there. I had my arguments with creationists, and I sat alone and dwelled on why something evolved the way it did, and why we didn’t grow wings and horns. For me, it all got serious with a personal renaissance that slowly started around 2009. I learned tiny pieces about evolution from my biopsychology textbooks, but around 2012 I became obsessed with this stuff and started actively searching for more information. The Internet helped a lot; all those YouTube videos and lectures really expanded my understanding of evolution. The next pieces fell into place after I enrolled in a graduate programme with a strong focus on animal behaviour and biology. I learned that evolution is not all about natural selection; it’s also about randomness, sexual selection and a bunch of other concepts that we’ll just skip here. When I was done there, I was pretty confident that I’d seen the light and finally knew how evolution works, but nope. I was almost there, but a crucial piece of information was misaligned. In this post, I want to share my current understanding of evolution using some Adobe Illustrator and results from my ongoing PhD project. You see, I’m not an evolutionary biologist, so this post probably won’t give you the most accurate explanation; what I want to do here is build up a visual intuition. The last “Eureka” happened when I saw bits of evolution with my own eyes and realised what this sentence really means: Survival of the fittest, not the best. So I want to do the same here.

Evolutionary Optimisation; The fittest

First of all, the motto is not from Darwin; Herbert Spencer coined it after reading Darwin’s book, but let’s not get distracted by who coined it. Back to the content: natural selection is one of the many ways that organisms evolve, and according to good old Wikipedia, it’s defined like this:

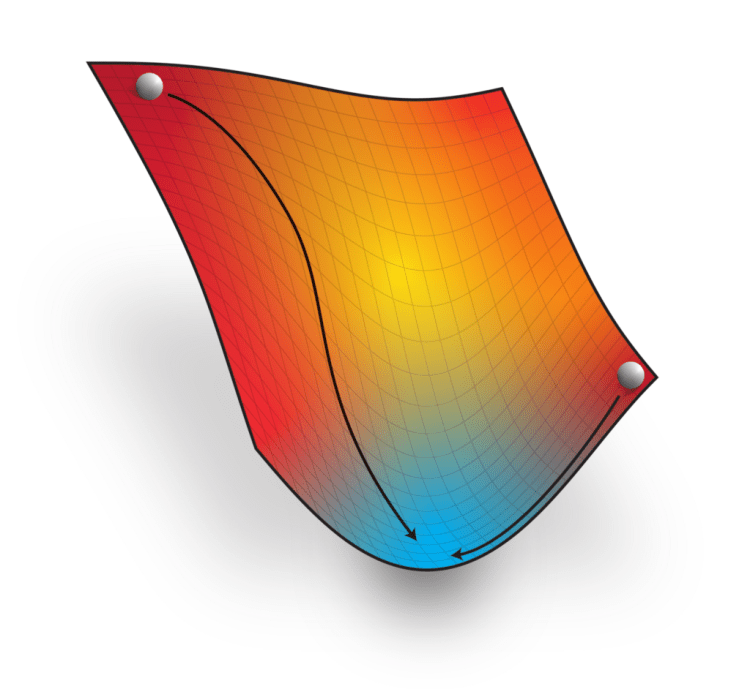

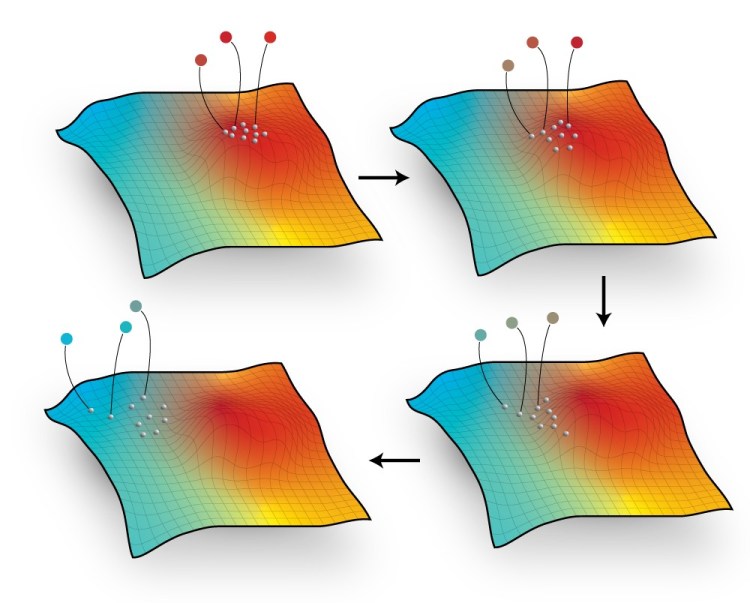

What the fuck, Wikipedia? diFfeRenTiaL sUrviVal aNd reProDuCtiOn of inDivIdUalS dUe to difFeReNcEs in pHeNoTyPe… that’s one of the reasons people can’t see the beauty in it. Let’s make it simpler using some common sense. Imagine you’re searching for gold and want to reach the X, which, as luck would have it, you know is located at the lowest point of the field. It’s night, by the way, so you can’t really see what’s around you. How should we reach it? Well, that doesn’t seem too terrible: I’d start by walking downhill until I reach a point where every other direction goes up. I’m probably there, but I can’t be sure since I don’t have a helicopter view or anything. To be sure, I start once more from a different point and see if I end up in the same lowest place. Now I dig and hope for the best. Let’s make it more abstract using balls and surfaces in the pictures below:

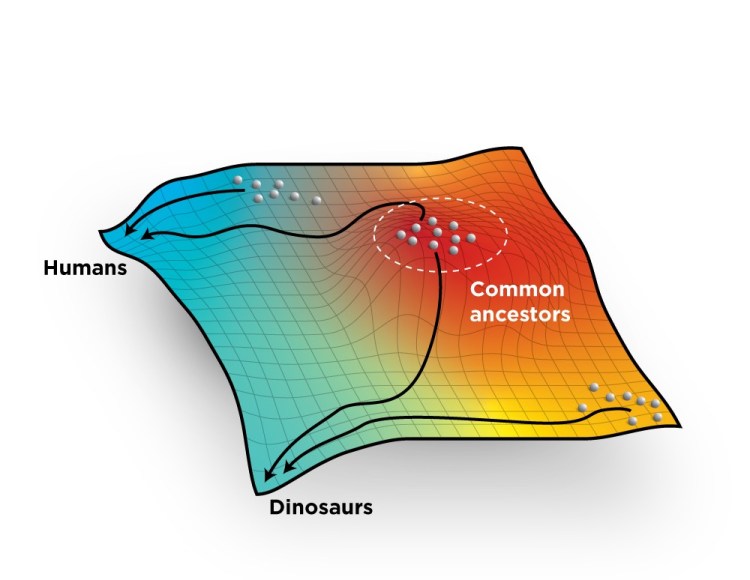

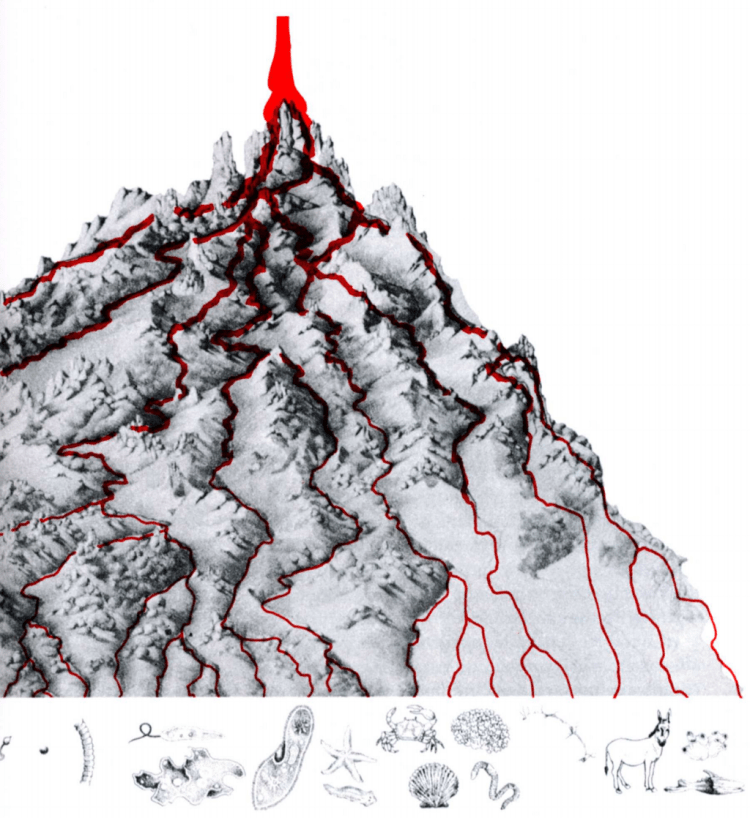
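The night-time gold hunt can be sketched in a few lines of Python. This is a minimal sketch under my own assumptions: `landscape` is a hypothetical one-dimensional field with several dips, `walk_downhill` takes small steps towards the lower neighbour until every direction goes up, and `descend_with_restarts` repeats the walk from several random starting points and keeps the lowest spot. The names, step size and field shape are illustrative choices, not part of any library.

```python
import math
import random


def landscape(x: float) -> float:
    """Hypothetical bumpy 1D field: a wide bowl with ripples, so it has several dips."""
    return 0.1 * x * x + math.sin(3 * x)


def walk_downhill(x: float, step: float = 0.01, max_steps: int = 10_000) -> float:
    """Step towards the lower neighbour until every direction goes up (a local minimum)."""
    for _ in range(max_steps):
        left, right = x - step, x + step
        lower_value, lower_x = min((landscape(left), left), (landscape(right), right))
        if lower_value >= landscape(x):
            break  # both neighbours are higher: stop and dig here
        x = lower_x
    return x


def descend_with_restarts(n_starts: int = 20, seed: int = 0) -> float:
    """Walk downhill from several random starting points and keep the lowest end point."""
    rng = random.Random(seed)
    end_points = [walk_downhill(rng.uniform(-10, 10)) for _ in range(n_starts)]
    return min(end_points, key=landscape)
```

Starting a single walk at `walk_downhill(5.0)` gets stuck in a dip far from the gold (a local minimum), which is exactly why trying again from other starting points helps.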

Technically, this is how many optimisation algorithms work to minimise the error of some fancy machine learning tools. Interestingly, a family of optimisation algorithms called “evolutionary algorithms” is inspired by how evolution works. Ok, now imagine the surface to be a mountain range full of hills; again, the goal is to find the lowest point because we want the gold, baby. You can do the same thing: keep walking downhill until you reach flat land whose surroundings are all higher, then dig and hope you’re in the right spot. That’ll take some time, since you have to try from a couple of other starting points too. Now imagine you’re doing the same, but this time you’ve gathered groups of people to help; they all start from random positions and move downhill step by step. You might all reach the same local minimum, but more likely each group will end up at a different low point. Now imagine the space you’re exploring is so vast that people need to settle in those locations like tribes, and after a while they develop their own languages and cultures, so that if you happened to visit them, they’d look pretty different. Ignoring the inner mechanisms of evolution, this is a good analogy for how it works. Here, let’s take a look:

Usually, in an evolutionary algorithm, we optimise whatever we want to optimise by using a population of points on the “error landscape”. The algorithm, in its essence, is pretty simple:

start from a random location, see which solution has the lowest error, start a new population from there, keep moving till you reach a local minimum.
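That loop can be sketched as a very small evolutionary algorithm. This is a minimal sketch, assuming a hypothetical two-dimensional bowl-shaped error landscape (`error`) with its lowest point at (3, -2); each generation scatters a population around the current best solution, and the lowest-error member becomes the centre of the next population. It is closer to a simple evolution strategy than to NEAT, and the names and parameter values are illustrative.

```python
import random


def error(x: float, y: float) -> float:
    """Hypothetical 2D error landscape: a bowl whose lowest point is at (3, -2)."""
    return (x - 3) ** 2 + (y + 2) ** 2


def evolve(pop_size: int = 30, spread: float = 0.5, generations: int = 200, seed: int = 1):
    rng = random.Random(seed)
    # start from a random location
    parent = (rng.uniform(-10, 10), rng.uniform(-10, 10))
    for _ in range(generations):
        # scatter a new population around the current best; `spread` sets how tight the circle is
        population = [
            (parent[0] + rng.gauss(0, spread), parent[1] + rng.gauss(0, spread))
            for _ in range(pop_size)
        ]
        population.append(parent)  # keep the old best so we never move uphill
        # the solution with the lowest error becomes the centre of the next population
        parent = min(population, key=lambda p: error(*p))
    return parent
```

`pop_size` and `spread` are exactly the kind of knobs discussed next: a wider `spread` explores more of the landscape per generation but settles less precisely near the bottom.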

Here we have parameters to play with: How many points are in our population? How do we reproduce the fittest solution? How tight are the circles around each other? And at what point is one population considered to be two different populations? And so on. Look at the following figure to see what I mean by the last question:

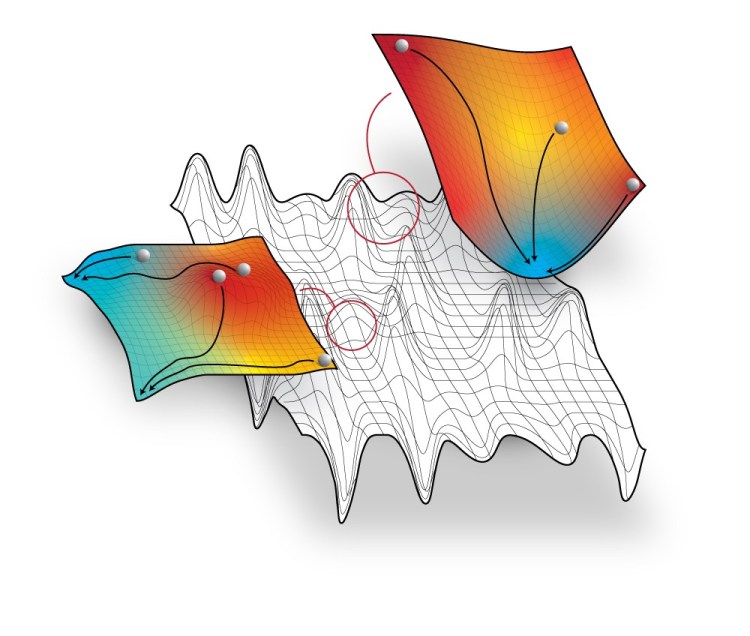
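The question of when one population counts as two can be sketched as a simple clustering rule: a point joins the first species whose representative is closer than some threshold; otherwise it founds a new species. NEAT uses a similar threshold test, but on a compatibility distance between genomes (mismatched genes and weight differences) rather than plain distance on the landscape, so the `speciate` function below is a hypothetical simplification.

```python
import math


def speciate(points, threshold):
    """Group points into species: join the first species whose representative
    (its first member) is closer than `threshold`, otherwise found a new one."""
    species = []
    for point in points:
        for group in species:
            if math.dist(point, group[0]) < threshold:
                group.append(point)
                break
        else:  # no species was close enough
            species.append([point])
    return species


points = [(0.0, 0.0), (0.2, 0.1), (5.0, 5.0), (5.1, 4.9)]
print(len(speciate(points, threshold=1.0)))   # 2
print(len(speciate(points, threshold=10.0)))  # 1
```

The threshold plays the role of “how far apart do two tribes have to drift before we call them different”: a tight threshold splits the population into many species, a loose one lumps everything together.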

You see, after a while, the population can end up separated into different species. NEAT, for example, uses speciation so that new solutions get a chance to mature instead of being outcompeted right away, and you can end up with many good solutions for the task you’re working on. Hopefully, by now, we have a way to see what “the fittest” means. I know I oversimplified this, and you should know it too. For example, this landscape is not static, and we don’t know what we are optimising here. Take a look at this:

The landscape is massive, open-ended, dynamic, and has an unknown number of variables and constraints. But at least now we have a way to look at a toy example. Just so everyone is on the same page, keep this figure in mind:

The misalignment; Survival

I remember reading many times that evolution isn’t smart. It’s just recycling stuff and doing dirty, messy things. But I couldn’t get this; I mean, look around you, everything is so perfectly fitted to its environment. Tiny things do brilliant things, so how is this messy and inelegant? Then I used NEAT for my project and saw the mess in action. Oh boy, it’s terrible inside. I remember reading about tons of junk DNA that is just there, apparently doing nothing, and about many things we carry that had a function at some point but not anymore. Let me show you what’s happening.

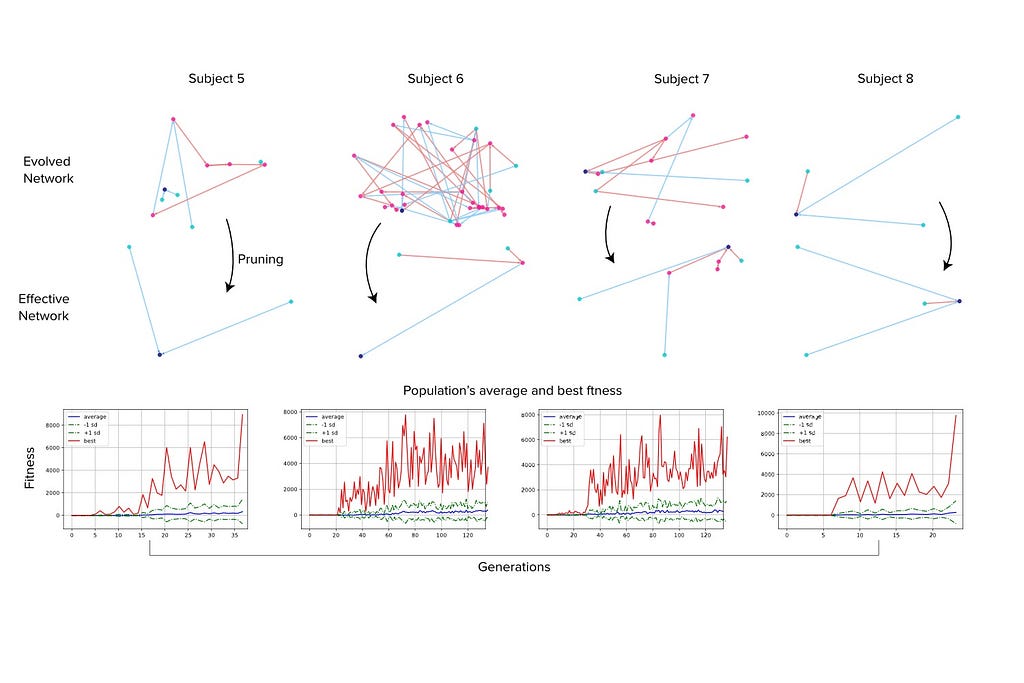

NEAT is an evolutionary algorithm that people use to train their AIs. You should probably have a sense of what it does by now; otherwise, I wasn’t successful in what I set out to do in the first part. Tell me, please! Anyway, I won’t go into the technical details of NEAT and how cool it is; you can find tons of articles here on Medium, so just search “neuroevolution” and watch NEAT in action. I used NEAT to build artificial brains capable of playing a given computer game. I didn’t use fancy Deep Reinforcement Learning stuff because I needed the network to be traceable. My PhD project is not about developing better AI; as a neuroscientist, I want to know how the structure of this artificial brain is linked to its function. For that, I’m using a game-theoretical framework called Multi-perturbation Shapley Value Analysis. It doesn’t matter how it works; all you need to know is that once I had my AI agent, I wanted to ruthlessly destroy its brain many times to see how damaging different components messes with its performance. So I started with Flappy Bird. I ended up with many networks that were equally good at playing Flappy Bird, but the strange thing was their topology. Let me show you:

Ok, just look at this fucking mess. NEAT evolved those entangled webs with lots of literally useless neurons and connections. I could prune them because, in this case, I only had one output neuron, so any path that doesn’t eventually end up there is useless. You see, after pruning those dead ends, we end up with a much smaller network that does the job, while the rest is just there, much like our junk DNA. But why?
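The pruning itself is a graph reachability problem: walk backwards from the output neuron and drop every connection that can’t reach it. A minimal sketch, assuming the network is given as a list of hypothetical `(source, target)` neuron pairs; it only removes structural dead ends, so connections that reach the output but barely matter will survive.

```python
from collections import defaultdict, deque


def prune_dead_ends(edges, output):
    """Keep only the connections that lie on some path ending at `output`."""
    incoming = defaultdict(list)
    for src, dst in edges:
        incoming[dst].append(src)
    # walk backwards from the output neuron to find every neuron that can reach it
    reaches_output = {output}
    queue = deque([output])
    while queue:
        node = queue.popleft()
        for src in incoming[node]:
            if src not in reaches_output:
                reaches_output.add(src)
                queue.append(src)
    # a connection is useful only if its target can still reach the output
    return [(src, dst) for src, dst in edges if dst in reaches_output]


edges = [("in1", "h1"), ("h1", "out"), ("in2", "h2"), ("h2", "h3"), ("in1", "h2")]
print(prune_dead_ends(edges, "out"))  # [('in1', 'h1'), ('h1', 'out')]
```

The branch through `h2` and `h3` never reaches `out`, so it disappears; the visited set also keeps the walk from looping forever if the evolved network contains recurrent connections.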

I was so troubled by this because I was asking evolution the wrong question. I kept asking: what is the evolutionary benefit of this thing? In other words, if something is there, it must have had an advantage, so it stayed; otherwise, evolution would have thrown it away. Nope, it’s the other way around. We are talking about the survival of the fittest, so whatever doesn’t kill me is not something evolution has to deal with. Let me put it differently:

Not everything has evolutionary benefits; we are carrying lots of garbage in our bodies because it didn’t have much of an evolutionary cost.
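The “no cost, no removal” idea can be played with in a hypothetical toy model. Each genome has one useful gene and a count of junk genes, mutations occasionally add or delete a junk gene, and the fitter half of the population reproduces. The only knob is `cost_per_junk_gene`. This is a sketch under my own assumptions (truncation selection, the mutation rates, starting with 20 junk genes), not a model of real genomes.

```python
import random


def mean_junk_after_evolution(cost_per_junk_gene: float, generations: int = 200,
                              pop_size: int = 50, seed: int = 2) -> float:
    """Hypothetical toy model. A genome is (useful_gene, junk_count): only the useful gene
    affects performance (best at 1.0), and each junk gene costs `cost_per_junk_gene` fitness.
    Returns the average number of junk genes in the final population."""
    rng = random.Random(seed)
    population = [(rng.uniform(-1, 1), 20) for _ in range(pop_size)]  # all start with 20 junk genes

    def fitness(genome):
        useful, junk = genome
        return -(useful - 1.0) ** 2 - cost_per_junk_gene * junk

    for _ in range(generations):
        # truncation selection: only the fitter half reproduces
        parents = sorted(population, key=fitness, reverse=True)[: pop_size // 2]
        population = []
        for _ in range(pop_size):
            useful, junk = rng.choice(parents)
            useful += rng.gauss(0, 0.05)  # small mutation of the useful gene
            r = rng.random()              # rarely lose or gain one junk gene
            if r < 0.1:
                junk = max(0, junk - 1)
            elif r > 0.9:
                junk += 1
            population.append((useful, junk))
    return sum(junk for _, junk in population) / pop_size


print(mean_junk_after_evolution(cost_per_junk_gene=1.0))  # junk is costly
print(mean_junk_after_evolution(cost_per_junk_gene=0.0))  # junk is free
```

When junk genes cost fitness, selection purges them almost completely; when they are free, the junk count just drifts around, because nothing in the selection step ever looks at it.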

Let me go back to the Shapley value for a second, and then we can wrap it up. What this value indicates is the importance of an element for the system’s performance (oversimplified). Perfect: I now have a number for how important each element is, + evolution = we can see stuff again. That’s what I did, this time not with Flappy Bird but with Space Invaders. Ask me for the details if you’re interested, but for now, all we need to know is that I ended up with a system of 51 connections among 19 neurons. I calculated the importance of each connection. Look for yourself:
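To make “importance” a bit more concrete, here is a minimal sketch of an exact Shapley value computation. It is not my actual analysis pipeline: `shapley_values` and `toy_performance` are hypothetical names, and the toy “brain” has only four connections, where `a` and `b` only work together, `c` helps a little and `d` hurts. Each element’s value is its marginal contribution to performance, averaged over every order in which the elements could be switched on. With 51 connections there are 51! orderings, so, as far as I understand, MSA estimates the values by sampling orderings rather than enumerating them all.

```python
from itertools import permutations
from math import factorial


def shapley_values(elements, performance):
    """Exact Shapley values: each element's marginal contribution to `performance`,
    averaged over every order in which the elements could be switched on."""
    totals = {e: 0.0 for e in elements}
    for order in permutations(elements):
        intact = set()
        before = performance(frozenset(intact))
        for e in order:
            intact.add(e)
            after = performance(frozenset(intact))
            totals[e] += after - before
            before = after
    n_orders = factorial(len(elements))
    return {e: total / n_orders for e, total in totals.items()}


def toy_performance(intact):
    """Hypothetical 'brain' with four connections: a and b only work together,
    c helps a little, and d actively hurts."""
    score = 10.0 if {"a", "b"} <= intact else 0.0
    score += 1.0 if "c" in intact else 0.0
    score -= 1.0 if "d" in intact else 0.0
    return score


print(shapley_values(["a", "b", "c", "d"], toy_performance))
# {'a': 5.0, 'b': 5.0, 'c': 1.0, 'd': -1.0}
```

Neither `a` nor `b` does anything alone, so they split the credit for their joint effect, and the harmful connection `d` gets a negative value. The values also add up to the full system’s performance (10).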

On the left side, you can see that most of the connections are more or less useless (their Shapley values are around zero). This time I couldn’t prune them, because they are all connected to the output neurons in the end. But functionally, their existence is not crucial for performance. In fact, this is what the right side of the figure shows: I could get the same performance with only the four most important connections. What makes it more interesting is that the other 47 connections can’t do shit without these four! So basically, evolution didn’t throw them away, not even the one that had a negative impact on performance. What I want to show here is that not only are we carrying junk around, but some of it is reducing our fitness. This takes us to the last point:

It’s still happening.

There was another point that I kept forgetting. We’re not done: the landscape is massive, open-ended, dynamic, and has an unknown number of variables and constraints. It’s possible that the network would eventually have lost that one bitchy connection that is messing with its fitness, but I decided to stop the run once the network reached a specific low point. It got there anyway, with that burden on its artificial shoulders, so who cares. I ended the simulation to look at the current network, and it’s like us looking around and assuming this is it. We are the evolutionary hallmark; we are the fittest; look at us, look at this big brain. No, stop looking: we’re not done, and there’s no end to this as long as there’s a landscape to explore. Organisms will adapt, evolution will keep fitting, and shit will change.
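Stopping a simulation is a bit like freezing the landscape in your head while the real one keeps moving. A minimal sketch, assuming a hypothetical one-dimensional landscape whose lowest point drifts by 0.05 every generation: a population that keeps evolving tracks the moving optimum, while a solution frozen at the start falls further behind every generation. The function name and numbers are illustrative.

```python
import random


def track_moving_optimum(generations: int = 200, keep_evolving: bool = True, seed: int = 3) -> float:
    """Hypothetical 1D landscape whose lowest point moves 0.05 to the right every generation.
    Returns the final distance between the best solution and where the optimum ended up."""
    rng = random.Random(seed)
    best = 0.0
    optimum = 0.0
    for t in range(generations):
        optimum = 0.05 * t  # the landscape keeps changing
        if keep_evolving:
            offspring = [best + rng.gauss(0, 0.2) for _ in range(20)]
            # error = distance to the current optimum; keep the old best in the running
            best = min(offspring + [best], key=lambda x: abs(x - optimum))
    return abs(best - optimum)


print(track_moving_optimum())                     # stays close to the moving optimum
print(track_moving_optimum(keep_evolving=False))  # about 9.95: left behind
```

The frozen solution was perfect at generation 0 and is nearly 10 units off by the end, even though it never changed; “the fittest” only means anything relative to the landscape at that moment.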

I want to leave you with a figure from the book “The Tree of Knowledge: The Biological Roots of Human Understanding” by Humberto R. Maturana and Francisco J. Varela. It inspired me a lot, and I recommend y’all give it a try.

Disclaimer: I don’t know how many times I should emphasise this, but I’m not an evolutionary biologist, and this post wasn’t supposed to give you a lecture or anything. I struggled myself, and I thought this post could help others. I know many people like me need to see stuff to understand it, so, again, I wanted to give you a visual intuition. Learn the real deal somewhere else.


On Evolution was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Via https://becominghuman.ai/on-evolution-6db4f308795?source=rss—-5e5bef33608a—4
