Delivering the technical evaluation metrics or the model performance only (accuracy, recall, precision) to client is not enough!

Continue reading on Becoming Human: Artificial Intelligence Magazine »

365 Data Science is an online educational career website that offers the incredible opportunity to find your way into the data science world no matter your previous knowledge and experience.

Delivering the technical evaluation metrics or the model performance only (accuracy, recall, precision) to client is not enough!

Continue reading on Becoming Human: Artificial Intelligence Magazine »

The Backpropagation is used to update the weights in Neural Network .

Paul John Werbos is an American social scientist and machine learning pioneer. He is best known for his 1974 dissertation, which first described the process of training artificial neural networks through backpropagation of errors. He also was a pioneer of recurrent neural networks. Wikipedia

Let us consider a Simple input x1=2 and x2 =3 , y =1 for this we are going to do the backpropagation from Scratch

Here , we can see the forward propagation is happened and we got the error of 0.327

We have to reduce that , So we are using Backpropagation formula .

we are going to take the w6 weight to update , which is passes through the h2 to output node

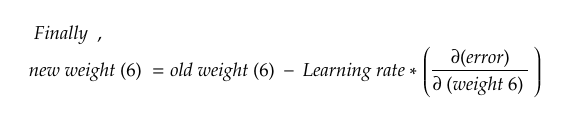

For the backpropagation formula we set Learning_rate=0.05 and old_weight of w6=0.15

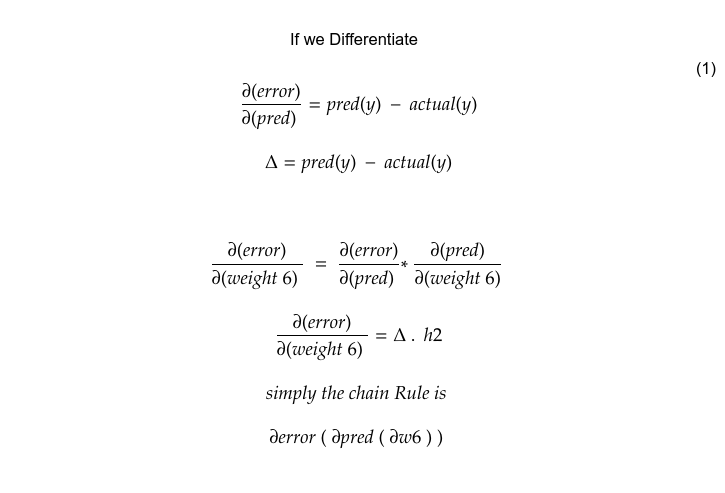

but we have to find the derivative of the error with respect to the derivative of weight

To find derivative of the error with respect to the derivative of weight , In the Error formula we do not have the weight value , but predication Equation has the weight

For that Chain rule comes to play , you can see the chain rule derivative ,we are differentiating respect with w6 so power of the w6 1 so it becomes 1–1, others values get zero , so we get the h2

for d(pred)/d(w6) we got the h2 after solving it

After Solving same as above we will get

d(error)/actual(y) = pred(y)- actual(y)

the more equation takes to get the weight values the more it gets deeper to solve

We now got the all values for putting them into them into the Backpropagation formula

After updating the w6 we get that 0.17 likewise we can find for the w5

But , For the w1 and rest all need more derivative because it goes deeper to get the weight value containing equation .

1. Cheat Sheets for AI, Neural Networks, Machine Learning, Deep Learning & Big Data

3. Getting Started with Building Realtime API Infrastructure

For Simplicity i have not used the bias value and activation function , if activation function is added means we have to differentiate that to and have to increase the function be like

d(error(d pred ( d h ( d sigmoid())) )

this is how the single backpropagation goes , After this goes again forward then calculates error and update weights , Simple…….

Chain Rule for Backpropagation was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Via https://becominghuman.ai/chain-rule-for-backpropagation-55c334a743a2?source=rss—-5e5bef33608a—4

source https://365datascience.weebly.com/the-best-data-science-blog-2020/chain-rule-for-backpropagation

Originally from KDnuggets https://ift.tt/2yiqRlA

Originally from KDnuggets https://ift.tt/2K2CLmm

With the tremendous popularity of data science that shows no signs of slowing down, those looking for future jobs for data science professionals should be ready for some good news. As a humungous 2.5 Quintillion bytes of data gets generated each day, there’s a growing demand for professionals who are capable of organizing this enormous pile of data to offer meaningful insights, which in turn can help businesses make informed decisions and find relevant solutions.

No wonder why future jobs for data science professionals will hail these people as the hero since these are those who can extract meaning from seemingly innocuous data — no matter whether it’s structured and organized or unstructured and disorganized. Though the post of data scientist has featured as the leader among other jobs for a few years in a row, the increasing emphasis on AI (artificial intelligence) and ML (machine learning) has given rise to a few jobs, the demand for which may soon outgrow that of data scientists.

In fact, the competition between machine learning engineers and data scientists is heating up and the line between them is blurring fast.

Before taking a deeper look into future jobs for data science professionals, let’s take a closer look into how artificial intelligence and machine learning have evolved over the years and what lies ahead in store for these domains.

It was mathematician and scientist Alan Turing who spent a lot of time after the Second World War on devising the Turing Test. Though it was basic, this test involved evaluating if it was possible for artificial intelligence to hold a realistic conversation with a person, thus convincing them that they were also human.

Since Turing’s Test, AI was restricted to fundamental computer models. It was in 1955 when John McCarthy — a professor at MIT, coined the term “artificial intelligence”. During his tenure at MIT, he built an AI laboratory. Full List Processing (LISP) was developed by him there. LISP was a computer programming language for robotics intended to provide expansion potential as technology improvements happened with time.

Though promise was shown by some base model machines — be it Shakey the Robot (1966) or Waseda University’s anthropomorphic androids WABOT-1 (1973) and WABOT-2 (1984), it wasn’t until 1990 when Rodney Brooks revitalized the concept of computer intelligence. But it took many more years for artificial intelligence to evolve as it was only in 2014 that Eugene, which was a chatbot program designed by the Russians, was able to successfully convince 33% of human judges. According to Turing’s original test, more than 30% was a pass, though plenty of room was left for stepping it up in the future.

From its humble beginnings, artificial intelligence (AI) has evolved as perhaps the most significant technological advancement in recent decades across all industries. Be it the robotics aspect of AI, or the implementation of machine learning (ML) technologies that are driving useful insights from big data, the future seems to hold a lot of promise. In fact, the enhanced information extracted from large chunks of data is helping companies today to mitigate supply chain risks, improve customer retention rates, and do a lot more.

An example of how these technologies could change the way we live and work became evident when Amazon introduced its Alexa in the workplace. However, many believe that the AI-powered, voice-activated device signals just the beginning. Thanks to NLP (natural language processing), which is made possible via machine learning, modern computers, hi-tech systems, and solutions can now know the context and meaning of sentences in a much better way.

As NLP becomes more improved and refined, humans will start communicating with machines seamlessly exclusively via voice without the need of writing code for a command. Thus, professionals who can design and test devices based on NLP and voice-driven interactions are likely to be in high demand in the future.

3. Machine Learning using Logistic Regression in Python with Code

With the growing interest and implementation of artificial intelligence in various fields and the promising future the global machine learning market (predicted to grow to $8.8B by 2022 from $1.4B in 2017, according to a report by Research and Markets), there’s bound to be a wide variety in future jobs for data science professionals as well those specializing in AI and ML.

Data scientists would continue to be in demand though a new position of machine learning engineer is giving it a tough competition as more and more companies are adopting artificial intelligence technologies. In many places where data specialists are working, this relatively new role is emerging slowly. Perhaps you are now wondering who a machine learning engineer is, what the skill requirements for this position are and what kind of salary is on offer.

Let’s try to find answers to these questions.

These are sophisticated programmers whose work is to develop systems and machines that can learn and implement knowledge without particular direction.

For a machine learning engineer, artificial intelligence acts as the goal. Though these professionals are computer programmers, their focus goes further than particularly programming machines to execute specific tasks. Their emphasis is on building programs that will facilitate machines to take actions without being explicitly directed to carry out those tasks.

The roles performed by these professionals include:

When it comes to the skill sets that machine learning engineers need, there are some that are common with those required by data scientists such as:

In addition to the above, you must have the following ML engineer skills:

Additionally, you should have knowledge of applied Mathematics (with emphasis on Algorithm theory, quadratic programming, gradient descent, partial differentiation, convex optimizations, etc.), software development (Software Development Life Cycle or SDLC, design patterns, modularity, etc.), and time-frequency analysis as well as advanced signal processing algorithms (like Curvelets, Wavelets, Bandlets, and Shearlets).

Apart from data scientist and machine learning engineer, some other future jobs for data science professionals could be

Thus, with the increasing adoption of artificial intelligence and machine learning, there won’t be any dearth of future jobs for data science professionals.

Mini bootcamps enabled for live-online sessions. Now you can join our bootcamps wherever you are. https://t.co/ySWEDhYqsr #online #zoom #guru #datascientist #bigdata #tobedatascientist #artificialintelligence #machinelearning #python #dataanalytic #NLP #datascience #deeplearning

What would be the future jobs for data science in terms of artificial intelligence and machine… was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Researchers in artificial intelligence have long been working towards modeling human thought and cognition. Many of the overarching goals in machine learning are to develop autonomous systems that can act and think like humans. In order to imitate human learning, scientists must develop models of how humans represent the world and frameworks to define logic and thought. From these studies, two major paradigms in artificial intelligence have arose: symbolic AI and connectionism.

The first framework for cognition is symbolic AI, which is the approach based on assuming that intelligence can be achieved by the manipulation of symbols, through rules and logic operating on those symbols. The second framework is connectionism, the approach that intelligent thought can be derived from weighted combinations of activations of simple neuron-like processing units.

Symbolic AI theory presumes that the world can be understood in the terms of structured representations. It asserts that symbols that stand for things in the world are the core building blocks of cognition. Symbolic processing uses rules or operations on the set of symbols to encode understanding. This set of rules is called an expert system, which is a large base of if/then instructions. The knowledge base is developed by human experts, who provide the knowledge base with new information. The knowledge base is then referred to by an inference engine, which accordingly selects rules to apply to particular symbols. By doing this, the inference engine is able to draw conclusions based on querying the knowledge base, and applying those queries to input from the user. Example of symbolic AI are block world systems and semantic networks.

Connectionism theory essentially states that intelligent decision-making can be done through an interconnected system of small processing nodes of unit size. Each of the neuron-like processing units is connected to other units, where the degree or magnitude of connection is determined by each neuron’s level of activation. As the interconnected system is introduced to more information (learns), each neuron processing unit also becomes either increasingly activated or deactivated. This system of transformations and convolutions, when trained with data, can learn in-depth models of the data generation distribution, and thus can perform intelligent decision-making, such as regression or classification. Connectionism models have seven main properties: (1) a set of units, (2) activation states, (3) weight matrices, (4) an input function, (5) a transfer function, (6) a learning rule, (7) a model environment.[1] The units, considered neurons, are simple processors that combine incoming signals, dictated by the connectivity of the system. The combination of incoming signals sets the activation state of a particular neuron.

At every point in time, each neuron has a set activation state, which is usually represented by a single numerical value. As the system is trained on more data, each neuron’s activation is subject to change. The weight matrix encodes the weighted contribution of a particular neuron’s activation value, which serves as incoming signal towards the activation of another neuron. Most networks incorporate bias into the weighted network. At any given time, a receiving neuron unit receives input from some set of sending units via the weight vector. The input function determines how the input signals will be combined to set the receiving neuron’s state. The most frequent input function is a dot product of the vector of incoming activations. Next, the transfer function computes a transformation on the combined incoming signals to compute the activation state of a neuron. The learning rule is a rule for determining how weights of the network should change in response to new data. Back-propagation is a common supervised learning rule. Lastly, the model environment is how training data, usually input and output pairs, are encoded. The network must be able to interpret the model environment. An example of connectionism theory is a neural network.

The advantages of symbolic AI are that it performs well when restricted to the specific problem space that it is designed for. Symbolic AI is simple and solves toy problems well. However, the primary disadvantage of symbolic AI is that it does not generalize well. The environment of fixed sets of symbols and rules is very contrived, and thus limited in that the system you build for one task cannot easily generalize to other tasks. The symbolic AI systems are also brittle. If one assumption or rule doesn’t hold, it could break all other rules, and the system could fail. It’s not robust to changes. There is also debate over whether or not the symbolic AI system is truly “learning,” or just making decisions according to superficial rules that give high reward. The Chinese Room experiment showed that it’s possible for a symbolic AI machine to instead of learning what Chinese characters mean, simply formulate which Chinese characters to output when asked particular questions by an evaluator.

3. Machine Learning using Logistic Regression in Python with Code

The main advantage of connectionism is that it is parallel, not serial. What this means is that connectionism is robust to changes. If one neuron or computation if removed, the system still performs decently due to all of the other neurons. This robustness is called graceful degradation. Additionally, the neuronal units can be abstract, and do not need to represent a particular symbolic entity, which means this network is more generalizable to different problems. Connectionism architectures have been shown to perform well on complex tasks like image recognition, computer vision, prediction, and supervised learning. Because the connectionism theory is grounded in a brain-like structure, this physiological basis gives it biological plausibility. One disadvantage is that connectionist networks take significantly higher computational power to train. Another critique is that connectionism models may be oversimplifying assumptions about the details of the underlying neural systems by making such general abstractions.

While both frameworks have their advantages and drawbacks, it is perhaps a combination of the two that will bring scientists closest to achieving true artificial human intelligence.

Symbolic AI vs Connectionism was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Via https://becominghuman.ai/symbolic-ai-vs-connectionism-9f574d4f321f?source=rss—-5e5bef33608a—4

source https://365datascience.weebly.com/the-best-data-science-blog-2020/symbolic-ai-vs-connectionism

As the panic continues to spread worldwide and the COVID-19 continues to infect more and more people, the first measures to contain the…

Continue reading on Becoming Human: Artificial Intelligence Magazine »

Originally from KDnuggets https://ift.tt/3af94cE

Originally from KDnuggets https://ift.tt/3enF4hF

Originally from KDnuggets https://ift.tt/3a8VZBe