Originally from KDnuggets https://ift.tt/3bNXbyn

Forget Telling Stories; Help People Navigate

Originally from KDnuggets https://ift.tt/38G7J0x

Top Stories Mar 8-14: How To Overcome The Fear of Math and Learn Math For Data Science

Originally from KDnuggets https://ift.tt/3vrfuAT

AI Industry Innovation: Making the Invisible Visible

Originally from KDnuggets https://ift.tt/30KbEVn

Kedro-Airflow: Orchestrating Kedro Pipelines with Airflow

Originally from KDnuggets https://ift.tt/3qH8oVp

How Artificial Intelligence Is Helping Enhance Usability of Websites?

Over the past decade, Artificial Intelligence (AI) has found its applications in many different fields. Businesses are increasingly adopting AI for their growth and rightly so as its benefits are evident from the results seen by these businesses.

Recent studies have shown that more than 90% of leading businesses have ongoing investments in AI. One of the most notable applications of AI for businesses is improving the usability and user experience of their websites.

If you’re wondering how you can make the most of AI and use it to improve your business website’s usability, then read on to learn more.

1. AI Chatbots

Consumer expectations have changed over the years. Today, consumers are impatient, and they want instant resolutions to their queries. Failing to respond quickly may lead to increased website abandonment and reduced conversions.

The most feasible solution to this problem is an AI-powered chatbot. Chatbots are a self-service channel that resolves customer queries in real time.

AI chatbots are a win-win for you and your customers. Not only do they support customers in real time, but they also help you reduce your customer service costs, as you rely on AI instead of manual support.

AI chatbots can learn from customer responses and offer better support over time. If you’re noticing an increased bounce rate and reduced conversions, then AI chatbots are definitely something you should consider.

Also Read: Building a simple NLP-based Chatbot in Python!

2. Website Accessibility

Did you know that one in four American adults lives with a disability? These users may not be able to access your website’s features if you haven’t fixed your website accessibility yet.

Many factors contribute to accessibility. Not addressing them can lead to reduced conversions, website abandonment, a damaged brand reputation, and even expensive ADA lawsuits.

To avoid this, AI comes to your rescue. You can invest in an AI-powered website accessibility solution that audits your website for accessibility and automatically fixes many of the issues it finds. This helps keep your website aligned with ADA and WCAG requirements.

3. User-Friendly Search

One of the major elements of your website that impacts usability is search. When users perform searches on your website, they’re looking for something specific. Using semantic search, you can make their experience more user-friendly and rewarding.

AI technologies such as natural language processing and machine learning can learn from customer behaviour and improve the search experience. Apart from better usability, this can also lead to increased sales, better conversions, and increased customer retention.

Trending AI Articles:

1. Top 5 Open-Source Machine Learning Recommender System Projects With Resources

4. Why You Should Ditch Your In-House Training Data Tools (And Avoid Building Your Own)

4. Personalized User Experience

In the post-COVID era, more and more businesses are establishing an online presence, leading to rising competition. How do you stand out among hundreds of competitors? The best way to go about it is by offering a personalized experience to your users.

Consumers are not looking for one-size-fits-all solutions. They want solutions that exactly fit their needs and are tailored specifically to their pain points. You can target your users with personalized experiences with the help of AI.

Dialogue AI is an eCommerce personalization platform that automatically generates content and personalization recommendations, creating dynamic widgets designed to drive sales and engagement. This enables a powerful shopping experience for your customers and can increase conversion rate, engagement, and total buy-rate.

Therefore, using AI, you can offer personalized product recommendations, change your messaging to address specific pain points, personalize pop-up messages, and offer many more similar solutions. Personalization with the help of AI can be a game-changer for your business, and hence, it is a must-try!

Also Read: How AI is Impacting Web Design in 4 Big Ways?

5. AI Assistants

AI-powered virtual assistants are getting increasingly popular on business websites. They assist your users by helping them along their customer journey.

From understanding your offering and browsing through products to completing the checkout process, such assistants can be extremely helpful in improving your website’s usability.

Using techniques such as image recognition, AI assistants can make your customers’ experience quicker and much more satisfying.

6. Sentiment Analysis

Understanding customers’ perceptions of your products and services can be vital to improving your business’s key metrics. But you cannot always expect straightforward feedback from your users. This is where sentiment analysis can be beneficial.

Using AI-driven sentiment analysis tools, you can understand how your customers feel about your products, services, websites, features, etc. Such tools sift through customers’ comments and give you an overview of the likeability of your offerings.

This data can be eye-opening, and you can use it to improve your offerings, add new features, remove unwanted features, and offer a better user experience. This will ultimately lead to increased sales and revenue for your business.
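To make the idea concrete, here is a minimal sketch of how a tool might sift through comments. It is a toy word-counting approach with a tiny made-up lexicon (`POSITIVE` and `NEGATIVE` are assumptions for illustration); real sentiment analysis products rely on much larger lexicons or trained models.

```python
import re

# Hypothetical mini-lexicon, for illustration only.
POSITIVE = {"great", "love", "fast", "easy", "helpful"}
NEGATIVE = {"slow", "broken", "confusing", "hate", "expensive"}


def sentiment_score(comment: str) -> int:
    """Return (number of positive words) minus (number of negative words)."""
    words = re.findall(r"[a-z']+", comment.lower())
    positives = sum(w in POSITIVE for w in words)
    negatives = sum(w in NEGATIVE for w in words)
    return positives - negatives


def summarize(comments):
    """Count how many comments lean positive, negative, or neutral."""
    summary = {"positive": 0, "negative": 0, "neutral": 0}
    for comment in comments:
        score = sentiment_score(comment)
        if score > 0:
            summary["positive"] += 1
        elif score < 0:
            summary["negative"] += 1
        else:
            summary["neutral"] += 1
    return summary
```

Calling `summarize` on a batch of customer comments gives the kind of high-level overview described above; the per-comment scores can then point you to the features people complain about most.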

Also Read: Machine Learning For Sentiment Analysis (Using Python)

Final Thoughts

To conclude, we can say that AI can be revolutionary in changing the course of your business’s growth. Investing in AI-powered tools will lead to improvements in website usability, which will help you grow your sales and brand awareness.

So, start investing in AI right away to see drastic improvements in your website’s usability.

How Artificial Intelligence Is Helping Enhance Usability of Websites? was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Flexibility — Key Advantage in Data Annotation Market

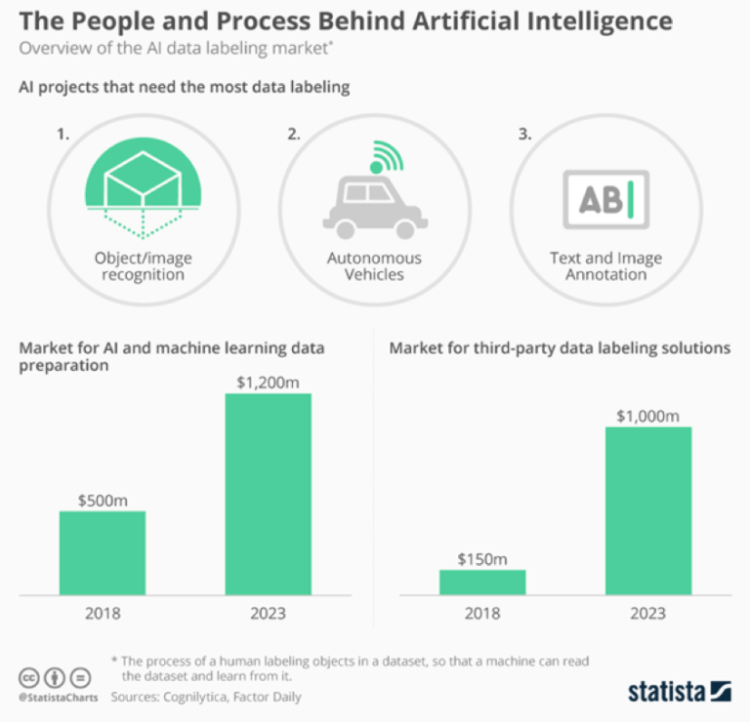

Data Annotation Market Size

The global data annotation market was valued at US$ 695.5 million in 2019 and is projected to reach US$ 6.45 billion by 2027, according to a Research and Markets report. The market is expected to grow at a CAGR of 32.54% from 2020 to 2027.
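As a quick sanity check on these figures, the compound annual growth rate can be computed from two endpoints. The sketch below uses the 2019 and 2027 values quoted above (8 years apart); the function names are illustrative. The rate implied by those two endpoints comes out at roughly 32%, in the same range as the report's 32.54%, which is measured from a 2020 base value that is not given here.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years


# Figures in US$ millions, taken from the paragraph above.
implied_rate = cagr(695.5, 6450.0, 8)  # 2019 -> 2027
print(f"Implied CAGR 2019-2027: {implied_rate:.1%}")
```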

The data annotation industry is driven by the rapid growth of the AI industry.

Data Annotation Process is Tough

Unlabeled raw data is everywhere around us: emails, documents, photos, presentation videos, and speech recordings. The majority of machine learning algorithms today need labeled data in order to learn. Data labeling is the process in which annotators manually tag various types of data, such as text, video, images, and audio, via computers or smartphones. Once finished, the manually labeled dataset is fed into a machine-learning algorithm to train an AI model.

However, data annotation itself is a laborious and time-consuming process. There are two ways to run a data labeling project. One is to do it in-house, which means the company builds or buys labeling tools and hires an in-house labeling team. The other is to outsource the work to established data labeling companies such as Appen or Lionbridge.

The booming data annotation market has also encouraged new players to secure a niche position in the competition. For example, in 2018 Playment, a data labeling platform for AI, teamed up with Ouster, a leading LiDAR sensor provider, for the annotation and calibration of 3D imagery.

Customer pain points

Here are some excerpts from Reddit discussion groups:

1. Lack of a QA/QC process.

2. Lack of monitoring: some labelers are good at the job while others are bad. It would be great to track performance per labeler.

3. Software was not designed for labelers, or encourages mistakes.

The list goes on…
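The monitoring complaint in particular is easy to picture in code. The sketch below is one common way to track performance per labeler: score each labeler against a small set of items whose correct ("gold") labels are already known. The data layout, function names, and the 0.8 threshold are assumptions for illustration, not how any particular platform works.

```python
from collections import defaultdict


def accuracy_by_labeler(submissions, gold):
    """Compute each labeler's accuracy on items that have a gold label.

    submissions: iterable of (labeler_id, item_id, label) tuples
    gold: dict mapping item_id -> correct label
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for labeler, item, label in submissions:
        if item not in gold:
            continue  # no reference answer, so this item cannot be scored
        total[labeler] += 1
        correct[labeler] += int(label == gold[item])
    return {labeler: correct[labeler] / total[labeler] for labeler in total}


def flag_for_review(accuracies, threshold=0.8):
    """Return labelers whose gold-set accuracy falls below the threshold."""
    return sorted(l for l, acc in accuracies.items() if acc < threshold)
```

Mixing a few gold items into every batch gives a running per-labeler score, which addresses both the missing QA/QC process and the wish to separate strong labelers from weak ones.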

Flexibility is the Key Advantage in Data Labeling Loop

High quality standards, data security, and scalability are the most important measures of a labeling service. Beyond these, it is worth looking at the remaining competitive factors, such as flexibility and customer service.

In machine learning, each round of testing reveals new ways to improve model performance, so the workflow changes constantly. Data labeling therefore involves uncertainty and variability. Clients need workers who can respond quickly and change the workflow based on what the model testing and validation phase shows.

Therefore, giving clients more engagement in and control over the labeling loop would be a key competitive advantage, as it provides flexible solutions.

Solution

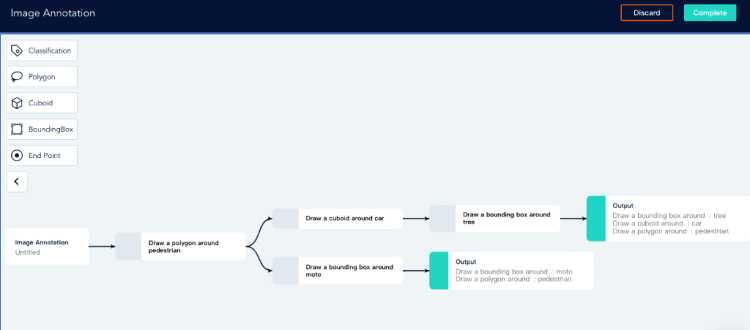

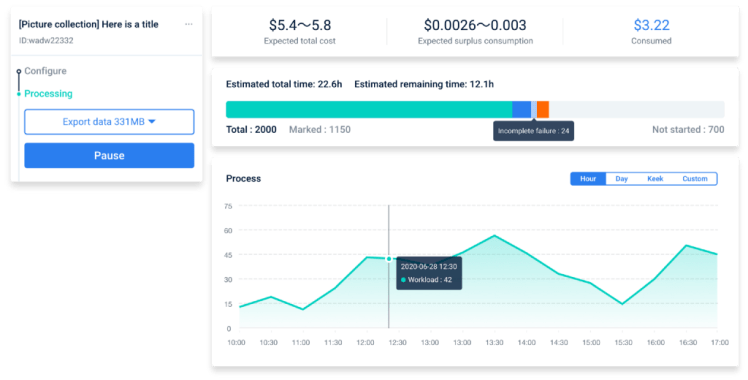

ByteBridge is a human-powered data labeling tooling platform with real-time workflow management that provides a flexible data training service for the machine learning industry.

On ByteBridge’s dashboard, developers can define and start data labeling projects and get the results back instantly. Clients can set labeling rules directly on the dashboard. In addition, clients can iterate on data features, attributes, and workflow, scale up or down, and make changes based on what they learn about the model’s performance in each step of testing and validation.

As a fully managed platform, it enables developers to manage and monitor the overall data labeling process and provides an API for data transfer. The platform also allows users to get involved in the QC process.

End

“High-quality data is the fuel that keeps the AI engine running smoothly and the machine learning community can’t get enough of it. The more accurate annotation is, the better algorithm performance will be,” said Brian Cheong, founder and CEO of ByteBridge.

Designed to empower the AI and ML industry, ByteBridge promises to usher in a new era for data labeling and accelerate the advent of the smart AI future.

Flexibility — Key Advantage in Data Annotation Market was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Synthetic Data: a primer for a non-technical audience

Synthetic Data for starters: all you need to know

Why Synthetic Data are extremely powerful for AI startups

Introduction

Imagine you want to make some photos from an album unrecognisable. The most immediate solution would be to obscure people’s faces and make them “anonymous”. In today’s reality, this solution doesn’t work.

Suppose someone wants to re-identify the faces in these photos. It might be enough to cross-reference the available information (e.g. the clothes they are wearing), with other freely available datasets and … the re-identification game is served.

Definition of Synthetic Data … in a nutshell

Synthetic data is “any production data applicable to a given situation that is not obtained by direct measurement” (McGraw-Hill Dictionary of Scientific and Technical Terms). In practice, it is data that is artificially created rather than collected from real-world events. It is often generated with the help of algorithms for data anonymization.

We can distinguish between:

- Fully Synthetic Data: the released data is entirely artificially generated and contains no original data. This technique offers strong privacy protection, but the truthfulness of the data is lost.

- Partially Synthetic Data: only the values of selected sensitive attributes are replaced with synthetic values. Compared to Fully Synthetic Data, privacy protection is weaker but accuracy is higher.
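The partially synthetic case can be sketched in a few lines. The example below swaps in a deliberately simple technique: it replaces one sensitive attribute with values resampled from the values observed in the dataset, and leaves every other field untouched. The function name and record layout are assumptions for illustration; real generators typically fit a statistical model that conditions on the other attributes, and simple resampling like this carries no formal privacy guarantee.

```python
import random


def partially_synthesize(records, sensitive_key, seed=0):
    """Return copies of `records` with `sensitive_key` replaced by values
    drawn at random (with replacement) from the observed values.

    The original records are not modified.
    """
    rng = random.Random(seed)  # seeded so results are reproducible
    observed = [record[sensitive_key] for record in records]
    return [{**record, sensitive_key: rng.choice(observed)} for record in records]


patients = [
    {"id": 1, "age": 34, "diagnosis": "A"},
    {"id": 2, "age": 51, "diagnosis": "B"},
    {"id": 3, "age": 47, "diagnosis": "A"},
]
synthetic = partially_synthesize(patients, "diagnosis")
```

The overall mix of diagnoses stays roughly realistic, but a given row's diagnosis no longer necessarily belongs to that person. Because the remaining columns are still real, this illustrates why the privacy protection is weaker than in the fully synthetic case.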

In a 2017 study, MIT researchers split data scientists into two groups: one using synthetic data and the other using real data. 70% of the time, the group using synthetic data was able to produce results on par with the group using real data.

History of Synthetic Data

In 1993, Rubin proposed the idea of fully synthetic data. He originally designed it to “anonymize” the Decennial Census long-form responses for the short-form households. Later that year, Little proposed the idea of partially synthetic data, using it to synthesize the sensitive values on the public use file.

In recent years, synthetic data has gained major traction as the benefits and risks of data science have become widely known.

Why Use Synthetic Data

Synthetic data are extremely useful because of the following advantages:

- Meet very specific needs or conditions that are not available in real data: This can be useful when privacy requirements limit the availability or usage of the data, or when the data needed for a test environment simply does not exist.

- Protect the privacy and confidentiality of a set of data: Real data could contain personal information that you may not want to be disclosed. Synthetic data holds no personal information and cannot be traced back to any individual. Therefore, the use of synthetic data reduces confidentiality and privacy issues.

Some of the business functions that can benefit from synthetic data include:

- Self-driving car simulations, which pioneered the use of synthetic data

- Software testing and quality assurance

- Clinical and scientific trials for future studies that involve data which don’t exist yet

- Fraud protection in financial services

Applications in AI and Machine Learning

The role of synthetic data in AI and Machine Learning applications is increasing rapidly. This is because these algorithms are trained with an incredible amount of data which, without synthetic data, could be extremely difficult to obtain or generate. It can also play an important role in the creation of algorithms for image recognition and similar tasks that are becoming the baseline for AI.

Generative Adversarial Networks (GAN)

Generative Adversarial Networks (GANs), introduced by Ian Goodfellow et al. in 2014, are a recent breakthrough in image generation.

They are composed of one discriminator and one generator network. While the generator network generates synthetic images that are as close to reality as possible, the discriminator network aims to distinguish real images from synthetic ones. The two networks are trained against each other, and each adjusts its weights to become better at its task.

While this method is popular in neural networks used for image tasks, it has uses beyond neural networks. It can be applied to other machine learning approaches as well.
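For readers who want the formal version, the contest between generator and discriminator is usually written as the minimax objective from the 2014 paper. Here $D(x)$ is the discriminator's estimated probability that $x$ is real, $G(z)$ is the generator's output for random noise $z$, $p_{\text{data}}$ is the distribution of real data, and $p_z$ is the noise distribution:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to make both terms large by scoring real samples near 1 and generated samples near 0, while the generator tries to make the second term small by producing samples the discriminator scores as real.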

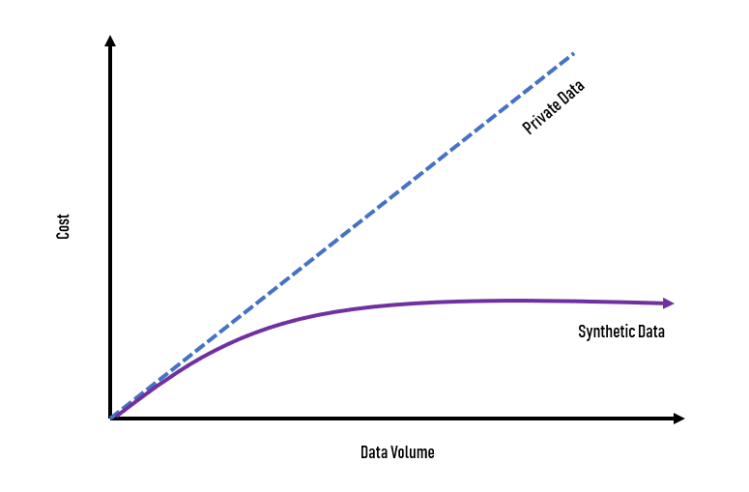

Startups vs. Tech Giants

As mentioned above, AI and Machine Learning algorithms require immense amounts of data for proper training. The world’s most valuable technology companies, such as Google, Facebook, Amazon and Baidu, harvest immense data sets of images, videos and other content from their consumers, which gives them a huge competitive advantage by allowing their computers and algorithms to learn faster.

Synthetic data is disrupting this advantage: anyone can now create and leverage synthetic data to train computers across many use cases, including retail, robotics, autonomous vehicles, commerce and much more.

“…Synthetic data will democratize the tech industry” — Evan Nisselson, TechCrunch

Some examples

- Spil.ly: Berlin-based startup that developed an augmented-reality app akin to a full-body version of Snapchat’s selfie filters. Roughly a year after it started using synthetic data, the company had roughly 10 million images made by pasting digital humans it calls simulants into photos of real-world scenes. It looked weird, but it worked.

- Neuromation: Startup based in Tallinn that is churning out synthetic images for clients that want to use cameras to track the growth of livestock.

- DensePose: open-source machine learning software released by Facebook AI that can apply special effects to humans in video, trained with 50,000 images of people hand-annotated with 5 million points.

- AiFi: computer vision and artificial intelligence startup that delivers a more efficient checkout-free solution to both mom-and-pop convenience stores and major retailers.

Conclusion

Synthetic data generation is one of the important techniques for privacy-preserving data publishing. As the published data doesn’t represent any real entity, the risk of disclosing sensitive private data is greatly reduced.

Furthermore, it is a powerful solution for an AI startup that doesn’t have the resources to collect, label and process the huge amounts of data typically needed to train complex algorithms. Synthetic data will put corporations and startups on equal terms in the artificial intelligence race.

If you liked this article, let’s connect on Linkedin!

Synthetic Data: a primer for a non-technical audience was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Must Know for Data Scientists and Data Analysts: Causal Design Patterns

Originally from KDnuggets https://ift.tt/3ctZvJI

Know your data much faster with the new Sweetviz Python library

Originally from KDnuggets https://ift.tt/3qGYa7m