Tales of an oceanographer navigating the different waves of tech companies looking for whales.

1. Introduction and Hypothesis

I loved working as a scientist. There is a deep feeling of completion and happiness when you manage to answer a why: why a given animal would go there, why it would do this at that time of the year, why that place is so diverse… This applies to any field. That is why I want to suggest that, if you are a scientist, you might want to have a look at what is called Data Science in the tech world. Be aware that I will not dwell on the details of titles such as data engineer, data analyst, data scientist, or AI researcher. Here, when I refer to Data Science, I mean the science of finding insights from data collected about a subject.

So, back to our why. In science, in order to answer your why, you introduce the whole context surrounding it and then formulate a hypothesis: “The timing of the diapause in copepods is regulated through their respiration, ammonia excretion and water column temperature.” The behaviour of the subject is the result of internal and external processes.

In marketing, you have to formulate a similar hypothesis in order to start your investigation: “3-day-old users unsubscribe due to the lack of a direct path towards the checkout.” The behaviour of the subject is the result of internal (frustration) and external (non-optimized UX/UI) processes.

- References

Although I would have wanted to put this part at the end, as in any scientific paper, it goes without saying that your introduction presents the current ideas, results, and hypotheses of your field of research. So, as a researcher, you need to accumulate knowledge about your subject, and you go looking for scientific articles. The same is true in tech. There are plenty of scientific and non-scientific resources out there that will allow you to better understand, interpret and improve your product. Take this article, for instance: Medium is a wonderful knowledge base on so many topics! But you can also find fascinating articles on PLOS ONE about user experience, marketing design, and so on.

2. Material and Methods

- Data collection

As a marine biologist and later an oceanographer, I took great pleasure in going into the field and collecting data (platyhelminths, fish counts, zooplankton, etc.). Then we needed to translate the living “data” into numeric data. In the tech industry, it is the same idea. Instead of nets, quadrats, and terrain coverage, you set up tracking events, collect postbacks from your partners and pull third-party data. The question is the same: “how do I get the information that will help me answer my why?” So a field sampling mission and a data collection plan have a lot in common.

- Data treatment

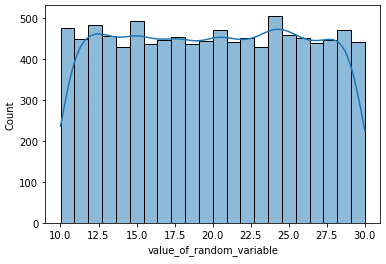

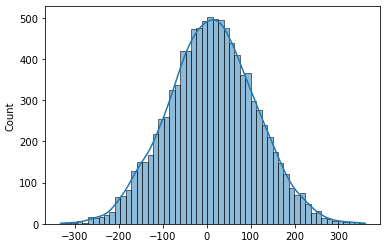

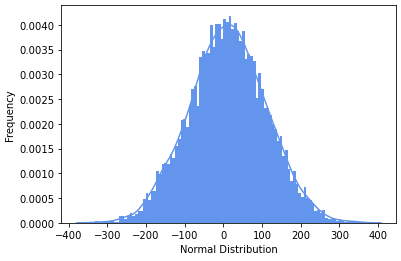

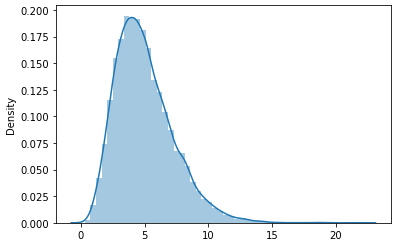

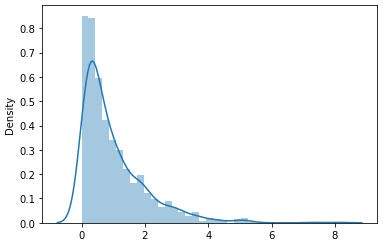

In ecology and oceanography, you need to have the big picture. You play with a lot of scales (spatial, temporal). To do so, you usually want the most complete dataset possible, so you interpolate missing data, you decide to remove some of it, or you resample (when you have the time and funding). This is exactly what you do in the tech industry as well: in order to better understand your product, you want to know as much as you can, so you set up tracking events, you record your demographics through Google Analytics, and you aggregate your raw data into “cooked” data with AWS Lambda. Although all of this may sound alien if you come from academia, it is pretty much the same idea as field sampling followed by the identification of your species and environmental variables.
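To make the parallel concrete, here is a minimal sketch in plain Python of two of the steps mentioned above: filling gaps by linear interpolation, and aggregating raw tracking events into daily "cooked" counts. The function names, the sample readings, and the event names are all hypothetical, and a real pipeline (in AWS Lambda or elsewhere) would look different.

```python
from collections import defaultdict
from datetime import datetime


def interpolate_gaps(values):
    """Fill None gaps by linear interpolation between the nearest known values.

    Leading or trailing gaps stay as None: there is nothing to interpolate between.
    """
    filled = list(values)
    known = [i for i, v in enumerate(filled) if v is not None]
    for left, right in zip(known, known[1:]):
        step = (filled[right] - filled[left]) / (right - left)
        for i in range(left + 1, right):
            filled[i] = filled[left] + step * (i - left)
    return filled


def daily_event_counts(events):
    """Aggregate raw (timestamp, event_name) records into counts per day and event."""
    counts = defaultdict(int)
    for timestamp, name in events:
        day = datetime.fromisoformat(timestamp).date().isoformat()
        counts[(day, name)] += 1
    return dict(counts)


# Hypothetical temperature readings with two missing values.
temperatures = [4.0, None, None, 7.0, 8.0]
print(interpolate_gaps(temperatures))

# Hypothetical raw tracking events.
raw_events = [
    ("2020-03-01T09:15:00", "checkout"),
    ("2020-03-01T17:40:00", "checkout"),
    ("2020-03-02T08:05:00", "signup"),
]
print(daily_event_counts(raw_events))
```

Whether to interpolate, drop, or resample remains a judgment call that depends on the dataset, exactly as it does in the field.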

- Language used

-SQL is a must have

A word of advice: if you wish to become a data analyst or a data scientist, I strongly advise you to learn SQL. When I was studying for my evolution courses using R, it was such a pain to select specific data from one column where the value of another column was equal to pink… When I started using SQL, it made so much sense. It matches your “mental” representation of your data: SQL is a declarative language in which you can retrieve several columns while combining several conditions in a “WHERE” clause. SQL comes in several flavors (MySQL, to cite one), and some engines even rely on parallelization technologies (Hadoop) to get your data even faster.
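As an illustration, the "pink" selection described above could look like the sketch below. It uses Python's built-in `sqlite3` module only so that the example runs anywhere. The `specimens` table, its columns, and its rows are made up for the occasion.

```python
import sqlite3

# In-memory database holding a hypothetical table of specimens.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE specimens (species TEXT, color TEXT, length_mm REAL, site TEXT)"
)
conn.executemany(
    "INSERT INTO specimens VALUES (?, ?, ?, ?)",
    [
        ("flatworm_a", "pink", 12.5, "reef"),
        ("flatworm_b", "brown", 8.0, "reef"),
        ("flatworm_c", "pink", 20.1, "lagoon"),
        ("flatworm_d", "pink", 5.2, "reef"),
    ],
)

# Pick specific columns, filtered by several conditions in one WHERE clause.
query = """
    SELECT species, length_mm
    FROM specimens
    WHERE color = ?
      AND site = ?
      AND length_mm > ?
    ORDER BY length_mm DESC
"""
rows = conn.execute(query, ("pink", "reef", 5.0)).fetchall()
print(rows)
conn.close()
```

The query states what is wanted (pink specimens from the reef, longer than 5 mm) and leaves the how to the database, which is what "declarative" means here.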

-Python for all

Then, once you have your data, you can play with it. This is where you can clean it, join it with other data, or aggregate it into new data using formulas. Python is one of the best languages for that, as it is really simple to understand. As someone who spent his whole academic life using R, MATLAB (and Ferret), it took me maybe 2 months to be comfortable using Python and maybe 6 months to be completely autonomous (although it never hurts to have your code reviewed).
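For a feel of what "clean, join, aggregate" means in practice, here is a small sketch using only the standard library. The purchase rows, the user-to-country mapping, and both function names are hypothetical. In real projects a library such as pandas is a common choice for this kind of work.

```python
from collections import defaultdict

# Hypothetical raw rows, e.g. as returned by a SQL query.
purchases = [
    {"user_id": 1, "amount": "9.99"},
    {"user_id": 2, "amount": " 4.50 "},
    {"user_id": 1, "amount": "20.00"},
    {"user_id": 3, "amount": ""},  # missing amount, dropped during cleaning
]
users = {1: "FR", 2: "CA", 3: "CA"}  # user_id -> country (made-up mapping)


def clean(rows):
    """Strip whitespace, convert amounts to float, and drop rows without an amount."""
    cleaned = []
    for row in rows:
        text = row["amount"].strip()
        if text:
            cleaned.append({"user_id": row["user_id"], "amount": float(text)})
    return cleaned


def revenue_by_country(rows, user_country):
    """Join each purchase to its user's country, then sum the amounts per country."""
    totals = defaultdict(float)
    for row in rows:
        country = user_country.get(row["user_id"], "unknown")
        totals[country] += row["amount"]
    return {country: round(total, 2) for country, total in totals.items()}


print(revenue_by_country(clean(purchases), users))
```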

3. Results and Interpretation

Once you understand your data and have the full picture (or at least the closest approximation of the truth), you want to share your findings with your peers. In science, we do so by means of presentations at symposia or scientific papers. Of course we can get feedback from our supervisor or reviewers, but it usually takes ages to come and it is mostly a one-way discussion. Meanwhile, in tech, you have to present your findings to people who do not have the same background as you do. Being excited about using that specific statistical clustering technique because it was the exact use case (nope, that never happened to me…) will not make them as excited as you are.

You will need to work out what the core takeaway from your findings is.

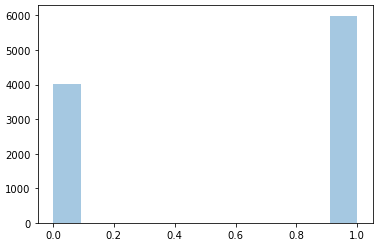

That essentialist approach led me to make very simple figures with at most 2 metrics in them. I then had to present the results in person, which led me to work on how to express myself for a more general audience.

With a simple approach, I also realized that I needed to look at the simple questions first, the “quick wins” as they say, which would then lead me towards more detailed analyses.

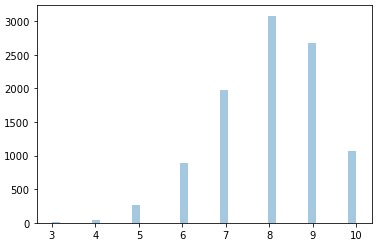

Of course, all of the above points can be found in academia, but in the tech industry, you have to do some studies from the ground up every month (sometimes every week, depending on the project). In academia, you are the expert in your field, but you only focus on it and rarely venture elsewhere. You may have a couple of presentations a year, and at most you are working on 1 to 3 papers a year. In the tech industry, you have reports to give every week. One day you can work on the acquisition cohorts of Whales (high-spending users), and the next you have to create a forecasting function that identifies users who are likely to churn (unsubscribe, cancel, or leave the product). The presentations are every month or even every week. So yeah, the pace is much more dynamic in the tech industry, but you also learn so much more, and faster.
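To give a rough idea of what those two tasks involve, here is a deliberately simplified sketch. The user records, the spend threshold that defines a whale, and the 30-day inactivity window are all assumptions chosen for illustration. The churn function is a plain rule-based flag, not a forecasting model, which would typically be trained on historical behaviour.

```python
from collections import defaultdict
from datetime import date

# Hypothetical user records: signup date, total spend, and last day seen active.
users = [
    {"id": "u1", "signup": date(2020, 1, 6), "spend": 250.0, "last_seen": date(2020, 3, 1)},
    {"id": "u2", "signup": date(2020, 1, 8), "spend": 3.0, "last_seen": date(2020, 1, 20)},
    {"id": "u3", "signup": date(2020, 1, 14), "spend": 120.0, "last_seen": date(2020, 2, 25)},
    {"id": "u4", "signup": date(2020, 1, 15), "spend": 0.0, "last_seen": date(2020, 1, 16)},
]

WHALE_SPEND = 100.0  # assumed threshold for calling a user a "whale"
INACTIVE_DAYS = 30   # assumed inactivity window before flagging churn risk


def whales_by_cohort(records, threshold=WHALE_SPEND):
    """Count whales per acquisition cohort, keyed by ISO (year, week) of signup."""
    cohorts = defaultdict(int)
    for user in records:
        if user["spend"] >= threshold:
            year, week, _ = user["signup"].isocalendar()
            cohorts[(year, week)] += 1
    return dict(cohorts)


def at_risk_of_churn(records, today, max_inactive_days=INACTIVE_DAYS):
    """Return the ids of users who have been inactive for longer than the window."""
    return [
        user["id"]
        for user in records
        if (today - user["last_seen"]).days > max_inactive_days
    ]


print(whales_by_cohort(users))
print(at_risk_of_churn(users, today=date(2020, 3, 5)))
```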

I know there are a lot of us who spent quite some time in academia and could not pursue it. Whether it was because your supervisor had a different agenda, because you were sick of struggling to afford a decent life, or because there were no opportunities where you lived, it is not the end of the world. I found all the things that I loved about science in the tech industry: flexible hours, creating your own projects, being responsible for your budget (in time) and sampling tools (homemade functions, third-party solutions), the thrill of the investigation, and, in the end, presenting your results to your peers and getting feedback on the spot that will lead to further studies.

In the meantime, nothing stops you from volunteering for an association. I realized that I had a better impact on the planet working in a marketing company and volunteering to pick up trash while running in the forest than doing Arctic bio-geo-chemical modeling… So, do not hesitate to take a leap of faith: you have nothing to lose and everything to gain.

From Science to Data Science was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Via https://becominghuman.ai/from-science-to-data-science-8e683fde3312?source=rss—-5e5bef33608a—4

source https://365datascience.weebly.com/the-best-data-science-blog-2020/from-science-to-data-science