Originally from KDnuggets https://ift.tt/2Q5qSCS

A simple explanation of Machine Learning and Neural Networks

A simple explanation of Machine Learning and Neural Networks and A New Perspective for ML Experts

This is an introduction to machine learning and neural networks in a simple and intuitive way. The concepts are explained differently from the traditional explanations of neural networks, offering a new perspective.

Deep learning, neural networks and machine learning have been the buzzwords of the past few years, and there is indeed a lot that can be done with neural networks.

There has been immense research and innovation in the field of neural networks. Here are some amazing tasks that neural networks can do with extreme speed and good accuracy:

- Image classification — For example, when given images of cats and dogs, the neural network can tell which image has a cat and which has a dog.

- Object Detection — locating different objects in a given image.

- Chatbots — Neural networks can hold conversations with humans and can carry out tasks based on a person’s typed or spoken request.

- Language translation — Translating text from one language to another.

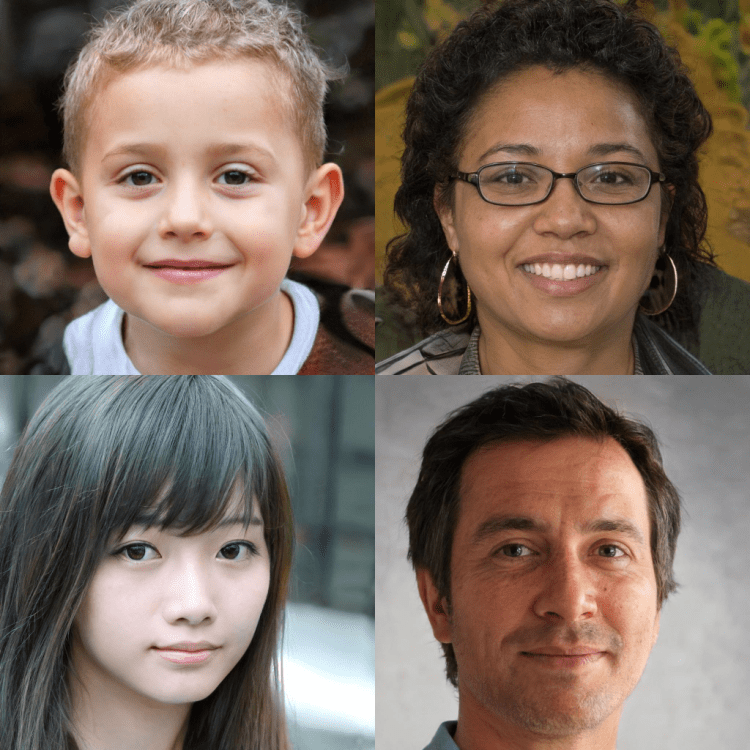

- Image generation — Generating new images of a particular type. The people in the picture below do not exist! These faces have been generated by AI!!

- Predicting stock prices

- Playing computer games

People use neural networks for numerous applications. It is important to note, however, that a particular type of neural network is generally used for each task; that is, the structure of the neural network varies from task to task.

This brings us to the question: What are neural networks?

Before we come to that, let us look at this question: What is machine learning?

What is machine learning?

Suppose we want to predict house prices in a city like Bangalore. We usually have some data about different houses, such as:

- the carpet area (in sq. ft.)

- walled area (in sq. ft.)

- house type — independent house, flat in an apartment complex, villa

- latitude and longitude (for the location of the house)

- number of floors in the house

- which floor the house is in (ground floor, 1st floor, etc.)

- facilities available — swimming pool, gym, etc.

- builder of the apartment/house

Typically, we would have a function that takes the values of these features (characteristics) as input and estimates the price of the house. In other words, we already have a function that can calculate the price of a house from these values.

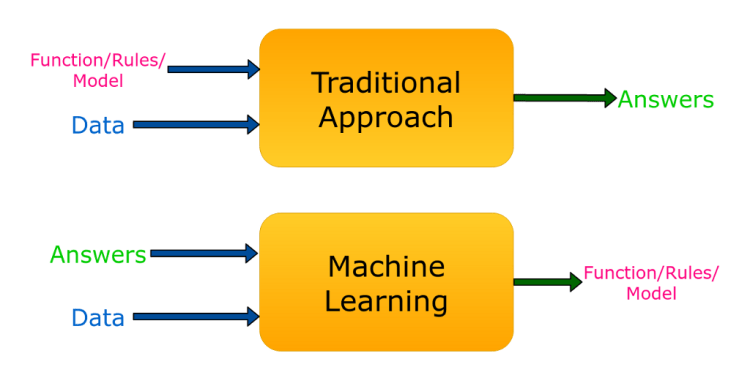

Generally, we have some data and a function. We use this function to determine some quantity (house price) that we need.

However, in many cases the data is new and we do not have any such function to estimate house prices. We might need the help of experts to determine one, and even then, such a function may not be easy to obtain.

In such situations, machine learning turns out to be of great value!

A machine learning (ML) algorithm is used to find such a function. It takes in the values of the features (the different characteristics of each house) along with the output quantity (the price of each corresponding house), and finds a function that calculates the prices of these houses. The function is chosen so that the overall error between the actual house prices and the prices calculated from the corresponding houses’ features is as small as possible.

This function produced by the ML algorithm can then be used to predict the prices of other houses as well!

The following are given as input to the machine learning algorithm to find the function:

- the data/characteristics of many houses.

- the prices of each of those houses.

The machine learning algorithm “learns” a function using this data.

When data of new houses is given, this learned function can predict the prices of the new houses.
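
To make the “learning” step concrete, here is a deliberately tiny sketch in Python. It assumes a single feature (carpet area), four made-up houses, and a brute-force search over a grid of candidate straight-line functions; the names `learn_price_function` and `total_squared_error` are hypothetical. Real ML algorithms search far more cleverly, but the idea is the same: keep the function with the least overall error on the known houses, then reuse it on new ones.

```python
# Hypothetical training data: (carpet area in sq. ft., price in rupees).
houses = [(600, 4_000_000), (850, 5_250_000), (1200, 7_000_000), (1500, 8_500_000)]

def total_squared_error(rate, base, data):
    """Sum of squared differences between actual and predicted prices."""
    return sum((price - (rate * area + base)) ** 2 for area, price in data)

def learn_price_function(data, rates, bases):
    """Toy 'learning': try every candidate (rate, base) pair and keep the one
    with the smallest total squared error on the training data."""
    best_rate, best_base = min(
        ((r, b) for r in rates for b in bases),
        key=lambda rb: total_squared_error(rb[0], rb[1], data),
    )
    # Return the learned function itself, so it can price new houses.
    return (lambda area: best_rate * area + best_base), (best_rate, best_base)

predict, params = learn_price_function(
    houses,
    rates=range(3000, 7001, 500),        # candidate price per sq. ft.
    bases=range(0, 2_000_001, 250_000),  # candidate fixed component
)
print(params)          # (5000, 1000000)
print(predict(1000))   # 6000000
```

Because the made-up prices follow price = 5000 × area + 1,000,000 exactly, the search finds a function with zero error; with real, noisy data the best candidate would only have the smallest error, not zero.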

Linear Regression

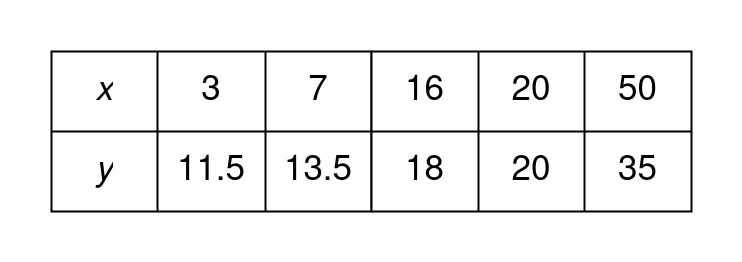

So, what functions can you think of? A very simple function is the straight line equation: y = f(x) = mx + c. Here, there is only one input value, x. Given this, we can find the output y by computing f(x).

Let us look at an example of this. There is a bicycle shop that rents bicycles with a base fare of ₹10 and an additional fare of ₹0.50 per minute. Let x be the number of minutes the bicycle is rented and y be the fare in rupees. Then, clearly, the function is y = f(x) = 0.5x + 10.

If we want to use machine learning to find this function, we have to get the fare values (y values) for many minutes (x values).

Using these values for x and y, the machine learning algorithm will return the function, that is, it will give m and c, so that we can use f(x) = mx + c.
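
The bicycle example can be checked with a short sketch: generate fares from f(x) = 0.5x + 10 for a few rental durations, then recover m and c with the standard least-squares formulas for a straight line (slope = covariance of x and y divided by the variance of x; intercept = mean of y minus slope times mean of x). The durations are made up, and the helper name `fit_line` is hypothetical.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = m*x + c; returns (m, c)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    m = cov / var
    c = mean_y - m * mean_x
    return m, c

minutes = [0, 10, 20, 30, 40, 50]            # x values: rental durations
fares = [0.5 * x + 10 for x in minutes]      # y values: fares in rupees
m, c = fit_line(minutes, fares)
print(m, c)           # 0.5 10.0
print(m * 25 + c)     # fare for 25 minutes: 22.5
```

Real fare records might contain rounding or discounts, in which case the recovered m and c would only be close to 0.5 and 10.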

The next few sections contain some math that may seem complicated to some of you. Don’t worry about it for now; just assume that such functions exist and that all of this works. It all boils down to this: a function F that takes n values as input and gives m values as output.

Extending the function to multiple inputs and outputs

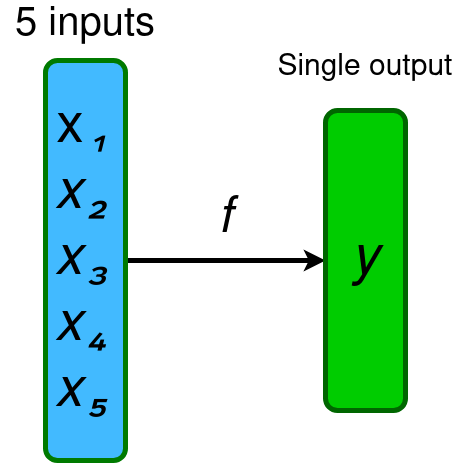

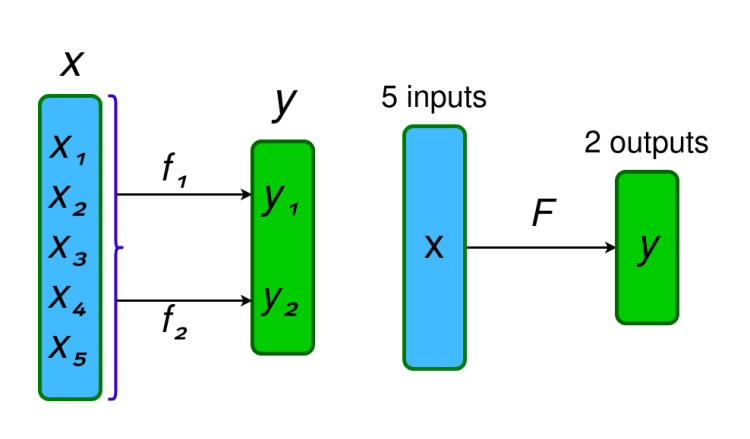

We can extend this to taking in multiple inputs as follows:

The multiple inputs would correspond to each characteristic of the house that we mentioned earlier.

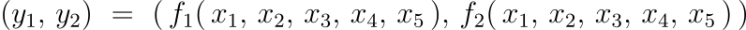

Similarly, we can also have multiple outputs being given by the function f. For example, the price of a house as well as the rent of a house could be predicted.

Here, F takes 5 values as input and gives 2 values as output.
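
One way to picture an F with 5 inputs and 2 outputs is as a 2 × 5 weight matrix W plus a bias vector b, so that the outputs are W x + b. The weights, the choice of five features and the house below are invented purely for illustration; the first output plays the role of the price and the second the monthly rent. The function name `linear_function` is hypothetical.

```python
def linear_function(W, b, x):
    """Compute y = W x + b, where W has one row per output and one column per input."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
            for row, b_i in zip(W, b)]

# Hypothetical weights: row 0 -> price (rupees), row 1 -> monthly rent (rupees).
W = [
    [5000, 2000, 100000, 50000, 300000],
    [15, 5, 500, 200, 1000],
]
b = [1_000_000, 3000]

# Inputs: carpet area, walled area, number of floors, floor number, has a pool (1/0).
house = [1000, 1200, 2, 3, 1]
print(linear_function(W, b, house))  # [9050000, 26600]
```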

Once again, as I said, don’t worry too much about the Math above and the Math that is yet to come.

We have seen what linear regression is. What happens if we perform linear regression in multiple steps?

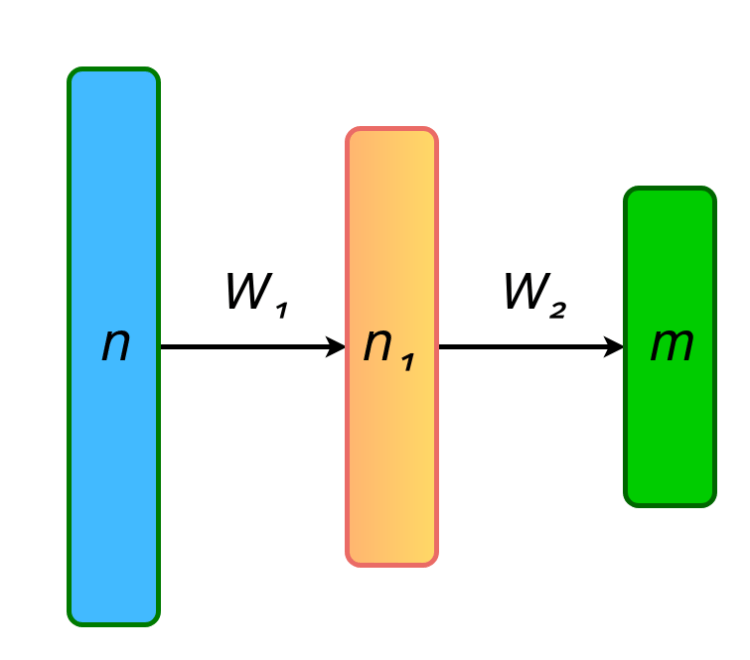

We saw that linear regression is basically a multiple-input function (and possibly multiple-output). Let us assume that there are ‘n’ input variables and ‘m’ output variables (‘m’ is 1 quite often). The ‘n’ variables are taken as input and transformed into the output ‘m’ variables through a function ‘f’ or a matrix multiplication (with a matrix, ‘W’). Here, we consider the function f and the matrix multiplication with ‘W’ as equivalent.

Instead of the above technique, let us say that the ‘n’ input variables are transformed into ‘n1’ intermediate variables by a function ‘f1’ (or a matrix ‘W1’). Then, let us say that these ‘n1’ intermediate variables are transformed into the ‘m’ output variables by a function ‘f2’ (or a matrix ‘W2’). The end user sees only the input and output variables, not the ‘n1’ intermediate variables, because these are not of much importance to them. This set of ‘n1’ variables is therefore called a hidden layer.

Similarly, instead of having one hidden layer we can have multiple hidden layers, say three hidden layers. Then, the following will be the process:

Input Layer: ‘n’ input variables

‘W1’ (or ‘f1’) transforms ‘n’ to ‘n1’

Hidden layer 1: ‘n1’ variables in this layer

‘W2’ (or ‘f2’) transforms ‘n1’ to ‘n2’

Hidden layer 2: ‘n2’ variables in this layer

‘W3’ (or ‘f3’) transforms ‘n2’ to ‘n3’

Hidden layer 3: ‘n3’ variables in this layer

‘W4’ (or ‘f4’) transforms ‘n3’ to ‘m’

Output layer: ‘m’ variables in this layer
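
The layer-by-layer process above can be sketched in plain Python. The sizes n = 5, n1 = 4, n2 = 3, n3 = 3 and m = 1 are arbitrary choices, and every weight is set to a placeholder value of 0.1 by a hypothetical `make_matrix` helper; the point is only to show that each matrix ‘Wi’ has one row per output variable and one column per input variable, and how the number of variables changes from layer to layer.

```python
def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def make_matrix(rows, cols, value=0.1):
    """Placeholder weights, just to make the shapes concrete."""
    return [[value] * cols for _ in range(rows)]

sizes = [5, 4, 3, 3, 1]  # n, n1, n2, n3, m
# W1 maps n -> n1, W2 maps n1 -> n2, W3 maps n2 -> n3, W4 maps n3 -> m.
weights = [make_matrix(sizes[i + 1], sizes[i]) for i in range(len(sizes) - 1)]

x = [1.0, 2.0, 3.0, 4.0, 5.0]   # the 'n' input variables
layer_sizes = [len(x)]
for W in weights:
    x = matvec(W, x)            # move to the next layer
    layer_sizes.append(len(x))
print(layer_sizes)  # [5, 4, 3, 3, 1]
```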

Neural Network

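In the above examples, we use matrix multiplication, which is a linear operation. Stacking several stages of linear operations makes the model larger, but the result is still a linear function: multiplying by ‘W1’ and then by ‘W2’ is the same as multiplying by the single matrix ‘W2’ × ‘W1’.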

However, only a limited family of functions can be obtained by performing linear operations alone. Thus, we introduce an element of non-linearity at each stage. This way, we will be able to achieve a much greater number and variety of functions. Ultimately, this is what a basic neural network is.
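
A small example shows why the non-linearity matters. The sketch below uses two layers with hand-picked hypothetical weights and the ReLU function max(0, v) as the non-linearity; ReLU is one common choice, assumed here, since the article does not name a specific one. Without ReLU, the two layers collapse into a single 1 × 1 matrix whose value is 0, so every input maps to 0. With ReLU in between, the same weights compute |x|, which no single matrix multiplication can produce.

```python
def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def matmul(A, B):
    # (A B)[i][j] = sum over k of A[i][k] * B[k][j]
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def relu(v):
    return [max(0.0, vi) for vi in v]

W1 = [[1.0], [-1.0]]   # 1 input -> 2 hidden variables
W2 = [[1.0, 1.0]]      # 2 hidden variables -> 1 output

def linear_net(x):
    return matvec(W2, matvec(W1, [x]))[0]

def relu_net(x):
    return matvec(W2, relu(matvec(W1, [x])))[0]

collapsed = matmul(W2, W1)  # single matrix equivalent to the purely linear net
print(collapsed)                                  # [[0.0]]
print([linear_net(x) for x in (-2.0, 0.0, 3.0)])  # [0.0, 0.0, 0.0]
print([relu_net(x) for x in (-2.0, 0.0, 3.0)])    # [2.0, 0.0, 3.0]
```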

Training the Neural Network or the Linear Regression Model

Let us say that we have created a neural network model or a linear regression model. How can we ensure that its predictions are accurate?

- The model takes in the data (features/characteristics of each house) and the corresponding output values (house prices).

- It predicts its own output value (predicts a house price for a house based on the features of the house).

- It compares the predicted value with the actual value.

- It minimizes the overall error between the predicted values and the actual values over many houses (say, 10,000 houses). [This step is actually a long process and requires a separate detailed explanation. For further study, check out “Gradient Descent”.]

- The model is now ready to be used to predict the value (house price) of new data (new houses).
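
The steps above can be sketched as a gradient descent loop for a one-feature linear model. The data is invented (carpet area in thousands of sq. ft., price in millions of rupees, generated from price = 5 × area + 1), and the learning rate and number of steps are arbitrary values that happen to work for this data. For a neural network the loop has the same shape, but the gradients are computed by backpropagation rather than by the hand-derived formulas used here.

```python
# Hypothetical data: carpet area (thousands of sq. ft.) and price (millions of rupees).
areas = [0.6, 0.85, 1.2, 1.5]
prices = [4.0, 5.25, 7.0, 8.5]

def train(xs, ys, learning_rate=0.1, steps=5000):
    """Fit price = w * area + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Predict every price and compare it with the actual price.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Take a small step that reduces the overall error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

w, b = train(areas, prices)
print(round(w, 3), round(b, 3))   # 5.0 1.0
# Use the trained model on a new house of 1,000 sq. ft.
print(round(w * 1.0 + b, 3))      # 6.0
```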

When we change the structure of the neural network that we saw above, we can make it suited to different kinds of tasks. This is what we call deep learning (because the network has some depth due to its series of layers). Deep learning and machine learning are finding their way into almost every field of industry, so it is worth learning the basics and using them to your advantage.

Some other concepts that you may want to look up next to understand machine learning and deep learning are:

Very basic concepts — Accuracy, Mean Squared Error, Classification, Regression, Confusion Matrix

Intermediate concepts — Decision Trees, Gradient Descent, Logistic Regression

More advanced concepts — Convolutional Neural Networks (CNNs), Support Vector Machines (SVM)

Note: I have written this article solely based on my knowledge and my understanding of machine learning. If you feel that there is anything lacking anywhere in this article, please feel free to get back to me so that I can improve it for others.

All equation images have been created by me using this website: https://latex2image.joeraut.com/

All other images used have either been created by me or have a Creative Commons license.

A simple explanation of Machine Learning and Neural Networks was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Artificial Intelligence in Radiology — Advantages, Use Cases & Trends

The radiology department of an average healthcare facility is likely searching for improvements. Even before COVID-19, 45% of radiologists experienced burnout at some point in their career. They felt overwhelmed by the administrative burden and the large number of images they had to check manually, which could reach a hundred scans per day. Additionally, radiology practice lacks non-invasive methods for tissue classification, and invasive procedures take time and cause stress to patients.

Luckily, AI healthcare solutions are coming to the rescue. The global AI radiology market was valued at $21.5 million in 2018, and it is forecast to reach $181.1 million in 2025, growing at a staggering CAGR of 35.9%. However, despite the numerous advantages of AI in radiology, there are still challenges preventing its wide deployment. How can machine learning be properly trained to aid radiology? Where does AI stand when it comes to ethics and regulations? How can you make a strong business case for investing in artificial intelligence in radiology?

What is AI, and how is it used in radiology?

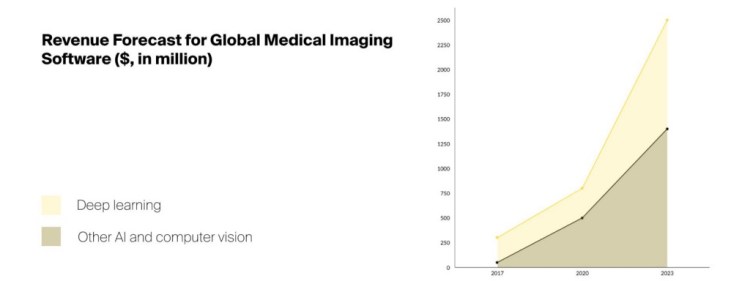

Artificial intelligence is a field of science that pursues the goal of creating intelligent applications and machines that can mimic human cognitive functions, such as learning and problem-solving. Machine learning (ML) and deep learning (DL) are subsets of AI. Machine learning involves training algorithms to solve tasks independently using pattern recognition. For example, researchers can apply ML algorithms to radiology by training them to recognize pneumonia in lung scans. Deep learning solutions rely on neural networks with artificial neurons modeled after those of the human brain. These networks have multiple hidden layers and can derive more insights than linear algorithms. Deep learning algorithms are widely used to reconstruct medical images and enhance their quality. As presented in the graph below, deep learning is gaining popularity in radiology. This AI subset has proven to be more efficient in handling medical data and extracting useful insights.

Two ways of using AI in radiology:

1. Programming an algorithm with predefined criteria supplied by experienced radiologists. These rules are hardwired into the software and enable it to perform straightforward clinical tasks.

2. Letting an algorithm learn from large volumes of data with supervised or unsupervised techniques. The algorithm extracts patterns by itself and can come up with insights that escape the human eye.
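
The difference between the two approaches can be illustrated with a toy classifier on a single made-up measurement (lesion diameter in mm). Both the data and the 10 mm rule are hypothetical placeholders, not clinical criteria. The hardwired rule never changes, while the learned version picks whichever threshold classifies the most labeled examples correctly.

```python
# Hypothetical labeled examples: (lesion diameter in mm, 1 = abnormal, 0 = normal).
examples = [(3, 0), (5, 0), (6, 0), (7, 1), (8, 1), (9, 0), (12, 1), (15, 1)]

# Approach 1: a hardwired rule (the 10 mm cut-off is an invented placeholder).
def rule_based(diameter_mm):
    return 1 if diameter_mm > 10 else 0

# Approach 2: learn the cut-off from labeled data.
def learn_threshold(data):
    candidates = sorted({d for d, _ in data})
    def accuracy(t):
        return sum((1 if d > t else 0) == label for d, label in data) / len(data)
    return max(candidates, key=accuracy)

t = learn_threshold(examples)

def learned(diameter_mm):
    return 1 if diameter_mm > t else 0

def accuracy_of(classifier, data):
    return sum(classifier(d) == label for d, label in data) / len(data)

print(t, accuracy_of(rule_based, examples), accuracy_of(learned, examples))
# 6 0.75 0.875
```

On this invented data, the learned threshold picks up that 7 to 8 mm lesions tend to be abnormal, a pattern the fixed rule misses.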

Top 5 applications of AI in radiology

Computer-aided detection (CAD) was the first application of radiology AI. CAD has a rigid scheme of recognition and can only spot defects present in the training dataset. It can’t learn autonomously, and every new skill needs to be hardcoded. Since that time, AI has evolved tremendously and can do more to help radiologists. Some of the medical digital image platforms enable users to manage different types of images, manipulate them, connect to third-party health systems, and more. So, what are the advantages AI brings to radiology?

1. Classifying brain tumors

Brain cancer, along with other types of nervous system cancers, is the 10th leading cause of death in the US. Conventionally, before the operation, patients with a brain tumor and their surgeons are left in the dark: neither knows which kind of tumor is present or what treatment the patient will have to undergo. The first step is to remove as much of the affected brain mass as possible. A tumor sample is obtained from this mass and analyzed to classify the tumor. This intraoperative pathology analysis lasts around 40 minutes as the pathologist processes and stains the sample, and meanwhile the surgeon is idle. After receiving the results, the surgeon must quickly decide on the course of action. Introducing AI in radiology into this process reduces the tumor classification time to about three minutes, and classification can comfortably be done in the operating room. According to Todd Hollon, Chief Neurological Resident at Michigan Medicine, “It’s so quick that we can image many specimens from right by the patient’s bedside and better judge how successful we’ve been at removing the tumor.”

As another example, a recent study conducted in the UK discovered a non-invasive way of classifying brain tumors in children using machine learning and diffusion-weighted imaging. This approach uses the diffusion of water molecules to obtain contrast in MRI scans. The apparent diffusion coefficient (ADC) map is then extracted and fed to machine learning algorithms. The technique can distinguish the three main types of brain tumor found in the posterior fossa region of the brain. Such tumors are the most common cancer-related cause of death among children. If surgeons know in advance which type the patient has, they can prepare a more efficient treatment plan.
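
For readers curious about the ADC map mentioned above: under the common mono-exponential model of diffusion-weighted MRI, the signal with diffusion weighting b is S_b = S_0 · exp(−b · ADC), so each voxel’s ADC can be estimated as ln(S_0 / S_b) / b. The sketch below applies this voxel by voxel to two tiny made-up 2 × 2 images. The b-value of 1000 s/mm², the signal values and the function name `adc_map` are assumptions for illustration; the UK study’s actual processing pipeline may differ.

```python
import math

def adc_map(s0, sb, b_value):
    """Estimate the ADC of every voxel as ln(S0 / Sb) / b.

    s0 and sb are same-sized 2D images (lists of rows) acquired without and with
    diffusion weighting; all signal values are assumed to be positive.
    """
    return [
        [math.log(p0 / pb) / b_value for p0, pb in zip(row0, rowb)]
        for row0, rowb in zip(s0, sb)
    ]

b_value = 1000.0                                  # s/mm^2, an assumed b-value
true_adc = [[0.0008, 0.0012], [0.0010, 0.0020]]   # mm^2/s, made-up values
s0 = [[1000.0, 900.0], [1100.0, 950.0]]           # made-up baseline signals

# Simulate the diffusion-weighted image with the mono-exponential model.
sb = [
    [p0 * math.exp(-b_value * adc) for p0, adc in zip(row0, row_adc)]
    for row0, row_adc in zip(s0, true_adc)
]

estimated = adc_map(s0, sb, b_value)
print([[round(v, 6) for v in row] for row in estimated])
# [[0.0008, 0.0012], [0.001, 0.002]]
```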

2. Detecting hidden fractures

The FDA started clearing AI algorithms for clinical decision support in 2018. Imagen’s OsteoDetect software was among the first the agency approved. This program uses AI to detect distal radius fractures in wrist scans. The FDA granted its clearance after Imagen submitted a study of its software’s performance on 1,000 wrist images. Confidence in OsteoDetect increased after 24 healthcare providers using the tool confirmed that it helped them detect fractures.

Another use of AI in radiology is spotting hip fractures, a type of injury common in elderly patients. Traditionally, radiologists use X-rays to detect this type of injury. However, such fractures are hard to spot, as they can hide under soft tissues. A study published in the European Journal of Radiology demonstrates the potential of employing a Deep Convolutional Neural Network (DCNN) to help radiologists spot fractures. A DCNN can identify defects in MRI and CT scans that escape the human eye. Researchers conducted an experiment in which human radiologists attempted to identify hip fractures from X-rays while the AI read CT and MRI scans of the same hips. The radiologists spotted 83% of the fractures, while the DCNN’s accuracy reached 91%.

3. Recognizing breast cancer

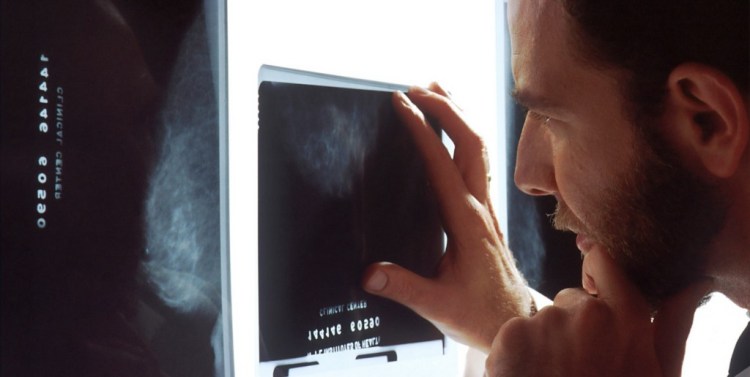

Breast cancer is the second leading cause of cancer death among women in the US. Despite the severity of this disease, doctors miss up to 40% of breast lesions during routine screenings. At the same time, only around 10% of women with suspicious mammograms turn out to have cancer. This leads to frustration, depression, and even invasive procedures that healthy women are forced to undergo when wrongly diagnosed with cancer. Radiology AI tools can improve this situation. A study conducted by Korean academic hospitals used an AI-based tool developed by Lunit to aid radiologists in mammography screenings. The study found that radiologists’ accuracy increased from 75.3% to 84.8% when they used AI. The algorithm was particularly good at detecting early-stage invasive cancers.

Some women with developing breast cancer don’t experience any symptoms, so women in general are advised to have regular mammogram screenings. However, due to the pandemic, many couldn’t attend their routine checkups. According to Dr. Lehman, a radiologist at Massachusetts General Hospital, about 20,000 women skipped their screenings during the pandemic. On average, five out of every 1,000 screened women exhibit early signs of breast cancer, which equates to about 100 undetected cancer cases. To remedy the situation, Dr. Lehman and her colleagues used radiology AI to predict which patients were likely to develop cancer. The algorithm analyzed previous mammogram scans available at the hospital and combined them with relevant patient information, such as previous surgeries and hormone-related factors. The women whom the algorithm flagged as high risk were persuaded to come in for screening, and the results showed that many of them had early signs of cancer.
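
The arithmetic behind these figures can be spelled out in a few lines. The 20,000 skipped screenings, the rate of five per 1,000 and the “around 10%” share come from the text; the batch of 1,000 suspicious mammograms is a hypothetical number chosen to make the proportions easy to read.

```python
# Missed cases during the pandemic, using the figures quoted above.
skipped_screenings = 20_000
early_signs_per_1000 = 5
missed_cases = skipped_screenings * early_signs_per_1000 // 1000
print(missed_cases)  # 100

# False alarms: only around 10% of suspicious mammograms turn out to be cancer.
suspicious_mammograms = 1_000   # hypothetical batch size
cancer_share = 0.10
with_cancer = round(suspicious_mammograms * cancer_share)
false_alarms = suspicious_mammograms - with_cancer
print(with_cancer, false_alarms)  # 100 900
```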

4. Detecting neurological abnormalities

Artificial intelligence in radiology has the potential to diagnose neurodegenerative disorders such as Alzheimer’s, Parkinson’s, and amyotrophic lateral sclerosis (ALS) by tracking retinal movements. This analysis takes around 10 seconds. Another approach to spotting neurological abnormalities is speech analysis, since Alzheimer’s changes patients’ language patterns; for instance, people with this disorder tend to replace nouns with pronouns. Researchers at Stevens Institute of Technology developed an AI tool based on convolutional neural networks and trained it on text written by both healthy and affected individuals. The tool recognized early signs of Alzheimer’s in elderly patients solely from their speech patterns with 95% accuracy. Such software helps doctors identify which patients with mild cognitive impairment will go on to develop degenerative diseases and how severely their cognitive and motor skills will decline over time. This gives at-risk patients an opportunity to arrange for care while they still can.

5. Offering a second opinion

AI algorithms can run in the background, offering a second opinion when radiologists disagree on a problematic medical image. This practice reduces decision-making stress and helps radiologists learn to work side by side with AI and appreciate its benefits. Mount Sinai Health System in New York City used AI to read radiology results alongside human specialists as a “second opinion” option for detecting COVID-19 in CT scans. They claim to be the first institution to combine AI and medical imaging for detecting the novel coronavirus. Researchers trained the AI algorithm on 900 scans. Even though CT scans are not the primary way of detecting COVID-19, the tool can pick up on mild signs of the disease that human eyes can’t notice. This AI model provides a second opinion when a CT scan shows negative results or nonspecific findings that radiologists can’t classify.

Challenges on the way to AI deployment in radiology

After viewing the exciting AI applications in radiology, one could assume that the sky’s the limit for this technology. It is indeed very promising, but there are difficulties in applying machine learning in radiology.

Development hurdles

Availability of training datasets

To function properly, machine learning algorithms in radiology need to be trained on large amounts of medical images; the more, the better. But in the medical field, it is difficult to gain access to such datasets. For comparison, a typical non-medical imaging dataset can contain up to 100,000,000 images, while medical imaging sets rarely exceed about 1,000 images.

Labeling

Another problem is producing labeled datasets for supervised training. Medical image annotation is a very time-consuming and labor-intensive process: radiologists and other medical experts must manually assign the appropriate labels for the given AI application. Structured labels could potentially be extracted automatically from radiology reports using natural language processing, but even then, radiologists will most likely need to review the results.

Customization

Opting for existing algorithms instead of developing custom ones can also be problematic. Many successful deep learning models available on the market are trained on 2D images, while CT scans and MRIs are 3D. This extra dimension poses a problem, and the algorithms need to be adjusted.

Technological limitations

Finally, AI technology itself leaves room for doubt. Computing power has been doubling every two years. However, according to Wim Naude, a business professor from the Netherlands, this established pattern is slowing down. Consequently, we may not have the processing power and multitasking abilities needed to take over the broad range of tasks that an average radiologist performs. To achieve such capabilities, AI’s silicon-based transistors would have to be replaced with technology such as organic biochips, which is still in its infancy.
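
One common workaround for the 2D-versus-3D mismatch (alternatives include switching to 3D convolutions) is to run a 2D model on each slice of the 3D scan and combine the per-slice results. The sketch below assumes a scan stored as a nested list of shape depth × rows × cols, uses a hypothetical `score_slice` function that simply averages pixel intensities as a stand-in for a real 2D model, and takes the highest slice score as the scan-level score.

```python
def score_slice(slice_2d):
    """Placeholder for a hypothetical pretrained 2D model: mean pixel intensity."""
    values = [v for row in slice_2d for v in row]
    return sum(values) / len(values)

def score_volume(volume):
    """Run the 2D model on every slice of a 3D scan (depth x rows x cols) and
    return (highest slice score, index of that slice)."""
    slice_scores = [score_slice(s) for s in volume]
    best = max(range(len(slice_scores)), key=slice_scores.__getitem__)
    return slice_scores[best], best

# A made-up 3 x 2 x 2 "scan".
volume = [
    [[0, 0], [0, 0]],
    [[1, 3], [2, 2]],
    [[0, 1], [0, 1]],
]
print(score_volume(volume))  # (2.0, 1)
```

Treating slices independently ignores context between neighboring slices, which is one reason adapting the model itself to 3D input is often preferred.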

User experience can use a makeover

One of the most common remarks radiologists make is about their rough experience with medical imaging software. It takes many clicks, long waiting times, and a thorough manual study to accomplish even a simple task. Medical programs focus on the technical aspect of performing the job, but their interface is counter-intuitive and not user-friendly.

Complexity in identifying business use cases for acquiring radiology software

One of the most significant barriers to deploying artificial intelligence in radiology is convincing decision-makers that AI is worth the investment. This technology requires hefty investments upfront, but it does not pay off quickly: radiologists will need time to learn how to use it, and examples of successful AI adoption in clinical practice are still limited. If you concentrate solely on the radiology department, AI tools will help radiologists read scans and produce reports faster, which is only a matter of efficiency and does not justify such a big investment. The solution here is to broaden your perspective and look at the overall picture. As Hugh Harvey, the Managing Director at Hardian Health, said,

“Radiology is not stand alone. Almost all departments use radiology, so the return on investment may not be only in radiology. Health economics studies must be across departments and stakeholders.”

Note that reimbursement opportunities are opening up. In September 2020, the Centers for Medicare & Medicaid Services made its first approval of reimbursement for AI-augmented medical care. Reimbursement will influence AI’s ability to affect radiology: it is easier to invest in AI knowing that part of the cost can be reimbursed.

The black-box nature of AI

Often, researchers and practitioners don’t fully understand how AI algorithms learn and make decisions. This is known as the black-box problem.

Continuous learning

When an ML model continues learning independently, it can take irrelevant criteria into account, and when the tool doesn’t explain its decision logic, radiologists can’t spot these self-added factors. For example, an algorithm may learn that implanted medical devices and scars are signs of health issues. This is a correct assumption, but the algorithm might then assume that patients lacking these marks are healthy, which is not always true. Another example comes from the Icahn School of Medicine. A team of researchers developed a deep learning algorithm to identify pneumonia in X-ray scans. They were baffled to see the software’s performance decline considerably when it was tested with scans from other institutions. After a lengthy investigation, they realized the program was using how common pneumonia is at each institution as a factor in its decisions, which is obviously not what the researchers wanted.

Biased datasets

Biased training datasets also present a problem. If a tool is mainly trained on medical images of patients with a specific racial profile, it will not perform as well on others; for example, software trained on images of white patients will be less precise for people of color. Also, algorithms trained and used at one institution need to be treated with caution when transferred to another organization, as the labeling style will be different. A study by Harvard discovered that algorithms trained on CT scans can even become biased toward particular CT machine manufacturers.
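
A simple safeguard against both problems described above is to report a model’s performance separately for each institution, scanner, or population group rather than as one overall number. The sketch below uses made-up predictions from two hypothetical hospitals; the function names and the 0.1 tolerance for flagging a gap are arbitrary assumptions.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """records: iterable of (group, predicted_label, true_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}

def groups_lagging_behind(accuracies, max_gap=0.1):
    """Groups whose accuracy trails the best group by more than max_gap."""
    best = max(accuracies.values())
    return [group for group, acc in accuracies.items() if best - acc > max_gap]

# Made-up (group, prediction, ground truth) triples.
records = [
    ("hospital_A", 1, 1), ("hospital_A", 0, 0), ("hospital_A", 1, 1), ("hospital_A", 0, 0),
    ("hospital_B", 1, 0), ("hospital_B", 0, 0), ("hospital_B", 0, 1), ("hospital_B", 1, 1),
]
acc = accuracy_by_group(records)
print(acc)                         # {'hospital_A': 1.0, 'hospital_B': 0.5}
print(groups_lagging_behind(acc))  # ['hospital_B']
```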

When radiologists don’t receive an explanation of a particular AI decision, their trust in the system will decline.

Loose ethics and regulations

There are several ethical and regulatory issues associated with the use of AI in radiology.

Changing behavior

Machine learning algorithms are challenging to regulate because their outcome is hard to predict. A drug, for example, mostly works in a similar way each time, so we can anticipate its outcome. In contrast, ML tools tend to learn on the fly and adapt their behavior.

Who is responsible?

Another issue up for debate is who carries the final responsibility if AI leads to a wrong diagnosis and the prescribed treatment causes harm. Due to AI’s black-box nature, the radiologist often can’t explain the recommendations delivered by artificial intelligence tools. So, should they follow these recommendations, no questions asked?

Permissions and credit sharing

The third hurdle is the use of patient data for AI training. There is a need to obtain, and re-obtain, patient consent and to offer a reliable and compliant data storage facility. Also, if you trained AI algorithms on patient data and then sold them for a profit, are the patients entitled to a share of it? Currently, we rely on the goodwill of the AI software developers and researchers who train these tools to deliver an unbiased, reliable product that meets the appropriate standards. Instead, healthcare facilities adopting AI need to arrange regular audits of the product to make sure it is still useful and compliant.

Artificial intelligence future in radiology

Many are wondering how AI will affect radiology and whether it will take over this field and replace human physicians. The answer is no. In its current capacity, AI is not powerful enough to solve all the complex clinical problems radiologists deal with daily. As Elad Walach, the CEO of the Tel Aviv-based startup Aidoc, puts it, “AI solutions are becoming very good at doing one thing very well. But because human biology is complex, you typically have to have humans who do more than one thing really well.”

Radiologists will not go extinct, but the scope of their work will change. AI will take over routine administrative tasks, such as reporting, and will advise radiologists on decision-making. According to Curtis Langlotz, a radiologist at Stanford, “AI won’t replace radiologists, but radiologists who use AI will replace radiologists who don’t.” To make radiologists comfortable using AI, education policymakers will need to implement some changes. It would be helpful to teach radiology students how to integrate AI into their clinical practice, and this topic should be part of their curriculum.

Another prediction for artificial intelligence in radiology is that it will augment the abilities of doctors in developing countries. For example, researchers at Stanford University are building a tool that will enable physicians to take pictures of an X-ray film using their smartphones. Algorithms underlying this tool will scan the film for tuberculosis and other problems. The app’s benefit is that it works with X-ray films and doesn’t require the advanced digital scans that are lacking in poor countries, not to mention that hospitals in these countries might not have radiologists at all. Artificial intelligence’s future in radiology is promising, but the collaboration is still in its infancy.
John Banja, professor in the Center of Ethics at Emory University, said: “It remains anyone’s guess as to how AI applications will be affected by their integration with PACS, how liability trends or regulatory efforts will affect AI, whether reimbursement for AI will justify its use, how mergers and acquisitions will affect AI implementation, and how well AI models will accommodate ethical requirements related to informed consent, privacy, and patient access.”

Take-away message

If you are a decision-maker at a medical tech company and you want to develop AI-based radiology solutions, here are some steps you can take during the fundraising, development, and support stages to improve your chances of success.

Fundraising:

- Coming up with a strong business case is a challenge. Focus on the long-term benefits of AI to the whole clinic, not only to the radiology department.

Development:

- Consult experienced radiologists on the rules you want to hardwire into your algorithms, especially if your developers don’t have a medical background.

- Diversify your training data. Use medical images from different population cohorts to avoid bias.

- Customize your training datasets to the location where you want to sell your software. If you are targeting a particular medical institution, gather as many details as possible. Information, such as the type of CT scanners they are using, will help you deliver more effective algorithms.

- Overcome the black-box problem by offering some degree of decision explanation. For example, you can use rule extraction, a data mining technique that can interpret models generated by shallow neural networks.

- Work on the user experience aspect of your tool. Most radiology software available on the market is not user-friendly. If you can pull it off, your product will stand out among the competition.

Support:

- Suggest organizing regular audits after clients deploy your tools. Machine learning algorithms in radiology continue to learn, and their behavior adapts accordingly. With audits, you will make sure they are still fit for the job.

- Monitor updates on relevant regulations and new reimbursement options.
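
What such an audit checks can be sketched very simply: compare the tool’s accuracy on recently confirmed cases with the accuracy measured when it was deployed, and flag it for review if the drop exceeds a tolerance. The function name `audit`, the 0.05 tolerance and the example numbers are hypothetical; a real audit would also cover regulatory compliance, subgroup performance and more than a single metric.

```python
def audit(baseline_accuracy, recent_outcomes, tolerance=0.05):
    """recent_outcomes: booleans, True when a radiologist confirmed the AI finding."""
    recent_accuracy = sum(recent_outcomes) / len(recent_outcomes)
    return {
        "recent_accuracy": recent_accuracy,
        # Flag the tool if accuracy dropped by more than the tolerance.
        "needs_review": baseline_accuracy - recent_accuracy > tolerance,
    }

recent = [True] * 16 + [False] * 4   # made-up: 16 of the last 20 findings confirmed
result = audit(0.90, recent)
print(result)  # {'recent_accuracy': 0.8, 'needs_review': True}
```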

If you want to learn more about AI applications in radiology and how to overcome deployment challenges, feel free to contact ITRex experts.

Artificial Intelligence in Radiology — Advantages, Use Cases & Trends was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Why Kaggle Competitions move you away from real world Data Science problems

6 reasons why learning through Kaggle competitions does not train you for real-world data science problems.

Continue reading on Becoming Human: Artificial Intelligence Magazine »

The Most In-Demand Skills for Data Scientists in 2021

Originally from KDnuggets https://ift.tt/3tpVMV4

ETL in the Cloud: Transforming Big Data Analytics with Data Warehouse Automation

Originally from KDnuggets https://ift.tt/2RxcovL

Is Your Model Overtrained?

Originally from KDnuggets https://ift.tt/3x5yiXw

source https://365datascience.weebly.com/the-best-data-science-blog-2020/is-your-model-overtained

How to Get Good Training Data for ML Project?

Data Labeling Service — How to Get Good Training Data for ML Project?

Four Customer Pain Points in Getting Unbiased Training Data

As AI products are commercialized, autonomous driving, face recognition, security, and other fields have become popular application scenarios, and AI companies have begun to focus on their ability to deploy models in these specific scenarios.

As the foundation of the AI industry, high-quality training data is one of the decisive factors in whether a model can be launched successfully.

Industry statistics project that the amount of data generated in 2025 will be as high as 163 ZB, 90% of which will be unstructured. Unstructured data can only be “awakened” (made usable for training) through cleaning and labeling. This potential and the large demand keep the data labeling service industry booming and expanding.

Large-scale, highly customized data products are becoming the main form of service in the data labeling industry. However, because the barrier to entry is low and service quality is uneven, clients often run into pain points such as data quality, efficiency, data security, and customer service when choosing outsourcing partners.

1. Data quality

Supervised training of deep learning algorithms relies heavily on annotated data, and the quality of the dataset directly determines the performance of the AI model.

However, the data annotation industry has serious data quality problems. Reportedly, the current first-time pass rate is below 50% and the third-time pass rate is below 90%, which falls far short of what AI companies need.

Clients want data service companies to improve the first-time pass rate and significantly reduce rework.
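The pass rates above are cumulative: the share of labeled items accepted by quality review within the first, second, or third submission. A minimal sketch of how a team might compute them from review logs, assuming each item records the review round in which it was accepted (or `None` if it never passed). The log format and the numbers are hypothetical.

```python
from typing import List, Optional

def cumulative_pass_rates(accepted_round: List[Optional[int]],
                          max_round: int = 3) -> List[float]:
    """accepted_round[i] is the review round (1, 2, 3, ...) in which item i
    passed QA, or None if it never passed. Returns the cumulative pass rate
    after each of rounds 1..max_round."""
    total = len(accepted_round)
    if total == 0:
        return [0.0] * max_round
    rates = []
    for r in range(1, max_round + 1):
        passed = sum(1 for a in accepted_round if a is not None and a <= r)
        rates.append(passed / total)
    return rates

# Hypothetical review log for a batch of 10 annotated images.
log = [1, 2, 1, 3, None, 2, 1, 3, 2, 1]
first, second, third = cumulative_pass_rates(log)
print(f"first-time: {first:.0%}, second: {second:.0%}, third-time: {third:.0%}")
```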

2. Efficiency

At present, the two main operating models for data labeling are “crowdsourcing” and “subcontracting”, both of which make it difficult to manage annotation teams directly and effectively. As a result, project delays have become common.

For customers, a project delay means losing their first-mover advantage in a fiercely competitive market. Therefore, they expect data service providers to have an efficient project management system that improves efficiency and completes projects on schedule.

3. Data security

Labeling projects frequently handle sensitive data, such as face images and license plates, that could be exposed. Therefore, storing and transmitting this data requires a high level of security.

Clients expect data service providers to have clear and specific security management practices and to pay close attention to processes such as data transmission, storage, and destruction.

4. Management skills

Under the “crowdsourcing” and “subcontracting” models, companies with weak management skills struggle to deliver high-quality data when multiple projects are running at the same time.

Therefore, clients want data service providers to establish a well-managed, closed-loop labeling process that improves the project experience.

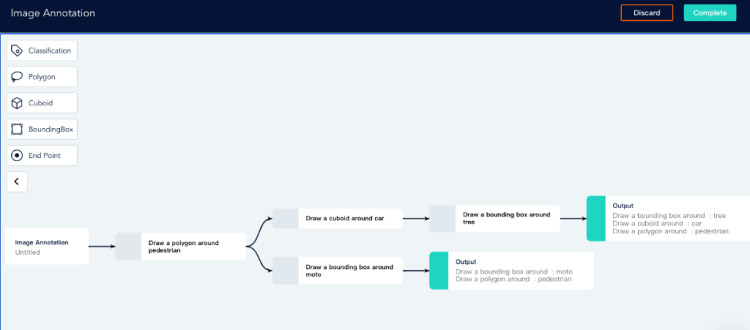

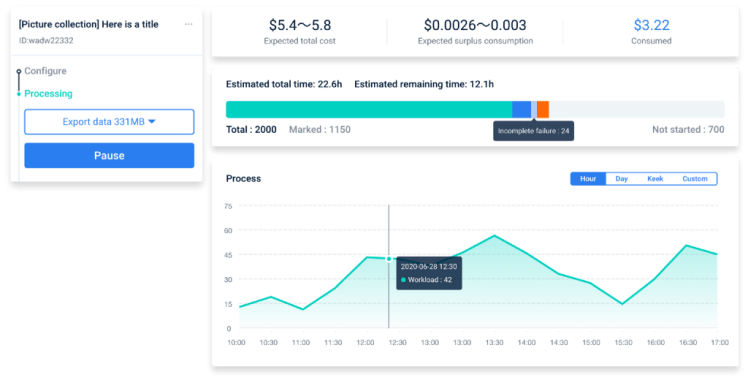

ByteBridge: A Human-Powered Data Labeling SaaS Platform

ByteBridge is a SaaS labeling platform. Its biggest advantage is that clients can decide for themselves when to start their projects and get their results back instantly.

- Set labeling rules, iterate data features, attributes, and task flows, scale up or down, make changes.

- Monitor the labeling progress and get the results in real-time on our dashboard.

These labeling tools are available on the dashboard: Image Classification, 2D Bounding Box, Polygon, Cuboid.

Once the task flow is settled, the project can start within 24 hours. A medium-sized project labeling 10,000 images takes less than 1 business day.

If you need data labeling and collection services, please have a look at bytebridge.io, where clear pricing is available.

Please feel free to contact us: support@bytebridge.io


How to Get Good Training Data for ML Project? was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

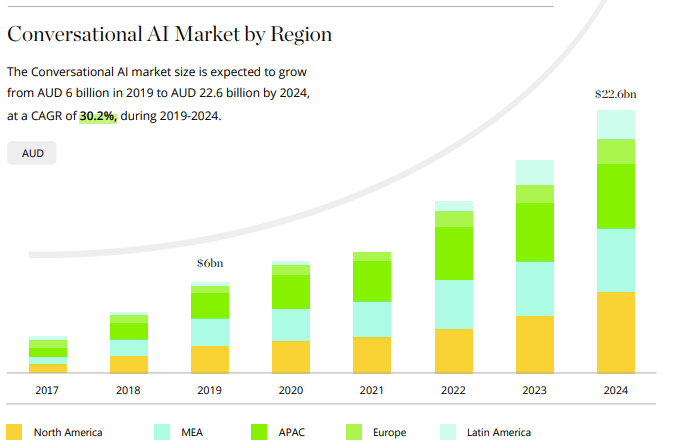

5 Ways Conversational AI is Shaping the Future of Learning

The impact of conversational AI is becoming more noticeable, as shown by the growth of the conversational AI market, which is projected to reach AUD 22.6 billion, or USD 17 billion, by 2024:

Source: Deloitte

We already know how significant the role of conversational AI is in healthcare, media, and financial services. The business world seems to be benefiting from conversational AI the most, as this technology powers many business operations, including marketing and customer support.

But where does education stand? How is conversational AI shaping the future of learning?

Let’s explore this topic a bit more.

What Does Research Say About Practical Applications of Conversational AI?

As you know, conversational AI is the technology that lets computers recognize natural language, decipher and understand it, find the right response, and replicate human speech. Conversational AI usually involves chatbots and virtual assistants, which can come in handy in the learning process.

How?

A study published in the Computers & Education journal explores different uses of conversational AI in education. It references Lars Satow, who developed a model of learning facilitation by AI teaching assistants. According to this model, conversational AI will:

● send personalized messages to welcome new learners

● recommend learning materials and suggest possible tasks to improve learning

● respond to general topic-related questions asked by students

● establish the requirements for the students to meet learning objectives

● provide personalized comments

● analyze individual learning requests

These activities create a multi-functional learning environment that facilitates learning, both online and in a classroom setting. The study has determined that the current state of conversational AI can perform tasks from level one to level four of Lars Satow’s model, which shows that AI’s potential is already high enough to be used in education on a broader scale.

With such a significant potential in mind, how can conversational AI impact the future of learning?

Let’s take a look at a few possible scenarios.


1. Providing Students With Accurate Information

It’s common to believe that students are quite tech-savvy and familiar with how to use technology for their benefit. But even though it takes no time for a student to figure out the latest Samsung smartphone, most of them still don’t know how to look for credible information online.

According to research, students fail to tell a trustworthy website apart from an untrustworthy one. In a survey, students didn’t name citations or article sponsorship as key factors in determining a source’s credibility. In addition, 80% of the 203 students surveyed believed that sponsored content was completely trustworthy.

How can conversational AI help here?

You can program a chatbot or a virtual assistant to identify search queries submitted by the students and provide links to credible resources online. These can be links to public online libraries, scientific websites, .edu links, and other resources that don’t spread misinformation.
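A minimal sketch of the link-filtering part of such a chatbot, assuming a hand-maintained allowlist of trusted domain suffixes (such as .edu) and specific trusted sites. The allowlist entries and candidate URLs are illustrative only, and a domain check is a crude proxy for credibility that a real deployment would need to refine.

```python
from typing import List
from urllib.parse import urlparse

# Illustrative allowlist: trusted suffixes plus a few specific trusted hosts.
TRUSTED_SUFFIXES = (".edu", ".gov")
TRUSTED_DOMAINS = {"arxiv.org"}

def is_trusted(url: str) -> bool:
    """True if the URL's host is on the allowlist."""
    host = (urlparse(url).hostname or "").lower()
    return host in TRUSTED_DOMAINS or host.endswith(TRUSTED_SUFFIXES)

def credible_links(candidates: List[str]) -> List[str]:
    """Keep only links from trusted hosts, preserving their original order."""
    return [u for u in candidates if is_trusted(u)]

results = [
    "https://www.stanford.edu/research/",
    "https://totally-real-news.example.com/miracle-cure",
    "https://www.loc.gov/collections/",
]
print(credible_links(results))
```

Matching on the parsed hostname rather than on the raw string matters: a URL like `https://evil.edu.example.com` ends in `.com`, so it is rejected even though it contains ".edu".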

2. Helping Several Students at the Same Time

Another situation in which conversational AI can be extremely useful is helping teachers cater to students’ needs. Educators often teach large classes, with 30 or more students in some countries, and some students don’t get the attention they need.

Conversational AI can help improve this situation. For instance, chatbots can answer the most common questions related to the topic of the lesson, supply students with learning materials, and guide them through tasks. As a result, every student can receive help.

Conversational AI can also be extremely helpful in special education. Linda Ferguson, the CEO of subjecto, a resource for flashcards and other educational materials, says that chatbots can help teach children with special needs, since these children also need a personal approach. Chatbots and virtual assistants can help special needs students manage tasks and get access to learning resources without the teacher’s help. Using conversational AI will also help them become more confident in using technology, which can benefit them in the long run.

3. Boosting Academic Performance

Many students lack guidance while studying, which leads to poorer academic performance. Research has even found that teachers might require guidance counselor training since the connection between teacher support and academic success is so strong.

But even if students get enough help from teachers, they can still fall behind. Sometimes students have a lot on their plate, and it gets hard for them to manage all their tasks and responsibilities, which often pile up before exam weeks.

What is the role of conversational AI here?

In this case, conversational AI can act as a reminder, helping students stay on track and keep up with their schedules. Students can also ask it questions about assignments at any time of day.
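A minimal sketch of the reminder logic, assuming each assignment has a due date and the assistant nudges students a fixed number of days beforehand. The assignment names, dates, and the 3-day and 1-day offsets are hypothetical.

```python
from datetime import date
from typing import Dict, List, Tuple

def reminders_due(assignments: Dict[str, date], today: date,
                  offsets_days: Tuple[int, ...] = (3, 1)) -> List[str]:
    """Return the reminder messages to send today: one for each assignment
    whose due date is exactly N days away, for some N in offsets_days.
    Messages are ordered by due date."""
    messages = []
    for name, due in sorted(assignments.items(), key=lambda kv: kv[1]):
        days_left = (due - today).days
        if days_left in offsets_days:
            messages.append(
                f"Reminder: '{name}' is due in {days_left} day(s) ({due.isoformat()})."
            )
    return messages

schedule = {
    "History essay": date(2021, 5, 14),
    "Math problem set": date(2021, 5, 12),
    "Lab report": date(2021, 5, 20),
}
for msg in reminders_due(schedule, today=date(2021, 5, 11)):
    print(msg)
```

A real assistant would run this check once a day per student and deliver the messages through whatever channel the chatbot uses.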

Chatbots and virtual assistants can provide personalized help, which also positively impacts academic performance. Pounce, a chatbot created by Georgia State University, helped the school reduce dropout rates because it catered to each student’s personalized requests. As a result, GSU managed to narrow the graduation gap and boost its students’ academic performance.

4. Collecting Valuable Student Feedback

Student engagement is an important part of academic performance. And proper feedback channels play a significant role in keeping students motivated.

Conversational AI solutions can create such feedback channels, connecting teachers with their students and keeping student feedback in one central store. Besides, chatbots can collect this feedback anonymously, reducing bias in the feedback.

Educators can also use conversational AI to evaluate the progress of the courses they teach. For instance, Hubert, an edu-bot, uses natural language processing to collect student feedback on courses and suggests to teachers how to improve them.
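A minimal sketch of collecting feedback anonymously into one central store, assuming the chatbot receives a student ID with each message and staff should only ever see a salted hash of it (enough to keep one entry per student, not enough to identify anyone). The record layout and function names are hypothetical. Note that hashing IDs pseudonymizes rather than fully anonymizes: free-text comments can still reveal who wrote them.

```python
import hashlib
import secrets
from collections import defaultdict
from typing import Dict, List

# Random salt kept away from teaching staff, so hashed IDs cannot be
# reversed simply by hashing the class roster.
SALT = secrets.token_bytes(16)

# Central store: course -> list of feedback records.
feedback_store: Dict[str, List[dict]] = defaultdict(list)

def submit_feedback(course: str, student_id: str, rating: int, comment: str) -> None:
    pseudonym = hashlib.sha256(SALT + student_id.encode()).hexdigest()[:12]
    # Keep only each student's latest submission so one person can't flood the results.
    records = [r for r in feedback_store[course] if r["who"] != pseudonym]
    records.append({"who": pseudonym, "rating": rating, "comment": comment})
    feedback_store[course] = records

def course_summary(course: str) -> float:
    """Average rating for a course (0.0 if no feedback yet)."""
    ratings = [r["rating"] for r in feedback_store[course]]
    return sum(ratings) / len(ratings) if ratings else 0.0

submit_feedback("BIO101", "s-001", 4, "Labs are great")
submit_feedback("BIO101", "s-002", 2, "Lectures move too fast")
submit_feedback("BIO101", "s-001", 5, "Labs are great, pacing improved")
print(course_summary("BIO101"))
```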

5. Sharing Administrative Information

In education, it’s also important to provide students with administrative support and keep them updated on the latest school news. Here, too, conversational AI can help: it can replace traditional news boards in school corridors, send timely notifications to students, and answer their questions.

The best part about using chatbots and virtual assistants for administrative support is that you can conveniently categorize information for better access. For instance, a smart virtual assistant can have categories with college admission information, campus events, financial aid programs, etc.
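A minimal sketch of how such categories might sit behind a virtual assistant, assuming simple keyword matching routes each question to a category. The categories follow the examples above; the keywords, answers, and fallback text are placeholders.

```python
from typing import Dict, Set

# Placeholder keywords for each category.
KEYWORDS: Dict[str, Set[str]] = {
    "admissions": {"admission", "admissions", "apply", "application", "deadline"},
    "campus_events": {"event", "events", "club", "fair", "concert"},
    "financial_aid": {"aid", "scholarship", "scholarships", "grant", "loan", "tuition"},
}

# Placeholder answers; a real assistant would pull these from school systems.
ANSWERS: Dict[str, str] = {
    "admissions": "Admissions: application dates and requirements are on the admissions page.",
    "campus_events": "Campus events: here is this week's events calendar.",
    "financial_aid": "Financial aid: here are the current scholarships, grants, and loans.",
}

FALLBACK = "I'm not sure. Let me connect you with the school office."

def route(question: str) -> str:
    """Return the category whose keywords overlap most with the question."""
    cleaned = "".join(c if c.isalnum() or c.isspace() else " " for c in question.lower())
    words = set(cleaned.split())
    best, best_hits = "unknown", 0
    for category, keywords in KEYWORDS.items():
        hits = len(words & keywords)
        if hits > best_hits:
            best, best_hits = category, hits
    return best

def answer(question: str) -> str:
    return ANSWERS.get(route(question), FALLBACK)

print(answer("When is the application deadline?"))
print(answer("Are there any scholarships for freshmen?"))
print(answer("Where is the library?"))
```

Keyword matching is the simplest possible router; a production assistant would more likely use an intent classifier, but the category-to-answer structure stays the same.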

As a result, students will have one more source of important administrative information and won’t have to browse the school’s website for it. They also won’t have to leave home to talk to a counselor, which could be risky during the pandemic.

Wrapping Up

As you can see, there are quite a few applications for AI in education already. It can help students find credible information, perform better academically, and get access to valuable administrative information.

Teachers can also greatly benefit from conversational AI. Chatbots can be of great help during lessons, catering to each student’s personal needs. Educators can also use chatbots and virtual assistants to collect student feedback.

That being said, conversational AI still has a long way to go until it can reach its full potential. We have yet to see greater personalization, which requires further research and development. But the current applications of AI already show their amazing impact on the future of learning.

Credits: Alison Lee is a professional content writer and editor. She’s also a high school teacher passionate about Edtech and how it can help students get a better education.


5 Ways Conversational AI is Shaping the Future of Learning was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Why Corporate AI Projects Fail? Part 2/4

Tldr: Poor processes and culture can derail the success of many an exceptional AI team.

In part 1, I introduced a four-pronged framework for analysing the principal factors underlying the failure of corporate AI projects:

- Intuition (Why)

- Process/Culture (How)

- People (Who)

- Systems (What)

and addressed in detail how a lack of organizational intuition for AI can undermine any efforts to embrace and adopt AI successfully.

In the second part of the blog series, I will focus on core aspects of organizational processes and culture that companies should inculcate to ensure that their AI teams are successful and deliver significant business impact.

Culture

Organizational culture is the foundation on which a company is built and shapes its future outcomes related to commercial impact and success, hiring and retention, as well as the spirit of innovation and creativity. Whilst organizational behaviour and culture have been studied for decades, they need to be re-examined in the context of new-age tech startups and enterprises. The success of such cutting-edge AI-first companies is highly correlated with the scale of innovation through new products and technology, which necessitates an open and progressive work culture.

Typically, new startups on the block, especially those building a core AI product or service, are quick to adopt and foster a culture that promotes creativity, rapid experimentation and calculated risk-taking. Being lean and not burdened by any legacy, most tech startups are quick to shape the company culture in the image of the founders’ vision and philosophy (for better or worse). However, far too many tech companies have become infamous for lacking an inclusive and meritocratic culture.

There are innumerable examples, from prominent tech startups like Theranos and Uber to big tech companies like Google and Facebook, where an open and progressive culture has at times taken a back seat. However, with the increasing focus on sustainability, diversity and inclusion, and ESG (including better corporate governance), it is imperative for tech companies to improve their organizational culture rather than erode employee, consumer or shareholder trust, or else face real risks to the business from financial as well as regulatory authorities, as BlackRock and Deliveroo recently experienced.

Here is a ready reckoner of some of the ways AI companies tend to lose sight of culture:

- Leadership is not clear that the goal of AI is to augment human productivity, reduce costs and improve efficiencies, and not to replace employees. Without human partners in the loop, AI-based products and services cannot succeed

- Hiring non-technical leaders with little intuition of AI, and/or technical leaders with poor product or business mindset to lead AI teams or orgs


- Poor organizational alignment about the long-term vision and purpose of AI across key stakeholders in multiple business verticals

- Lack of investment in organization-wide training in AI especially for business teams resulting in poor intuition about the commercial potential of AI

- No budget allocated for investing in AI, or investing too late, after early movers and competitors have already embraced and adopted AI across their orgs

- Poor management of AI teams with no roadmap, vision, or hiring strategy

- Talking up the promise of AI with no well-defined use cases and projects in sight

- Treating AI like software and expecting things to work the same way as for building and delivering software products

- No structured processes and mechanisms for embracing AI: from defining business-relevant AI use cases to deploying them in the real world

Process

There are several processes that are integral to ensuring a successful AI outcome across the entire lifecycle from conception to production. However, from first principles, the primary process that needs to be streamlined and managed well is identifying the right use cases for AI that have the potential to create significant commercial impact. In this blog, I will focus only on this particular aspect and expound on the other processes in separate blogs.

What can go wrong in identifying the right set of AI use cases?

- Focus on the technical aspects of AI instead of the business problem

- Defining a use case without checking availability of data for the problem

- Poor intuition for the feasibility of the AI use case without diving deep into the data and consulting business or domain experts

- No clearly defined acceptance criteria, success metrics or KPIs for the use case

- No shared alignment across cross-functional teams for the proposed use cases

- Poor project management, and misallocation of time and bandwidth across AI and cross-functional teams

- Poor prioritization and ranking of proposed use cases

- No clearly defined project milestones and timelines to validate the use case once the project has started

- Poor brainstorming of potential AI solutions for the use case

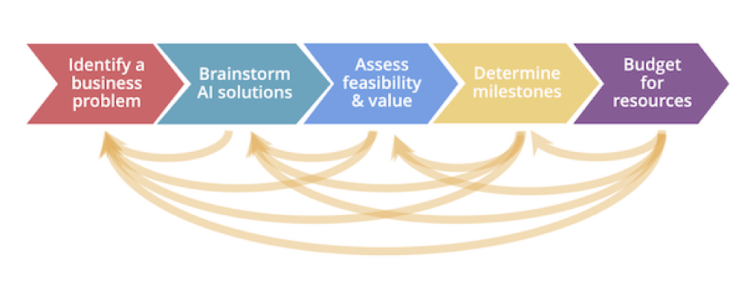

So, having listed a variety of issues that can go wrong in identifying an AI use case, how should one ideally go about scoping AI projects systematically? As per Figure 2, the strategy to scope an AI use case involves 5 steps: from identifying a business problem to brainstorming AI solutions to assessing feasibility and value to determining milestones and finally budgeting for resources.
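The article does not prescribe a scoring method for assessing value and feasibility, but one common way to rank candidate use cases during scoping is a weighted score. A minimal sketch, assuming the cross-functional team agrees on 1-5 scores for business value and feasibility and drops any use case without available data; the use cases, scores, and the 0.6/0.4 weights are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class UseCase:
    name: str
    business_value: int   # 1-5, estimated with business stakeholders
    feasibility: int      # 1-5, from data checks, prototypes, expert input
    data_available: bool  # no data, no use case

def rank(use_cases: List[UseCase], w_value: float = 0.6,
         w_feas: float = 0.4) -> List[Tuple[str, float]]:
    """Drop use cases without data, then sort by weighted score, highest first."""
    scored = [(uc.name, w_value * uc.business_value + w_feas * uc.feasibility)
              for uc in use_cases if uc.data_available]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

candidates = [
    UseCase("Churn prediction", business_value=5, feasibility=4, data_available=True),
    UseCase("Support ticket routing", business_value=3, feasibility=5, data_available=True),
    UseCase("Demand forecasting for new city", business_value=4, feasibility=2,
            data_available=False),
]
for name, score in rank(candidates):
    print(f"{name}: {score:.1f}")
```

The point of writing the scores down is less the arithmetic than forcing the shared alignment and explicit prioritization that the list of failure modes above calls out.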

The scoping process starts with a careful dissection of the business problems (not AI problems) that need to be solved to create commercial value. As discussed above, if this step is not done right, the rest of the AI journey in an organization is bound to fail.

Secondly, it is important to brainstorm potential AI solutions across AI, engineering and product teams to shortlist a set of approaches and techniques that are practically feasible instead of going with the latest or most sophisticated AI model or algorithm.

Thirdly, AI teams should assess the feasibility of shortlisted methods by creating a quick prototype and validating the approach through a literature survey or discussions with domain experts within the company, or by partnering with external collaborators as needed. If a particular method does not appear to be feasible, teams should work through the alternative approaches until they find a feasible one or have ruled them all out.

Once the initial efforts have validated the use case, its feasibility and potential approaches, it is critical to define key business metrics, KPIs, and acceptance or success criteria. These should not be the typical AI model metrics like precision, accuracy, or F1 score; instead, the KPIs should be directly correlated with the impact of the AI models on business goals, e.g. retention, NPS, and customer satisfaction, amongst others.
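To illustrate the difference between a model metric and a business KPI, a minimal sketch that computes 30-day retention for a control group and for a group served by the AI model, assuming a simple A/B split. The user counts are hypothetical, and a real analysis would also test whether the difference is statistically significant.

```python
from typing import Tuple

def retention_rate(retained: int, total: int) -> float:
    if total <= 0:
        raise ValueError("total must be positive")
    return retained / total

def retention_uplift(control: Tuple[int, int], treatment: Tuple[int, int]) -> float:
    """Absolute uplift in retention, in percentage points, of treatment over control.
    Each argument is (users_retained_after_30_days, users_in_group)."""
    return 100 * (retention_rate(*treatment) - retention_rate(*control))

# Hypothetical A/B test: a new model's recommendations vs. the old rule-based system.
control = (4_100, 10_000)
treatment = (4_350, 10_000)
print(f"Retention uplift: {retention_uplift(control, treatment):.1f} percentage points")
```

A model can improve its F1 score offline and still move this number by zero, which is why the KPI, not the model metric, should define success for the use case.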

The final step involves program management of the entire project: allocating the time and bandwidth of individual contributors in the AI and partner teams, and budgeting for collecting or labeling data, hiring data scientists, or buying the software or infrastructure needed to set up and streamline the entire AI lifecycle.

Tldr part 2:

Before you head out to build AI, first ask: which business problems are big enough and suitable for an AI-based solution? What business metrics and objectives ought to be targeted? Scope out the problem systematically to ensure the best chance of success.

Build on the initial successes of AI and foster a meritocratic and open culture of innovation and cross-functional collaboration to build AI that solves a variety of business use cases.

Sundeep is a leader in AI and Neuroscience with professional experience in 4 countries (USA, UK, France, India) across Big Tech (Conversational AI at Amazon Alexa AI), unicorn Startup (Applied AI at Swiggy), and R&D (Neuroscience at Oxford University and University College London).

He has built and deployed AI for consumer AI products; published 40+ papers on Neuroscience and AI; consults deep tech startups on AI/ML, product roadmap, team strategy; and conducts due diligence of early-stage tech startups for angel investing platforms (https://sundeepteki.org).


Why Corporate AI Projects Fail? Part 2/4 was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.