AI’s transformative potential has driven its widespread adoption among organizations across the globe, and AI remains a top priority for business leaders. PwC’s research estimates that AI could contribute $15.7 trillion to the global economy by 2030 as a result of productivity gains and increased consumer demand driven by AI-enhanced products and services.

While artificial intelligence (AI) is quickly gaining ground as a powerful tool to reduce costs, automate workflows and improve revenues, deploying AI requires meticulous management to prevent unintended consequences. Beyond complying with the law, CEOs bear a great responsibility to ensure that AI systems are used responsibly and ethically. With the advent of powerful AI, there has been a great deal of concern and skepticism about how AI systems can be aligned with human ethics and integrated with software when moral codes vary from culture to culture.

Creating responsible AI is imperative for organizations, and instilling responsibility in a technology requires the following criteria to be fulfilled:

- It should comply with all applicable regulations and operate on ethical grounds.

- It should be reinforced by end-to-end governance.

- It should be supported by performance pillars that address subjects like bias and fairness, interpretability and explainability, and robustness and security.

Key Dimensions For Responsible AI

1. Guidance On Definitions And Metrics To Evaluate AI For Bias And Fairness

Value statements often lack proper definitions of concepts like bias and fairness in the context of AI. While it’s possible to design AI to be fair and in line with an organization’s corporate code of ethics, leaders should guide their organizations towards establishing metrics that align their AI initiatives with the company’s values and goals. CEOs should comprehensively lay down the company’s goals and values in the context of AI and encourage collaboration across the organization in defining AI fairness. Some examples of metrics include:

- Disparate Impact: The ratio of the probability of a favorable outcome for the unprivileged group to that for the privileged group.

- Equal Opportunity Difference: The difference in true positive rates between the unprivileged and privileged groups.

- Statistical Parity Difference: The difference between the rate of favorable outcomes received by the unprivileged group and that received by the privileged group.

- Average Odds Difference: The average of the difference in false positive rates and the difference in true positive rates between the unprivileged and privileged groups.

- Theil Index: The inequality of benefit allocation for individuals
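The metrics listed above can be made concrete with a minimal Python sketch. It assumes binary labels and predictions (1 is the favorable outcome) and a single protected attribute coded 0 for the unprivileged group and 1 for the privileged group. The function names are illustrative and not taken from any library, and the Theil index uses the per-individual benefit b = prediction - label + 1, which is one common convention and an assumption here.

```python
from math import log


def rate(values):
    """Fraction of 1s in a list of 0/1 values (0.0 for an empty list)."""
    return sum(values) / len(values) if values else 0.0


def group_rates(y_true, y_pred, group, g):
    """Return (selection rate, true positive rate, false positive rate) for group g."""
    preds = [p for p, a in zip(y_pred, group) if a == g]
    on_pos = [p for t, p, a in zip(y_true, y_pred, group) if a == g and t == 1]
    on_neg = [p for t, p, a in zip(y_true, y_pred, group) if a == g and t == 0]
    return rate(preds), rate(on_pos), rate(on_neg)


def fairness_metrics(y_true, y_pred, group, unprivileged=0, privileged=1):
    """Group fairness metrics, always computed as unprivileged vs. privileged."""
    sel_u, tpr_u, fpr_u = group_rates(y_true, y_pred, group, unprivileged)
    sel_p, tpr_p, fpr_p = group_rates(y_true, y_pred, group, privileged)
    return {
        # Ratio of favorable-outcome rates; 1.0 means parity.
        "disparate_impact": sel_u / sel_p if sel_p else float("nan"),
        # Difference of favorable-outcome rates; 0.0 means parity.
        "statistical_parity_difference": sel_u - sel_p,
        # Difference of true positive rates.
        "equal_opportunity_difference": tpr_u - tpr_p,
        # Mean of the FPR difference and the TPR difference.
        "average_odds_difference": 0.5 * ((fpr_u - fpr_p) + (tpr_u - tpr_p)),
    }


def theil_index(y_true, y_pred):
    """Theil index of per-individual benefits b_i = y_pred_i - y_true_i + 1 (assumed)."""
    benefits = [p - t + 1 for t, p in zip(y_true, y_pred)]
    mean = sum(benefits) / len(benefits)
    # Terms with b_i == 0 contribute 0 in the limit, so they are skipped.
    return sum((b / mean) * log(b / mean) for b in benefits if b > 0) / len(benefits)


# Toy data: four people in each group.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 1, 0, 1]
group = [0, 0, 0, 0, 1, 1, 1, 1]
print(fairness_metrics(y_true, y_pred, group))
print(theil_index(y_true, y_pred))
```

On this toy data the unprivileged group receives a favorable prediction half of the time and the privileged group three quarters of the time, so the disparate impact is about 0.67 and the statistical parity difference is -0.25. Which thresholds count as acceptable is a policy decision for the organization, not something the code can settle.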

2. Governance

Building Responsible AI practices in the organization requires leadership to establish proper communication channels, cultivate a culture of responsibility, and build internal governance processes that comply with applicable regulations and the industry’s best practices.

End-to-end enterprise governance is critical for Responsible AI. Organizations should be able to answer the following questions about their AI initiatives:

- Who takes accountability and responsibility?

- How can we align AI with our business strategy?

- Which processes can be optimized and improved?

- What are the essential controls to monitor performance and identify problems?

3. Hierarchy Of Company Values

AI development isn’t devoid of trade-offs. While developing AI models, there is often a perceived trade-off between the accuracy of an algorithm and the transparency of its decision making, i.e., how explainable its predictions are for stakeholders. Pursuing high accuracy can lead to “black box” algorithms, which make it difficult to rationalize the decision-making process of the AI system.

Likewise, trade-offs exist while training AI. AI models tend to get more accurate with more data, but gathering a large volume of data can itself raise privacy concerns. Formulating thorough guidelines and a hierarchy of values is vital to shape responsible AI practices during model development.

4. Security And Resilience

Resilience, security and safety are essential elements of AI for it to be effective and reliable.

- Resilience: Next-generation AI systems are expected to be increasingly “self-aware,” with the capability to evaluate unethical decisions and correct faults.

- Security: AI systems and development processes should be protected against potentially fatal incidents such as AI data theft and security breaches that lead to systems being compromised or “hijacked”.

- Safety: Ensure AI systems are safe to use for the people who are either directly impacted by them or potentially affected by AI-enabled decisions. Safe AI is critical in areas such as healthcare, connected workforce and manufacturing applications.

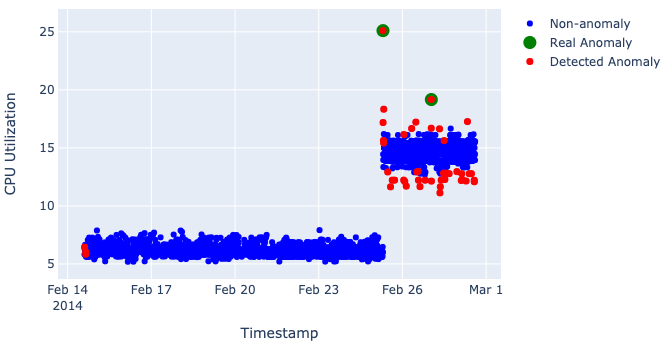

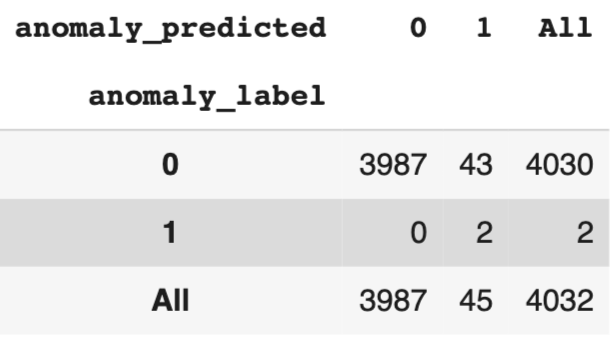

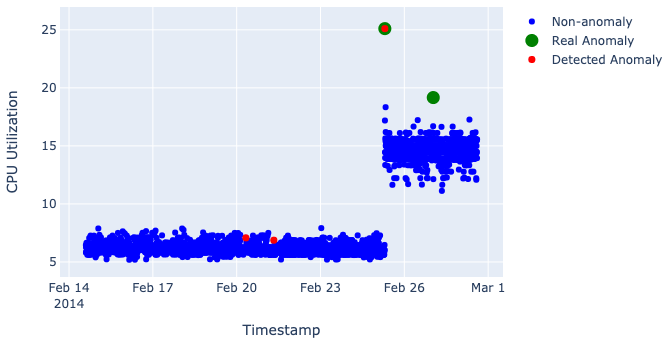

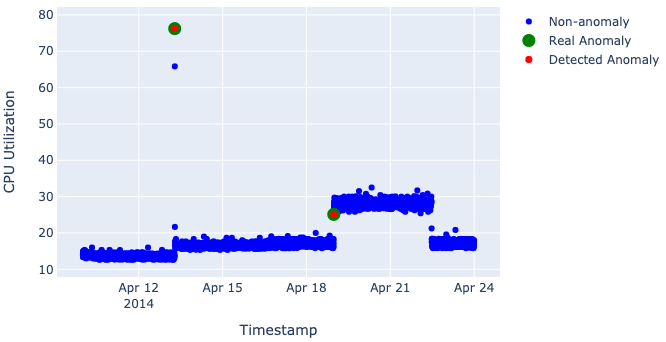

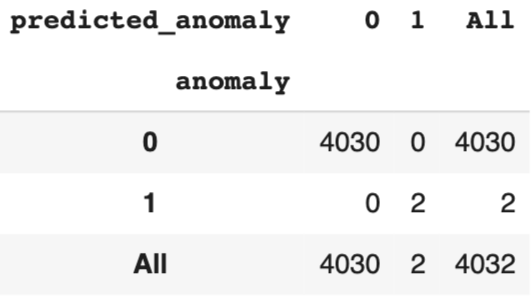

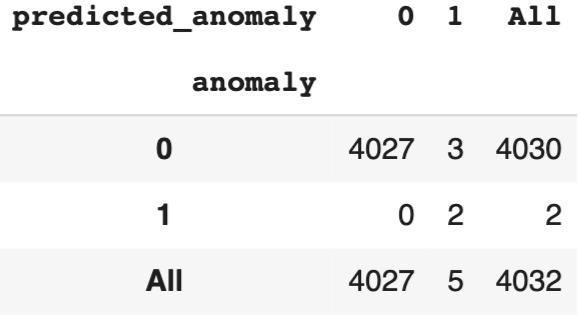

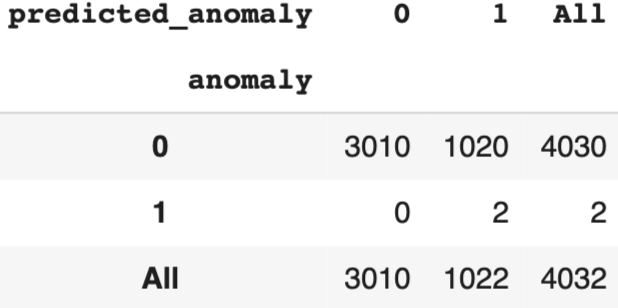

5. Monitoring AI

AI needs to be closely monitored with human supervision, and its performance should be audited against key metrics like accountability, bias, and cybersecurity. A diligent evaluation is needed because biases can be subtle and hard to discern, and a feedback loop needs to be devised to govern AI effectively and correct biases.

Continuous monitoring of AI helps ensure that the models keep performing accurately in the real world and take user feedback into account. While issues are bound to occur, it is necessary to adopt a strategy that combines short-term simple fixes with longer-term learned solutions to address them. Prior to deploying an updated AI model, it is essential to analyze how it differs from the model currently in use and understand how the update will affect the overall system quality and user experience.
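One way to analyze the differences before an update goes live is to run the deployed model and the candidate model on the same labelled holdout set and compare the results. The sketch below is a minimal illustration under that assumption; the function names, the report fields and the 0.01 accuracy tolerance are hypothetical choices, not a prescribed process.

```python
def accuracy(y_true, y_pred):
    """Share of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def compare_before_rollout(y_true, deployed_pred, candidate_pred,
                           max_accuracy_drop=0.01):
    """Compare a candidate model with the deployed one on the same holdout set.

    max_accuracy_drop is an assumed tolerance; pick one that fits your use case.
    """
    acc_old = accuracy(y_true, deployed_pred)
    acc_new = accuracy(y_true, candidate_pred)
    n = len(y_true)
    # Share of cases where users would see a different decision after the update.
    changed = sum(a != b for a, b in zip(deployed_pred, candidate_pred)) / n
    # Cases the deployed model got right and the candidate gets wrong.
    regressions = sum(
        1 for t, a, b in zip(y_true, deployed_pred, candidate_pred)
        if a == t and b != t
    )
    return {
        "deployed_accuracy": acc_old,
        "candidate_accuracy": acc_new,
        "changed_share": changed,
        "regressions": regressions,
        "approve": acc_new >= acc_old - max_accuracy_drop,
    }


y_true = [1, 0, 1, 1, 0, 1, 0, 0]
deployed = [1, 0, 0, 1, 1, 1, 0, 1]
candidate = [1, 0, 1, 1, 0, 1, 1, 0]
print(compare_before_rollout(y_true, deployed, candidate))
```

In this toy run the candidate is more accurate overall, yet it changes half of the decisions and introduces one new error. A human reviewer would still want to look at those changed cases, which is why the report exposes them rather than returning only the approval flag.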

6. Explainability

Explainable AI (XAI) refers to systems that can explain the rationale for their decisions, characterize the strengths and weaknesses of their decision-making process, and convey an understanding of how they will behave in the future. While it’s desirable to have complex models that perform exceedingly well, it would be erroneous to assume that the benefits derived from a model automatically outweigh its lack of explainability.

Explainability is a major factor in ensuring compliance with regulations, managing public expectations, establishing trust and accelerating adoption. It also offers domain experts, frontline workers, and data scientists a means to detect and reduce potential biases well before models are deployed. To ensure that model outputs can be explained, data-science teams should clearly establish the types of models that are used.
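As a small illustration of how a team might inspect an otherwise opaque model, the sketch below uses permutation importance: shuffle one input feature at a time and measure how much accuracy drops. This technique is our choice for the example and is not named in the article; the toy model, the data and the function names are all hypothetical.

```python
import random


def model_accuracy(model, rows, labels):
    """Share of rows for which the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)


def permutation_importance(model, rows, labels, n_repeats=20, seed=0):
    """Average accuracy drop when each feature column is shuffled in turn."""
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    baseline = model_accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            shuffled = []
            for r, v in zip(rows, column):
                new_row = list(r)
                new_row[j] = v  # break the link between feature j and the label
                shuffled.append(new_row)
            drops.append(baseline - model_accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_model(row):
    """Stand-in for a black-box model: only the first feature matters."""
    return int(row[0] > 0.5)


rows = [[0.9, 3], [0.1, 3], [0.8, 1], [0.2, 1],
        [0.7, 2], [0.3, 2], [0.6, 5], [0.4, 5]]
labels = [toy_model(r) for r in rows]
print(permutation_importance(toy_model, rows, labels))
```

Because the toy model ignores the second feature, shuffling it never changes a prediction and its importance is exactly zero, while shuffling the first feature lowers accuracy. A feature that should be irrelevant (for example a proxy for a protected attribute) showing high importance is the kind of signal worth escalating before deployment.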

7. Privacy

Machine learning models typically need large sets of data to train and work accurately. A caveat is that much of this data can be sensitive, so it is essential to address the potential privacy implications of using it. This requires enterprises to adhere to legal and regulatory requirements, be sensitive to social norms and individual expectations, and maintain adequate transparency and control over their data.

Conclusion

A Responsible AI framework enables organizations to build trust with both employees and customers. As a result, employees will place their faith in the insights delivered by AI, willingly use it in their operations and devise new ways to leverage AI to create greater value.

Building trust with customers opens the door to using consumer data to continually improve AI, and consumers will be more willing to use your AI-infused products because they trust the product and the organization. This also improves brand reputation, allows organizations to innovate and compete and, most importantly, enables society to benefit from the power of AI rather than be paranoid about the technology. via Acuvate.com

Responsible AI Practices Your Organizations Should Follow For Better Trust was originally published in Becoming Human: Artificial Intelligence Magazine on Medium.