They didn’t believe AI could make profits in crypto trading, until they saw the results of the first 8 months

We set ourselves a hard goal. An often-quoted figure says that 95% of crypto traders lose money. Imagine you are an elementary school math teacher with a class of 30 kids. One Monday morning, you ask the class to multiply 32 by 78. Only one kid gives you the right answer. Whatever the year, whatever the kids' ages, it is always only one. That is pretty much the situation of crypto traders. But the kids could use a calculator at any time, couldn't they? What about the traders? Can they have a calculator too?

We were looking for the answer!

The b-cube.ai startup was founded in 2018. At the time, there were plenty of articles about the spread of AI. The internet was crammed with videos in which Elon Musk or Stephen Hawking warned of the dangers of uncontrolled artificial intelligence. But there was also content on how to use AI in cancer diagnosis. If something is smart enough to be a worthy opponent of cancer, why not use it against the Bitcoin market as well?

This idea kept Guruprasad Venkatesha, the CEO of b-cube.ai, awake at night. Sitting in front of a monitor on a white-painted desk at Morgan Stanley, he had seen more than enough of the S&P 500’s red and green candles, and more than enough account statements and balance sheets. He also had years of experience from his former investment analysis firm.

Equities have been traded for more than 400 years. Over all this time, traders have accumulated a wealth of knowledge to pass on to each new generation of investors.

But what about cryptocurrencies?

Cryptocurrencies did not even exist until an unknown person using the name Satoshi Nakamoto published the Bitcoin whitepaper in 2008. Nor did early crypto traders use artificial intelligence. Such technology requires intensive research and development, not the kind of knowledge you can pick up by watching a YouTube video for 20 minutes a day over a mug of green tea.

How, then, can this goal be achieved? How can AI be used to make a consistent profit in cryptocurrency markets?

Many try to apply machine learning, a subfield of AI, to finance. AI is excellent at pattern recognition: you can train a model to tell an apple from a pear. So the idea goes that if AI can spot patterns in price data (the chart), it can also tell which way the price is likely to move next. The AI sees the pattern, you buy, and you make money.

This couldn’t be further from the truth!

Financial data have distinctive statistical properties that demand a very specific approach. The machine learning models that work on such data are orders of magnitude more complex than theory-driven models, and much harder to design, test and deploy. They do not come from human understanding: the patterns they exploit could never have been found by researchers relying on intuition alone, because the amount of data and noise is simply too great for a human mind to grasp. This ties directly to the concept of “microscopic alpha” introduced by López de Prado: the easy correlations that human intuition could find have already been exhausted, and increasingly sophisticated techniques are needed to keep extracting alpha.
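To make the noise point concrete, here is a minimal sketch on synthetic data, not on real market prices and not b-cube's method. It assumes log prices follow a pure random walk (real crypto prices are not exactly that) and measures three things: the lag-1 autocorrelation of log prices, the same statistic for returns, and the hit rate of the naive chart pattern "the next move repeats the last one". The helper names are illustrative.

```python
import math
import random


def simulate_log_prices(n_steps: int, seed: int = 7) -> list[float]:
    """Synthetic log-price path: a random walk with i.i.d. Gaussian steps (no real market data)."""
    rng = random.Random(seed)
    path = [math.log(100.0)]
    for _ in range(n_steps):
        path.append(path[-1] + rng.gauss(0.0, 0.01))  # roughly 1% volatility per step
    return path


def lag1_autocorr(xs: list[float]) -> float:
    """Sample autocorrelation at lag 1."""
    mean = sum(xs) / len(xs)
    num = sum((xs[i] - mean) * (xs[i - 1] - mean) for i in range(1, len(xs)))
    den = sum((x - mean) ** 2 for x in xs)
    return num / den


def momentum_hit_rate(returns: list[float]) -> float:
    """How often the naive pattern 'the next move repeats the last one' guessed the direction."""
    hits = sum((a > 0) == (b > 0) for a, b in zip(returns, returns[1:]))
    return hits / (len(returns) - 1)


log_prices = simulate_log_prices(5_000)
returns = [b - a for a, b in zip(log_prices, log_prices[1:])]
print(f"lag-1 autocorrelation, log prices: {lag1_autocorr(log_prices):.3f}")
print(f"lag-1 autocorrelation, returns:    {lag1_autocorr(returns):.3f}")
print(f"'last move repeats' hit rate:      {momentum_hit_rate(returns):.3f}")
```

On a random walk, the price series looks extremely persistent (autocorrelation close to 1) even though every step is pure noise, the returns show almost no autocorrelation, and the naive pattern is right about half the time, no better than a coin flip.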

The turning point

In March 2019, the b-cube project entered the CentraleSupélec incubation program. Through this program, we formed a partnership with Paris-Saclay University, ranked the number 1 university in the world for mathematics. This gave us access to the university’s quantitative finance lab and its professors, and allowed us to take on interns. That is how Francois joined the team: a brilliant AI engineer with a fascinating story of his own. A true officer of the French Army, Francois showed an extraordinary work ethic.

Francois, our CTO Erwan and the rest of the tech team worked intensively for months. It was not uncommon for them to put in 16–18 hours a day under the lamplight, until the birds began to sing outside in the morning.

Our mentor, Dr. Damien Challet, a professor at the university, personally guided the research.

How we use AI for crypto trading

1) Sentiment analysis of social media and news. We ingest, store, filter and process this data in real time with NLP (Natural Language Processing) to gauge the market's sentiment toward a given cryptocurrency. The market is highly driven by sentiment, whether positive or negative, greed or fear.

2) Machine learning that ingests many uncorrelated features and detects patterns across multiple dimensions. These features can relate to price and volume (endogenous data), to sentiment (once processed by our sentiment analysis engine), or to blockchain data (transaction volume, mining speed, the size and movements of “whale wallets”, etc.).
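As a rough illustration of how these two pieces can fit together, the sketch below scores posts with a tiny hand-made word list (a hypothetical stand-in for a real NLP engine, which would typically use a trained model), then stacks a sentiment series with price, volume and on-chain inputs into one feature matrix and checks how correlated the columns are. The word lists, function names and input series are all assumptions made for illustration, not b-cube's engine.

```python
import re

import numpy as np

# Hypothetical toy lexicons; a production NLP engine would use a trained model instead.
POSITIVE = {"bullish", "moon", "buy", "pump", "breakout", "gains"}
NEGATIVE = {"bearish", "dump", "sell", "crash", "scam", "fear"}


def post_score(text: str) -> int:
    """Lexicon score of one post: +1 per positive word, -1 per negative word."""
    words = re.findall(r"[a-z]+", text.lower())
    return sum((w in POSITIVE) - (w in NEGATIVE) for w in words)


def coin_sentiment(posts: list[str], coin: str) -> float:
    """Share of bullish minus bearish posts mentioning `coin`, in [-1, 1]; 0.0 if none do."""
    relevant = [p for p in posts if coin.lower() in p.lower()]  # naive substring filter
    if not relevant:
        return 0.0
    signs = [(s > 0) - (s < 0) for s in map(post_score, relevant)]
    return sum(signs) / len(signs)


def build_features(close, volume, sentiment, tx_count) -> np.ndarray:
    """Stack per-period inputs into an (n - 1, 4) matrix: log return, log volume change,
    sentiment score and log change in on-chain transaction count."""
    close, volume, tx_count = (np.asarray(a, dtype=float) for a in (close, volume, tx_count))
    return np.column_stack([
        np.diff(np.log(close)),                  # endogenous: price
        np.diff(np.log(volume)),                 # endogenous: volume
        np.asarray(sentiment, dtype=float)[1:],  # sentiment, aligned with each return's period
        np.diff(np.log(tx_count)),               # blockchain: transaction activity
    ])


def max_abs_cross_corr(features: np.ndarray) -> float:
    """Largest absolute pairwise correlation between feature columns (near 0 = little overlap)."""
    corr = np.corrcoef(features, rowvar=False)
    off_diagonal = corr[~np.eye(corr.shape[0], dtype=bool)]
    return float(np.max(np.abs(off_diagonal)))


posts = ["BTC breakout, very bullish", "Selling my BTC before the crash",
         "ETH gains today", "BTC to the moon"]
print(f"BTC sentiment: {coin_sentiment(posts, 'BTC'):+.2f}")

rng = np.random.default_rng(0)
n = 500
close = 100 * np.exp(np.cumsum(rng.normal(0.0, 0.01, n)))  # synthetic prices
features = build_features(close, rng.uniform(1e3, 2e3, n), rng.uniform(-1, 1, n),
                          rng.integers(1_000, 2_000, n))
print(features.shape, f"max |corr| = {max_abs_cross_corr(features):.3f}")
```

Filtering by a plain substring match on the ticker is deliberately simplistic. The correlation check reflects the idea that features are most useful when each carries different, rather than overlapping, information.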

Rather than crafting individual strategies one at a time, which is really an artisanal process, we produce them industrially, in batches, within a pipeline that allows proper testing, deployment and selection (what we call meta-strategies).
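A minimal sketch of the "factory" idea, assuming a deliberately simple strategy family (moving-average crossovers, chosen here only for illustration and not b-cube's actual strategies): generate many parameter variants in one batch, backtest each on an in-sample segment, keep the best, and then judge it on data it has never seen. All names, parameters and the synthetic price path are hypothetical.

```python
import itertools
import math
import random


def sma(prices: list[float], window: int, i: int) -> float:
    """Simple moving average of the `window` prices ending at index i."""
    return sum(prices[i - window + 1 : i + 1]) / window


def backtest_crossover(prices: list[float], fast: int, slow: int) -> float:
    """Total log return of a long/flat moving-average crossover.

    The signal at index i uses prices up to i only and earns the return from i to i + 1,
    so there is no look-ahead.
    """
    total = 0.0
    for i in range(slow, len(prices) - 1):
        if sma(prices, fast, i) > sma(prices, slow, i):
            total += math.log(prices[i + 1] / prices[i])
    return total


def strategy_factory(fast_windows, slow_windows) -> list[tuple[int, int]]:
    """Mass-produce candidate strategies as (fast, slow) window pairs."""
    return [(f, s) for f, s in itertools.product(fast_windows, slow_windows) if f < s]


def select_strategy(prices, candidates, split=0.7):
    """Pick the best candidate in-sample, then report its result on unseen data.

    The out-of-sample segment is backtested on its own, so its first `slow` points are warm-up.
    """
    cut = int(len(prices) * split)
    in_sample, out_of_sample = prices[:cut], prices[cut:]
    best = max(candidates, key=lambda c: backtest_crossover(in_sample, *c))
    return best, backtest_crossover(in_sample, *best), backtest_crossover(out_of_sample, *best)


rng = random.Random(1)
prices = [100.0]
for _ in range(1_000):
    prices.append(prices[-1] * math.exp(rng.gauss(0.0, 0.01)))  # synthetic price path

candidates = strategy_factory([5, 10, 20], [30, 50, 100])
best, in_sample_ret, out_sample_ret = select_strategy(prices, candidates)
print(f"{len(candidates)} candidates, best={best}, "
      f"in-sample={in_sample_ret:.3f}, out-of-sample={out_sample_ret:.3f}")
```

Picking the best of many in-sample backtests inflates its in-sample score, since some candidates win by luck alone; that selection bias is why the out-of-sample figure is the one worth trusting.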

What were the results?

From March to July 2020, we achieved a profit of +15.32%. Then came a big leap: by August 9, we were already at +46.1%, a satisfying result in itself. From March to October 2020, we achieved a total profit of +65.84%.
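The article does not say how these percentages are computed. Assuming each one is a cumulative, compounded return measured from the start of March, the return earned between checkpoints and an equivalent constant monthly rate can be recovered as below. If the figures are instead simple sums of per-trade results, the gaps are plain subtractions: 30.78 and 19.74 percentage points.

```python
# Cumulative returns quoted in the text, assumed to compound from the start of March 2020.
reported = {"end of July": 0.1532, "August 9": 0.461, "end of October": 0.6584}


def period_return(cum_start: float, cum_end: float) -> float:
    """Return earned between two checkpoints, assuming both figures compound from the same start."""
    return (1 + cum_end) / (1 + cum_start) - 1


def monthly_equivalent(cum: float, months: float) -> float:
    """Constant monthly rate that compounds to `cum` over `months` months."""
    return (1 + cum) ** (1 / months) - 1


print(f"July -> August 9:    {period_return(reported['end of July'], reported['August 9']):.2%}")
print(f"August 9 -> October: {period_return(reported['August 9'], reported['end of October']):.2%}")
print(f"Monthly equivalent over 8 months: {monthly_equivalent(reported['end of October'], 8):.2%}")
```

Under that assumption, the result is roughly 26.7% from the end of July to August 9, 13.5% from August 9 to the end of October, and about 6.5% per month compounded over the 8 months from March to October.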

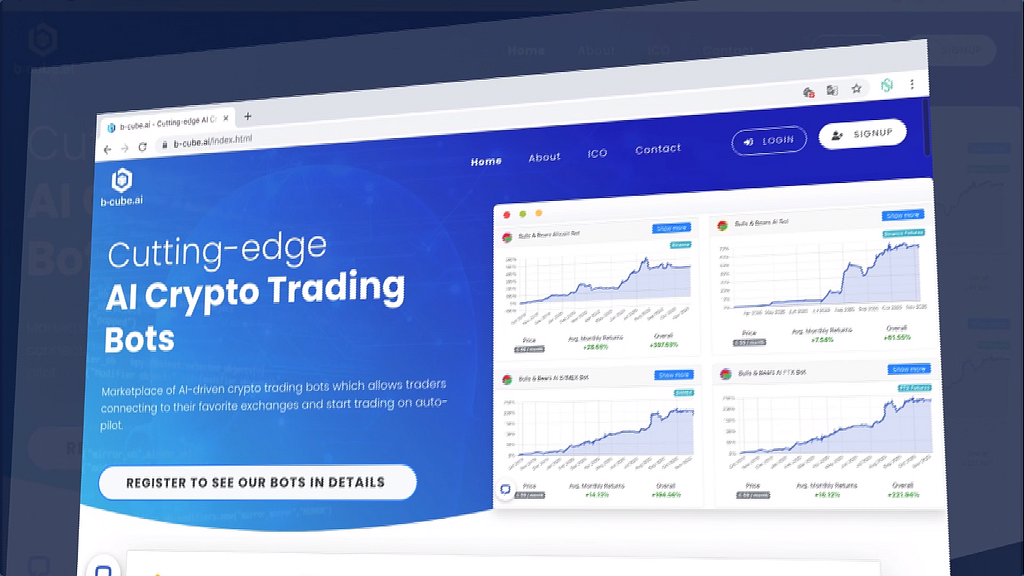

We made our AI Bot public today.

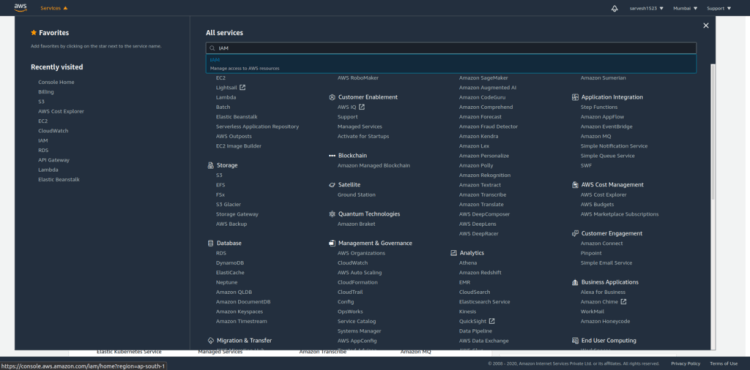

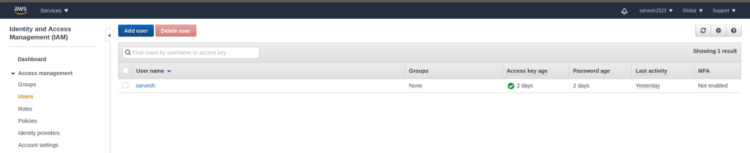

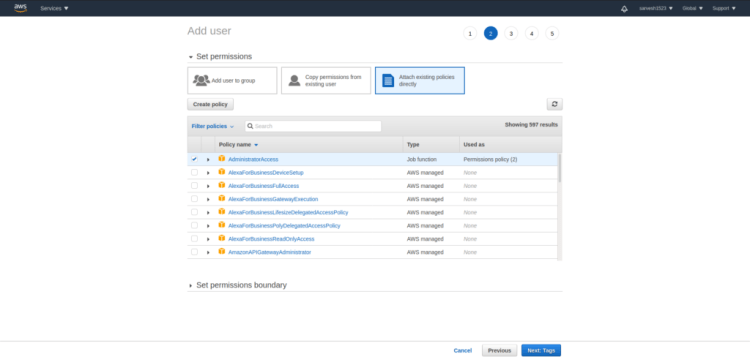

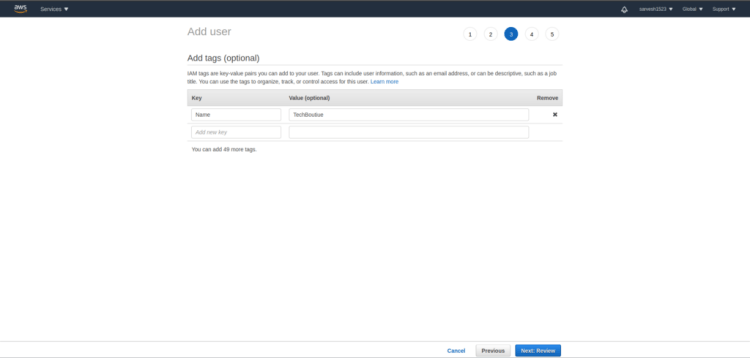

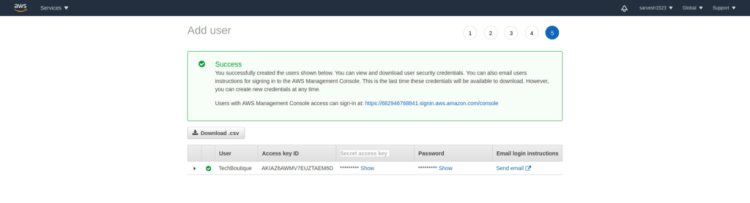

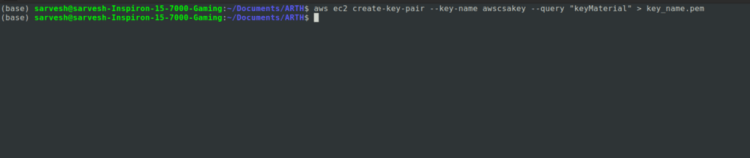

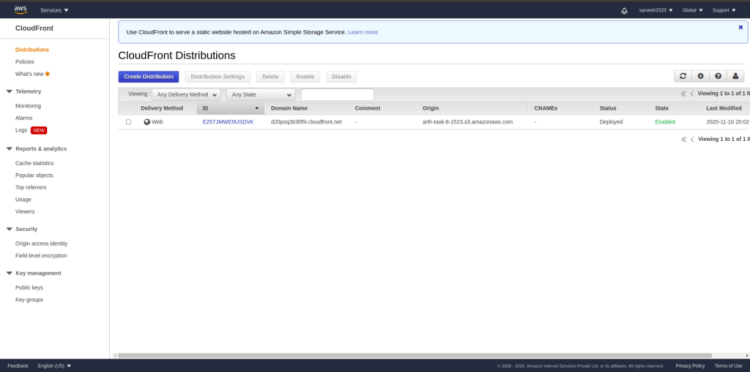

From now on, you can put to work the same Bot that earned us over 60% in the last 8 months. You will need a Binance Futures account and a subscription that costs €59 per month. The Binance account has to be connected through its API. If this is a bit complicated, or you don’t have an account yet, check out our website for more help:

In the end, we can say that it is indeed possible to make a consistent profit in cryptocurrency markets, and that this is no longer exclusive: it is available to retail traders too. We have uploaded every trade made so far to our website, including its start and end dates, the pair traded and the result, so anyone can track them. Look at the past results and calculate how you would have performed on your own account. Register and select the Bulls & Bears AI Bot!
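One way to do that calculation is to replay the published trade list on a hypothetical account. The sketch below assumes each trade's result is a fractional return on the capital staked, that trades run one after another without overlapping, and that fees are a flat rate per trade. The `Trade` fields mirror the data listed above (pair, start and end dates, result), but the class, the function and the example trades are illustrative, not the website's actual format, and the sketch ignores leverage and other futures-specific costs.

```python
from dataclasses import dataclass


@dataclass
class Trade:
    pair: str
    start: str     # start date, as listed in the trade history
    end: str       # end date
    result: float  # assumed: fractional return on the capital staked, e.g. 0.02 for +2%


def replay_account(trades, starting_balance, fraction=1.0, fee_rate=0.0,
                   subscription_per_month=0.0, months=0):
    """Replay trades in order on a hypothetical account and return the final balance.

    Assumes trades do not overlap, each stakes `fraction` of the current balance, and
    `fee_rate` is a round-trip trading cost charged on the stake.
    """
    balance = starting_balance
    for trade in trades:
        stake = balance * fraction
        balance += stake * (trade.result - fee_rate)
    return balance - subscription_per_month * months


# Hypothetical example trades, not taken from the published history.
trades = [
    Trade("BTC/USDT", "2020-03-02", "2020-03-05", 0.10),
    Trade("ETH/USDT", "2020-03-06", "2020-03-09", -0.05),
]
print(replay_account(trades, 1_000))
print(replay_account(trades, 1_000, subscription_per_month=59, months=2))
```

With these two hypothetical trades, €1,000 grows to €1,045 before costs; deducting two months of the €59 subscription leaves €927, so on a small account the fixed fee can outweigh the trading gains.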

“Amateurs develop individual strategies, believing that there is such a thing as a magical formula for riches. In contrast, professionals develop methods to mass-produce strategies. The money is not in making a car, it is in making a car factory.” — López de Prado, 2018

DISCLAIMER

Trading cryptocurrencies involves risk. The information provided on this website does not constitute investment advice, financial advice, trading advice, or any other sort of advice, and you should not treat any of the article’s content as such. Neither the author, the website, nor the company associated with them recommends that you buy, sell or hold any cryptocurrency. Do conduct your own due diligence and consult your financial advisor before making any investment decisions.


They didn’t believe AI can make profits in Crypto trading, but when they saw the results of the… was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.